Small tests to yield big answers on what influences LLMs

Undoubtedly, one of the hot topics in SEO over the last few months has been how to influence LLM answers. Every SEO is trying to come up with strategies. Many have built their own tools with “vibe coding” to test their hypotheses, and there are heated debates about what each LLM and Google use to pick their sources.

Some of these debates can get very technical, touching on topics like vector embeddings, passage ranking, retrieval-augmented generation (RAG), and chunking. These theories are great—there’s a lot to learn from them and turn into practice.

However, if some of these AI concepts are going way over your head, let’s take a step back. I’ll walk you through some recent tests I’ve run. They should help you understand what’s going on in AI search without feeling overwhelmed, so you can start optimizing for these new platforms.

Create branded content and check for results

A while ago, I went to Austin, Texas, for a business outing. Before the trip, I wondered if I could “teach” ChatGPT about my upcoming travels. There was no public information about the trip on the web, so it was a completely clean test with no competition.

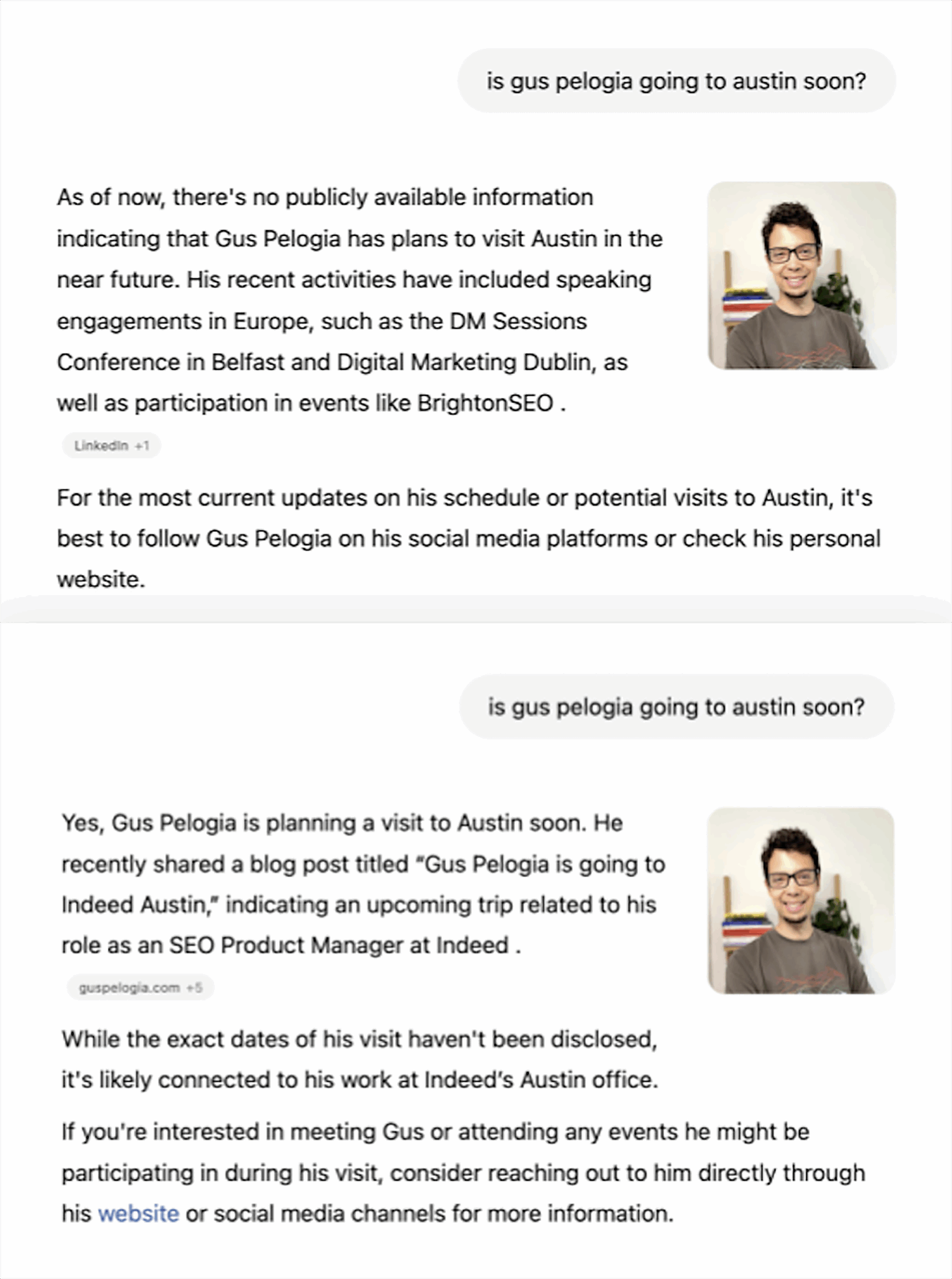

I asked ChatGPT, “Is Gus Pelogia going to Austin soon?” The initial answer was what you’d expect: he doesn’t have any trips planned to Austin.

That same day, a few hours later, I wrote a blog post on my website about my trip to Austin. Six hours after I published the post, ChatGPT’s answer changed: Yes, Gus IS going to Austin to meet his work colleagues.

ChatGPT prompts before and after the blog post was published: the new post was enough to change ChatGPT’s answer.

ChatGPT used retrieval-augmented generation (RAG) to fetch the latest information. In short, its training data didn’t contain anything about this trip, so it searched the web for an up-to-date answer.

Interestingly, it took a few days for ChatGPT to find the blog post itself. Within the first six hours, ChatGPT had only picked up a snippet of the new post from my refreshed homepage. It changed its answer based on the blog post’s page title alone, before actually “seeing” the full content days later.
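If RAG still feels abstract, here is a toy Python sketch of the basic retrieve-then-generate loop. It is not how ChatGPT works internally (those details aren’t public): the two pages, the word-overlap scoring in `retrieve`, and the `build_prompt` helper are all hypothetical stand-ins for a real search index and a real model call. What it does illustrate matches the test: the model can only answer with whatever text the retrieval step hands it, which at first was just the post title on my homepage.

```python
import re

# Hypothetical pages a search step might return (illustrative text only).
PAGES = {
    "https://example.com/": "Latest post: my upcoming trip to Austin to meet work colleagues",
    "https://example.com/about": "About Gus: writing about SEO and product",
}


def tokenize(text: str) -> set[str]:
    """Lowercase text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, pages: dict[str, str], k: int = 1) -> list[str]:
    """Return the URLs of the k pages sharing the most words with the query.

    Word overlap is a toy stand-in for a real search index or vector search.
    """
    query_words = tokenize(query)
    return sorted(
        pages,
        key=lambda url: len(query_words & tokenize(pages[url])),
        reverse=True,
    )[:k]


def build_prompt(query: str, pages: dict[str, str], k: int = 1) -> str:
    """Augment the question with retrieved text; a real system would send this to an LLM."""
    context = "\n".join(f"[{url}] {pages[url]}" for url in retrieve(query, pages, k))
    return f"Answer using only these sources:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("Is Gus going to Austin soon?", PAGES))
```

If the homepage text didn’t mention Austin, retrieval would surface nothing relevant and the “answer” would fall back to whatever the model already knew.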

Some learnings from this experiment:

- New information on webpages can reach ChatGPT answers within hours, even for small websites. Don’t think your website is too small or insignificant to get noticed by LLMs: they’ll notice when you add new content or refresh existing pages, so it’s important to have an ongoing brand content strategy.

- The answers in ChatGPT are highly dependent on the content published on your website. This is especially true for new companies where there are limited sources of information. ChatGPT didn’t confirm that I had upcoming travel until it fetched the information from my blog post detailing the trip.

- Use your webpages to shape how your brand is portrayed, beyond ranking for competitive search keywords. This is your opportunity to promote a specific USP or brand tagline. For instance, Semrush and Instagram use “The Leading AI-Powered Marketing Platform” and “See everyday moments from your close friends,” respectively, on their homepages. While users probably aren’t searching for these phrases, they’re still an opportunity for brand positioning that will resonate with them.


Test to see if ChatGPT is using Bing or Google’s index

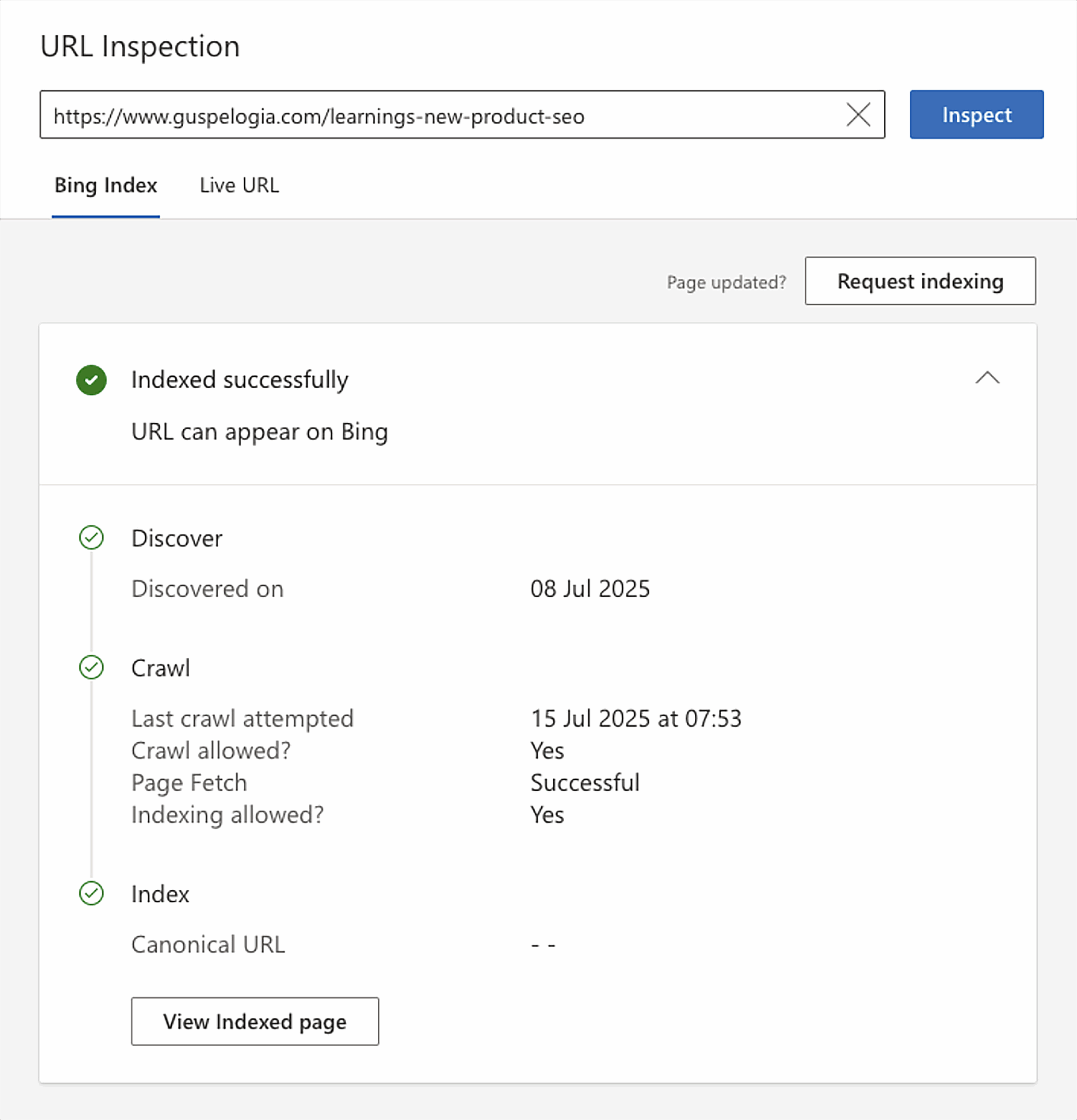

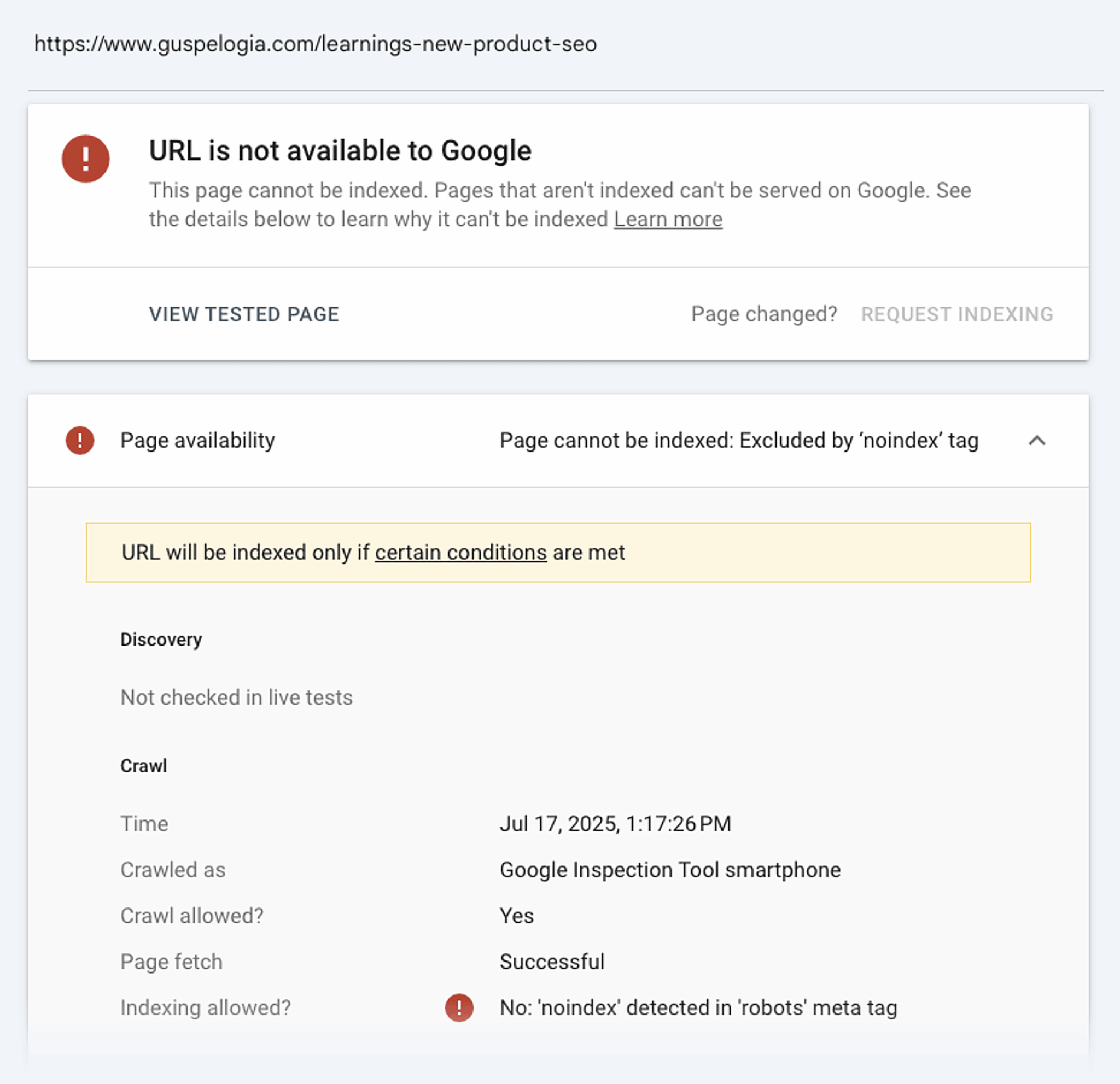

The industry has been debating whether ChatGPT uses Google’s index instead of Bing’s. So I ran another small test to find out: I added a <meta name="googlebot" content="noindex"> tag to the blog post, blocking Googlebot from indexing it for nine days while leaving Bingbot free to index it.

If ChatGPT is using Bing’s index, it should find my new page when I prompt about it. Again, this was on a new topic and the prompt specifically asked for an article I wrote, so there wouldn’t be any doubts about what source to show.

The page got indexed by Bing after a couple of days, while Google wasn’t allowed to see it.
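A test like this only works if the tag is really in place and scoped to Googlebot alone. Here is a minimal Python sketch, using only the standard library, for checking a page’s HTML before you start prompting. The class and function names are hypothetical, and it only inspects meta tags; an `X-Robots-Tag` HTTP header, which Google also honors, would need a separate check.

```python
from html.parser import HTMLParser
from typing import Optional


class MetaRobotsParser(HTMLParser):
    """Collect <meta name="..." content="..."> directives, keyed by lowercase name."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: dict[str, set[str]] = {}

    def handle_starttag(self, tag: str, attrs: list[tuple[str, Optional[str]]]) -> None:
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        name = attributes.get("name", "").strip().lower()
        if not name:
            return
        # "noindex, nofollow" -> {"noindex", "nofollow"}
        values = {part.strip().lower() for part in attributes.get("content", "").split(",")}
        self.directives.setdefault(name, set()).update(values)


def is_noindexed_for(html: str, crawler: str) -> bool:
    """True if the page carries "noindex" for this crawler or for all robots."""
    parser = MetaRobotsParser()
    parser.feed(html)
    applicable = parser.directives.get(crawler.lower(), set()) | parser.directives.get("robots", set())
    return "noindex" in applicable


if __name__ == "__main__":
    page = '<html><head><meta name="googlebot" content="noindex"></head></html>'
    print(is_noindexed_for(page, "googlebot"))  # True
    print(is_noindexed_for(page, "bingbot"))  # False
```

A generic `robots` noindex would block every crawler, including Bingbot, which would invalidate this kind of test; that is why the sketch checks both the crawler-specific name and `robots`.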

New article has been indexed by Bingbot

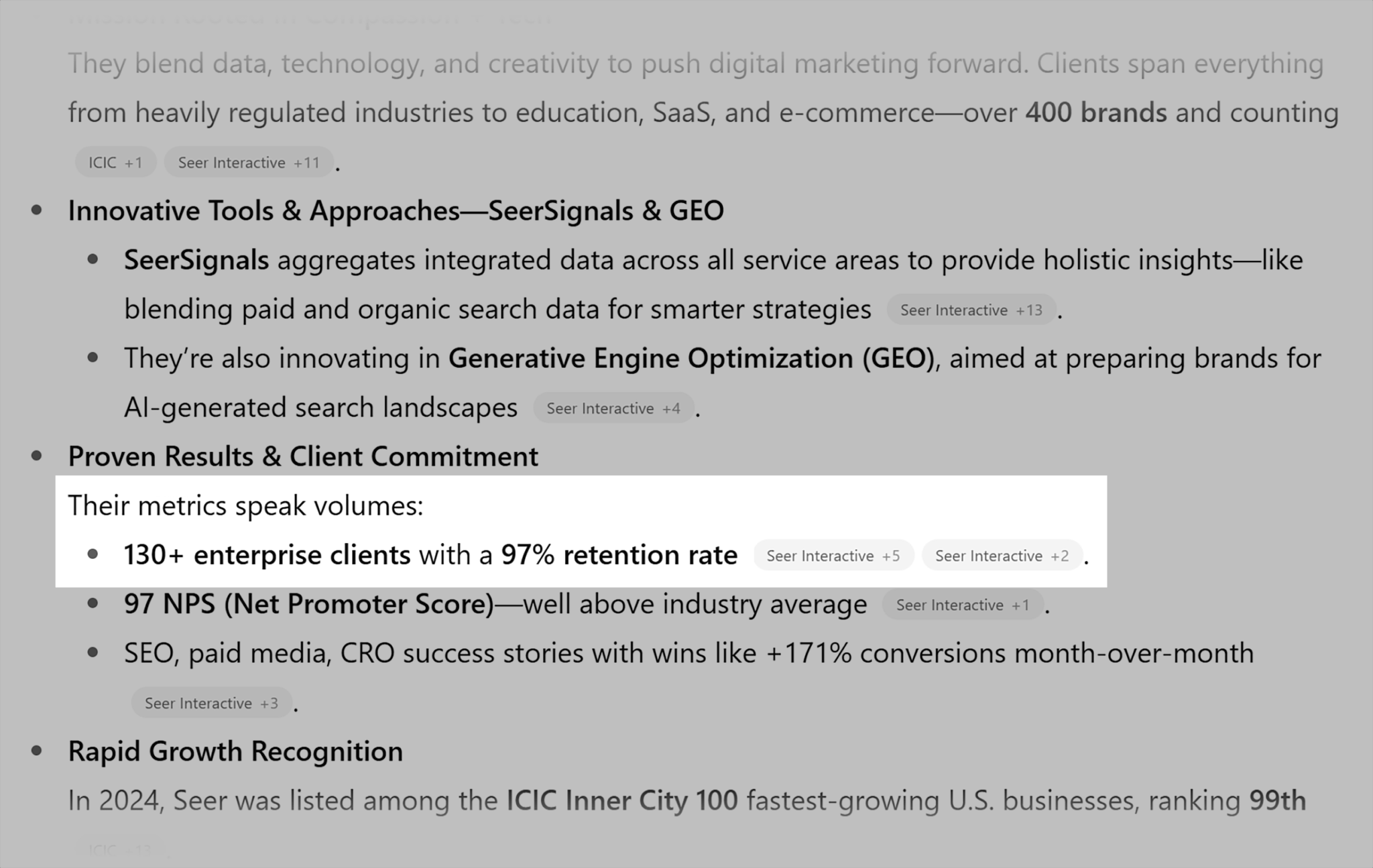

I kept asking ChatGPT, with multiple prompt variations, whether it could find my new article. For nine days, nothing changed: it couldn’t find the article. It got to the point where ChatGPT hallucinated a URL (or, to be fair, made its best guess).

ChatGPT made-up URL: https://www.guspelogia.com/learnings-from-building-a-new-product-as-an-seo

Real URL: https://www.guspelogia.com/learnings-new-product-seo

Google Search Console (GSC) shows that Google can’t index the page due to the “noindex” tag

I eventually gave up and allowed Googlebot to index the page. A few hours later, ChatGPT changed its answer and found the correct URL.

Top: ChatGPT’s answer when Googlebot was blocked. Bottom: ChatGPT’s answer after Googlebot was allowed to see the page.

Interestingly, my homepage and blog pages linked to the article, yet ChatGPT couldn’t display the link. It only learned that the blog post existed from the text on those pages; it didn’t follow the link.

Still, there’s no harm in setting up your website for success on Bing. Bing is one of the search engines that adopted IndexNow, a protocol that lets a site ping participating search engines when a URL’s content has changed. This allows Bing to reflect updates in its search results quickly.

While there’s evidence to suspect that ChatGPT isn’t relying on Bing’s index, setting up IndexNow is a low-effort task that’s still worthwhile.
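For reference, an IndexNow submission is a small JSON POST. This Python sketch builds one with the standard library but doesn’t send it. The field names (`host`, `key`, `keyLocation`, `urlList`) and the `api.indexnow.org` endpoint follow the public IndexNow documentation as I understand it, so double-check them against indexnow.org before relying on this. The host, key, and URL are placeholders, and the key file (`https://<host>/<key>.txt`) has to be live on your site before a submission will be accepted.

```python
import json
import re
import urllib.request
from urllib.parse import urlparse

# Generic IndexNow endpoint (assumption: verify on indexnow.org); the protocol
# shares submissions among participating engines, including Bing.
INDEXNOW_ENDPOINT = "https://api.indexnow.org/IndexNow"
# Keys are 8-128 characters of letters, digits, and dashes.
KEY_PATTERN = re.compile(r"[A-Za-z0-9-]{8,128}")


def build_indexnow_request(host: str, key: str, urls: list[str]) -> urllib.request.Request:
    """Build (but don't send) an IndexNow POST for URLs on a single host."""
    if not KEY_PATTERN.fullmatch(key):
        raise ValueError("IndexNow keys must be 8-128 letters, digits, or dashes")
    foreign = [url for url in urls if urlparse(url).netloc != host]
    if foreign:
        raise ValueError(f"All URLs must belong to {host}: {foreign}")
    payload = {
        "host": host,
        "key": key,
        # The key file must be publicly reachable at this location.
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": urls,
    }
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder host, key, and URL: replace with your own.
    request = build_indexnow_request(
        "www.example.com", "a1b2c3d4e5f6", ["https://www.example.com/new-post"]
    )
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
    # To actually submit: urllib.request.urlopen(request)
```

Some SEO plugins and CDNs offer IndexNow integrations, which may save you from writing your own.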

Change the content on a source used by RAG

Clicks are becoming less important. Instead, being mentioned in sources like Google’s AI Mode is emerging as a new KPI for marketing teams. SEOs are testing multiple tactics to “convince” LLMs about a topic, from writing about it on LinkedIn Pulse to controlled experiments with expired domains and hacked sites. In some ways, it feels like old-school SEO is back.

We’re all talking about being included in AI search results, but what happens when a company or product loses a mention on a page? Imagine a specific model of earbuds is removed from a “top budget earbuds” list—would the product lose its mention, or would Google find a new source to back up its AI answer?

While the answer may differ for each user and situation, I ran another small test to find out.

In a listicle that mentioned multiple certification courses, I identified one course that was no longer relevant, so I removed mentions of it from multiple pages on the same domain. I did this to keep the content up to date; measuring the changes in AI Mode was a side effect.

For the first few days after I removed the course from the cited URL, it remained part of the AI answer for a few predetermined prompts. Google simply found a new URL on another domain to validate its initial view.

However, within a week, the course disappeared from AI Mode and ChatGPT completely. Even though Google had found another URL validating the course listing, once the “original source” (in this case, the listicle) was updated to remove the course, Google (and, by extension, ChatGPT) updated its results as well.

This experiment suggests that changing the content on the source cited by LLMs can impact the AI results. But take this conclusion with a pinch of salt, as it was a small test with a highly targeted query. I specifically had a prompt combining “domain + courses” so the answer would come from one domain.

Nonetheless, while in the real world it’s unlikely one citation URL would hold all the power, I’d hypothesize that losing a mention on a few high-authority pages would have the side effect of losing the mention in an AI answer.

Test small, then scale

Tests in small, controlled environments are important for learning and give you confidence that your optimization has an effect. Like everything else I do in SEO, I start with an MVP (minimum viable product), learn along the way, and, if and when I find evidence, make changes at scale.

Do you want to change how ChatGPT perceives a product? You won’t get dozens of cited sources to talk about you straight away; you’d have to reach out to each source individually and request a mention. You’ll quickly learn how hard it is to convince these sources to update their content, and whether AI optimization is becoming a pay-to-play game or can still be done organically.

Perhaps you’re a source that’s often cited when people search for a product, like earbuds. Run your MVPs to understand how much changing your content influences AI answers before you roll out changes at scale, as the changes you make could backfire. For example, what if you stop being a source for a topic because you removed certain claims from your pages?

There’s no set time for these tests to show results. As a general rule, SEOs say results take a few months to appear, yet in the first test in this article, it took just a few hours.

Running LLM tests with larger websites

Working in large teams or on large websites can be a challenge when doing LLM testing. My suggestion is to create specific initiatives and inform all stakeholders about changes to avoid confusion later, as they might question why these changes are happening.

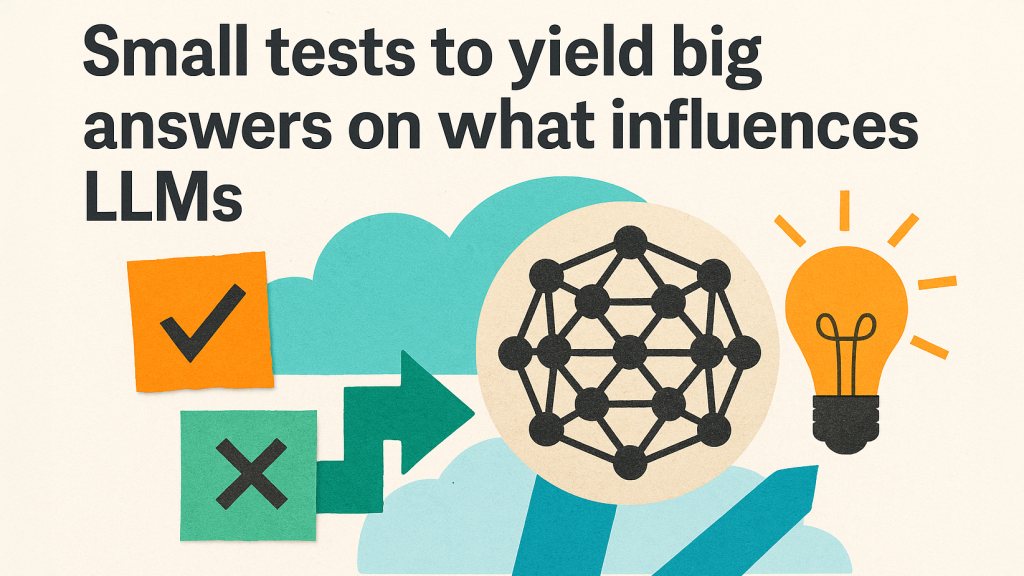

One simple but effective test by Seer Interactive was to update its footer tagline:

- From: Remote-first, Philadelphia-founded

- To: 130+ Enterprise Clients, 97% Retention Rate

After the footer change, ChatGPT 5 started mentioning the new tagline within 36 hours for a prompt like “tell me about Seer Interactive.” I’ve checked, and while the answer is different every time, it still mentions the “97% retention rate.”

Imagine if you decide to change the content on a number of pages, but someone else has an optimization plan for those same pages. Always run just one test per page, as results will become less reliable if you have multiple variables.

Make sure to research your prompts, have a tracking methodology, and spread the learnings across the company, beyond your SEO counterparts. Everyone is interested in AI right now, all the way up to C-levels.
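One lightweight tracking methodology is to re-run the same prompts on a schedule, log every answer, and measure how often the phrase you’re testing shows up. This Python sketch assumes a hypothetical log of `(day, prompt, answer)` tuples that you collect yourself, manually or with a tool; the brand “Acme” and its answers are made-up sample data, not real results.

```python
from collections import defaultdict
from datetime import date


def mention_rate_by_day(
    records: list[tuple[date, str, str]], phrase: str
) -> dict[date, float]:
    """Share of logged answers per day that contain `phrase` (case-insensitive).

    `records` is a hypothetical log of (day, prompt, answer) tuples gathered by
    re-running the same prompts on a schedule.
    """
    totals: dict[date, int] = defaultdict(int)
    hits: dict[date, int] = defaultdict(int)
    needle = phrase.lower()
    for day, _prompt, answer in records:
        totals[day] += 1
        if needle in answer.lower():
            hits[day] += 1
    return {day: hits[day] / totals[day] for day in sorted(totals)}


if __name__ == "__main__":
    # Made-up sample log for a fictional brand, before and after a tagline change.
    log = [
        (date(2025, 1, 1), "tell me about Acme", "Acme is a marketing agency."),
        (date(2025, 1, 1), "tell me about Acme", "Acme works with B2B clients."),
        (date(2025, 1, 3), "tell me about Acme", "Acme reports a 97% retention rate."),
        (date(2025, 1, 3), "tell me about Acme", "Acme is an enterprise agency."),
    ]
    print(mention_rate_by_day(log, "97% Retention Rate"))
    # {datetime.date(2025, 1, 1): 0.0, datetime.date(2025, 1, 3): 0.5}
```

Because answers vary from run to run, a rate across several runs per day is more informative than a single yes/no check.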

Another suggestion is to use a tool like Semrush’s AI SEO toolkit to see the key sentiment drivers about a brand. Start with the listed “Areas for Improvement”—this should give you plenty of ideas for tests beyond “SEO Reason,” as it reflects how the brand is perceived beyond organic results.

Checklist: Getting started with LLM optimization

Things are changing fast with AI, and it’s certainly challenging to keep up to date. There’s an overload of content right now, a multitude of claims, and, I dare say, not even the companies running the LLM platforms have things fully figured out.

My recommendation is to find the sources you trust (industry news, events, professionals) and run your own tests using the knowledge you have. The results you find for your brands and clients are always more valuable than what others are saying.

It’s a new world of SEO and everyone is trying to figure out what works for them. The best way to follow the curve (or stay ahead of it) is to keep optimizing and documenting your changes.

To wrap it up, here’s a checklist for your LLM optimization:

- Before starting a test, make sure your selected prompts consistently return the answer you expect (such as not mentioning your brand or a feature of your product). Otherwise, the new brand mention or link could be a coincidence, not a result of your work.

- If the same claim is made on multiple pages on your website, update all of them to increase your chances of success.

- Use your own website and external sources (e.g., via digital PR) to influence your brand perception. It’s unclear if users will cross-check AI answers or just trust what they’re told.
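The first checklist item can be partly automated. Assuming you have already collected several baseline answers per prompt (a hypothetical `baseline` dict mapping each prompt to its answers), this Python sketch keeps only prompts that were run enough times and never mentioned the target. The `min_runs=5` threshold is an arbitrary assumption, not an established standard, and the brands in the example are made up.

```python
def usable_prompts(
    baseline: dict[str, list[str]], target: str, min_runs: int = 5
) -> list[str]:
    """Return prompts whose baseline answers never mention `target`.

    Prompts with fewer than `min_runs` recorded answers are skipped, since a
    handful of runs can't show that the answer is consistent.
    `min_runs=5` is an arbitrary, hypothetical threshold.
    """
    needle = target.lower()
    usable = []
    for prompt, answers in baseline.items():
        if len(answers) < min_runs:
            continue
        if all(needle not in answer.lower() for answer in answers):
            usable.append(prompt)
    return usable


if __name__ == "__main__":
    baseline = {
        "best budget earbuds": ["Brand X and Brand Y are popular picks."] * 5,
        "cheap earbuds for running": ["Try Brand X."] * 4 + ["Acme Buds are a good option."],
        "earbuds under $50": ["Brand Y is affordable."] * 3,
    }
    print(usable_prompts(baseline, "Acme"))  # ['best budget earbuds']
```

A prompt that already mentions your brand before the test starts can’t tell you whether your change worked, so it is dropped rather than tracked.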
