Robots.txt & Duplicate Content

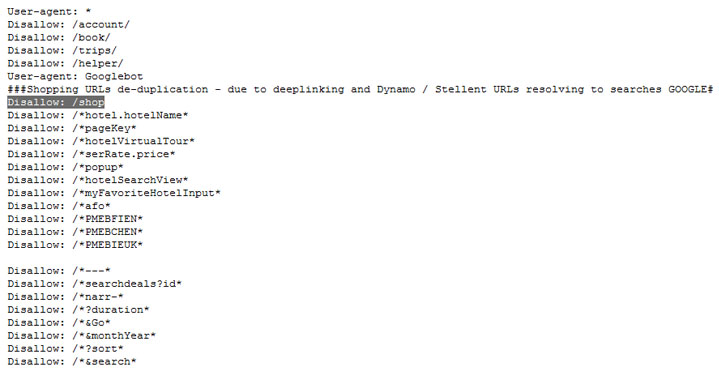

As most SEOs know, the robots.txt file sits in the root of the site and is a list of instructions for search engines (and other bots, if they adhere to it) to follow. You can use it to specify where your XML Sitemap is, as well as to prevent Google and the other search engines from accessing pages that you choose to block.

Before Googlebot crawls your site, it checks whether you have a robots.txt file (in practice it generally works from a cached copy that it refreshes about once a day). If the robots.txt file blocks any pages, Google won’t crawl them.
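To make the mechanics concrete, here’s a minimal sketch using Python’s standard `urllib.robotparser`. The robots.txt contents, the `example.com` URLs and the `/print/` path are all made up for illustration, and the standard-library parser only approximates how Googlebot itself reads the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks a print version and points to a sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /print/
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url: str, agent: str = "Googlebot") -> bool:
    """Return True if the parsed rules allow `agent` to fetch `url`."""
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    # Sitemap URLs declared in the file (site_maps() needs Python 3.8+).
    print(parser.site_maps())
    # A normal page versus a page under the blocked /print/ path.
    print(is_crawlable("https://www.example.com/flights"))
    print(is_crawlable("https://www.example.com/print/flights"))
```

A well-behaved bot makes this kind of check before requesting a URL, which is why a blocked page is never fetched at all.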

For years, website owners and web developers have used the robots.txt file to block Google from accessing duplicate content. Whether it’s blocking URLs that use tracking parameters, blocking the mobile or print versions of a site, or just papering over flaws in a CMS, I’ve seen a lot of duplicate content blocked with robots.txt in my time.

Why blocking URLs doesn’t help

But the robots.txt file is a terrible way to deal with duplicate content. Even if you’re 301 redirecting the duplicate URL to the real one, or using the canonical tag to reference the proper URL, the robots.txt file works against you.

If you have a 301 that redirects to the proper page, but you block the old URL with robots.txt, Google isn’t allowed to crawl that page to see the 301. For example, have a look at Ebooker’s listing for ‘flights’:

![]()

The URL that’s ranking (on page 1 of Google for ‘flights’) is blocked in robots.txt. It has no proper snippet because Google can’t see what’s on the page, so it has guessed at the title based on the anchor text other sites have used to link to it. And here’s the reason why Google can’t crawl that URL:

If Ebooker unblocked that URL, Google would be able to crawl it to discover the 301, and the page would most likely have a better chance of ranking higher (as it wouldn’t just appear to be a blank page to the search engines).

If you block Google from seeing a duplicate page, it’s not able to crawl it and see that it’s a duplicate. If there’s a canonical tag on that page, it may as well not be there, as Google won’t be able to see it. If it redirects elsewhere, Google won’t know.
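The effect can be sketched as a toy simulation. Everything here is hypothetical: the `SITE` table stands in for a real server, the paths are invented, and `crawl` is a simplified stand-in for a robots.txt-respecting crawler rather than a model of how Google actually works.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical server behaviour: path -> (status, redirect target, canonical URL).
SITE = {
    "/old-flights": (301, "/flights", None),    # duplicate that 301s to the real page
    "/flights-print": (200, None, "/flights"),  # duplicate carrying a canonical tag
    "/flights": (200, None, "/flights"),        # the real page
}


def make_parser(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def crawl(path: str, parser: RobotFileParser, agent: str = "Googlebot") -> dict:
    """Return the signals a robots.txt-respecting crawler can learn about `path`."""
    if not parser.can_fetch(agent, path):
        # Blocked: the request is never made, so the redirect and the
        # canonical tag stay invisible to the crawler.
        return {"fetched": False, "status": None, "redirect": None, "canonical": None}
    status, redirect, canonical = SITE[path]
    return {"fetched": True, "status": status, "redirect": redirect, "canonical": canonical}


blocking = make_parser("User-agent: *\nDisallow: /old-flights\nDisallow: /flights-print\n")
open_rules = make_parser("User-agent: *\nDisallow:\n")  # an empty Disallow allows everything

if __name__ == "__main__":
    for path in ("/old-flights", "/flights-print"):
        print(path, "blocked:", crawl(path, blocking))
        print(path, "unblocked:", crawl(path, open_rules))
```

With the blocking rules in place, both duplicates come back with no redirect and no canonical. With the rules removed, the crawler can see the 301 and the canonical tag and follow them to `/flights`.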

If you have duplicate content, don’t block the search engines from seeing it. You’ll just prevent the links to those blocked pages from fully counting.
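If you want to check your own site, something like the following sketch could flag duplicate URLs that your robots.txt is hiding. The function name `blocked_duplicates`, the rules and the URLs are all hypothetical, and Python’s parser does simple prefix matching, so treat the result as a first pass rather than a verdict on what Googlebot will do.

```python
from urllib.robotparser import RobotFileParser


def blocked_duplicates(robots_txt: str, duplicate_urls: list[str],
                       agent: str = "Googlebot") -> list[str]:
    """Return the duplicate URLs that the given robots.txt stops `agent` from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in duplicate_urls if not parser.can_fetch(agent, url)]


# Hypothetical rules and a hypothetical list of known duplicate URLs.
RULES = "User-agent: *\nDisallow: /print/\nDisallow: /m/\n"
DUPLICATES = [
    "https://www.example.com/print/flights",
    "https://www.example.com/m/flights",
    "https://www.example.com/flights-old",
]

if __name__ == "__main__":
    for url in blocked_duplicates(RULES, DUPLICATES):
        print("Blocked, so any 301 or canonical tag here is invisible:", url)
```

Any URL this returns is a candidate for unblocking, so that its redirect or canonical tag can actually be seen.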

Flickr image from Solo.

Robots.txt & Duplicate Content is a post from: Shark SEO.
