Indexing and SEO: 9 steps to get your content indexed by Google and Bing

Sick of seeing the status “Discovered – currently not indexed” in Google Search Console (GSC)?

So am I.

Too much SEO effort is focused on ranking.

But many sites would benefit from looking one level up – to indexing.

Why?

Because your content can’t compete until it’s indexed.

Whether the selection system is ranking or retrieval-augmented generation (RAG), your content won’t matter unless it’s indexed.

The same goes for where it appears – traditional SERPs, AI-generated SERPs, Discover, Shopping, News, Gemini, ChatGPT, or whatever AI agents come next.

Without indexing, there’s no visibility, no clicks, and no impact.

And indexing issues are, unfortunately, very common.

Based on my experience working with hundreds of enterprise-level sites, an average of 9% of valuable deep content pages (products, articles, listings, etc.) fail to get indexed by Google and Bing.

So, how do you ensure your deep content gets indexed?

Follow these nine proven steps to accelerate the process and maximize your site’s visibility.

Step 1: Audit your content for indexing issues

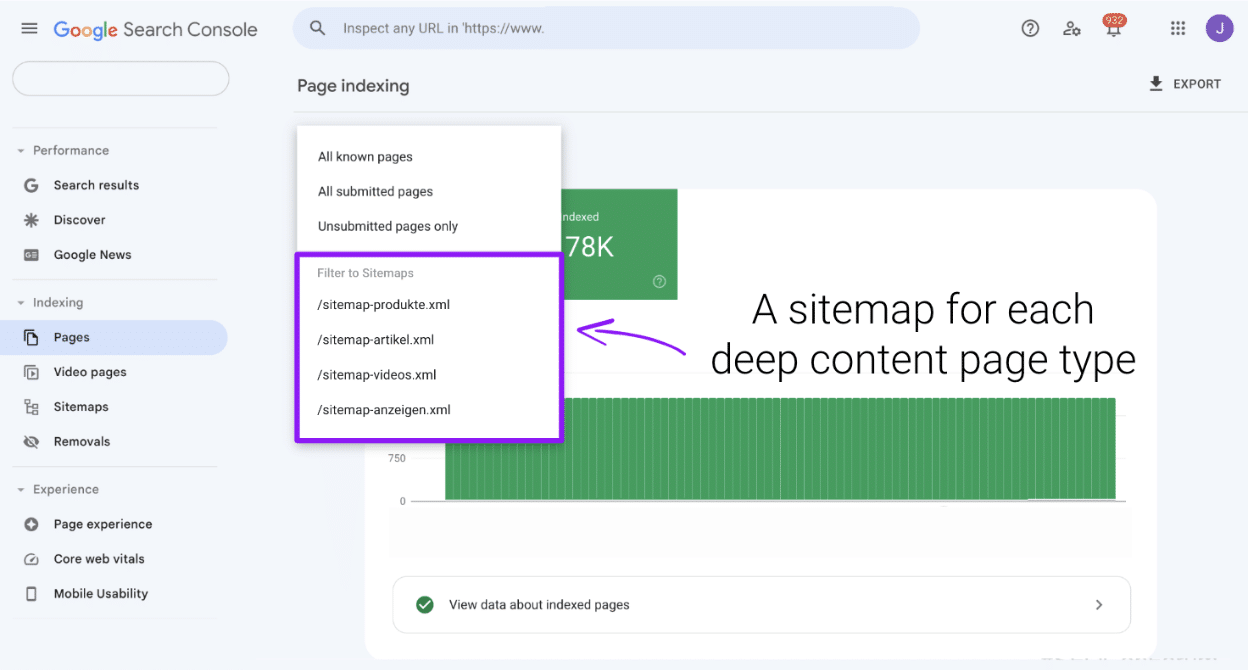

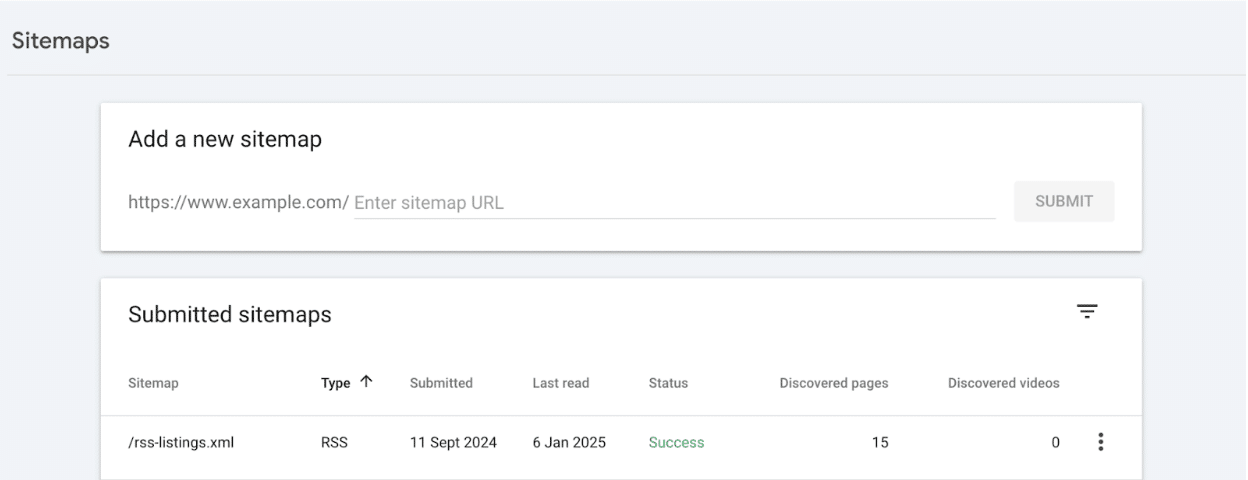

In Google Search Console and Bing Webmaster Tools, submit a separate sitemap for each page type:

- One for products.

- One for articles.

- One for videos.

- And so on.

After submitting a sitemap, it may take a few days to appear in the Pages interface.
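As a minimal sketch of the per-type split, the function below groups URLs by page type and writes one sitemap per group. The `(url, page_type)` input shape and the `sitemap-<type>.xml` file names are assumptions made for illustration, not something your CMS or either webmaster tool prescribes.

```python
from collections import defaultdict
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemaps(pages):
    """Group (url, page_type) pairs into one sitemap XML string per page type.

    Note: a single sitemap file may hold at most 50,000 URLs, so very large
    page types would need further splitting (not handled in this sketch).
    """
    by_type = defaultdict(list)
    for url, page_type in pages:
        by_type[page_type].append(url)

    sitemaps = {}
    for page_type, urls in by_type.items():
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in sorted(urls):
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        # Hypothetical naming scheme: one file per page type.
        sitemaps[f"sitemap-{page_type}.xml"] = ET.tostring(urlset, encoding="unicode")
    return sitemaps
```

Submitting each resulting file separately is what lets you filter the Pages report by page type later.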

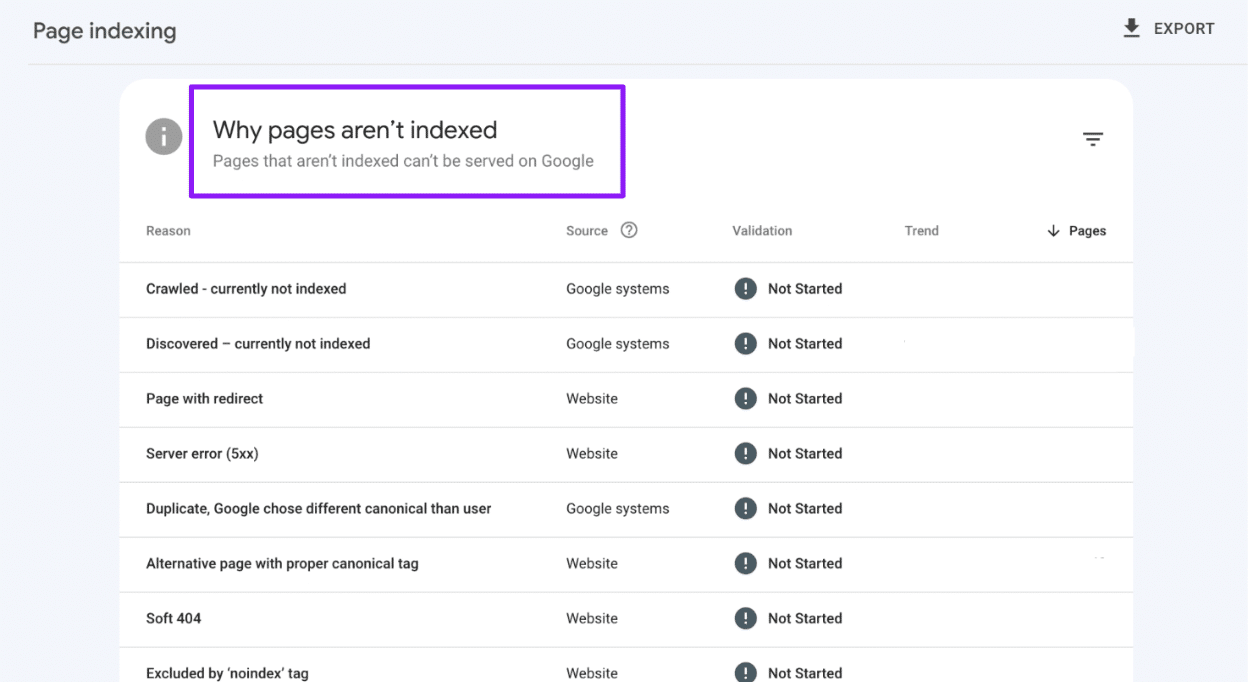

Use this interface to filter and analyze how much of your content has been excluded from indexing and, more importantly, the specific reasons why.

All indexing issues fall into three main categories:

- Poor SEO directives

  - These issues stem from technical missteps, such as:

    - Pages blocked by robots.txt.

    - Incorrect canonical tags.

    - Noindex directives.

    - 404 errors.

    - Or 301 redirects.

  - The solution is straightforward: remove these pages from your sitemap.

- Low content quality

  - If submitted pages are showing soft 404 or content quality issues, first ensure all SEO-relevant content is rendered server-side.

  - Once confirmed, focus on improving the content’s value – enhance the depth, relevance, and uniqueness of the page.

- Processing issues

  - These are more complex and typically result in exclusions like “Discovered – currently not indexed” or “Crawled – currently not indexed.”

While the first two categories can often be resolved relatively quickly, processing issues demand more time and attention.

By using sitemap indexing data as benchmarks, you can track your progress in improving your site’s indexing performance.
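One simple way to turn that benchmark into a number is an index rate per sitemap: indexed URLs divided by submitted URLs. The sketch below assumes you have copied the submitted and indexed counts out of the Pages report by hand; the figures shown are made up for illustration.

```python
def index_rates(sitemap_stats):
    """Return the share of submitted URLs that are indexed, per sitemap.

    sitemap_stats maps a sitemap name to a (submitted, indexed) tuple.
    """
    rates = {}
    for name, (submitted, indexed) in sitemap_stats.items():
        rates[name] = indexed / submitted if submitted else 0.0
    return rates


# Made-up example figures, not real data.
stats = {"products": (12000, 10920), "articles": (800, 776)}
for name, rate in index_rates(stats).items():
    print(f"{name}: {rate:.1%} indexed")
```

Recording these rates on a regular schedule gives you a trend line per page type rather than a single site-wide figure.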

Dig deeper: The 4 stages of search all SEOs need to know

Step 2: Submit a news sitemap for faster article indexing

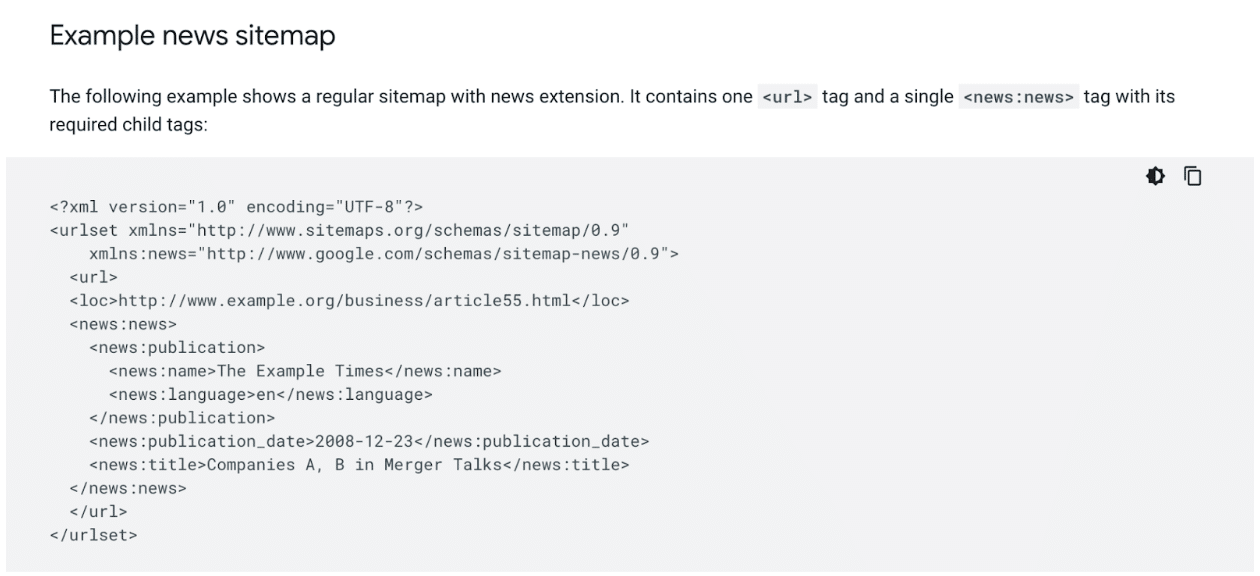

For article indexing in Google, be sure to submit a News sitemap.

This specialized sitemap includes specific tags designed to speed up the indexing of articles published within the last 48 hours.

Importantly, your content doesn’t need to be traditionally “newsy” to benefit from this submission method.
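Here is a rough sketch of what generating such a sitemap could look like. It uses the Google News sitemap namespace and its `news:publication`, `news:publication_date`, and `news:title` tags; the article dictionaries (`url`, `title`, `published`) are an assumed input shape, and `published` is expected to be a timezone-aware datetime.

```python
from datetime import datetime, timedelta, timezone
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
NEWS_NS = "http://www.google.com/schemas/sitemap-news/0.9"


def build_news_sitemap(articles, publication_name, language, now=None):
    """Build a News sitemap containing only articles from the last 48 hours."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(hours=48)

    # Serialize with a default namespace plus the "news:" prefix.
    ET.register_namespace("", SITEMAP_NS)
    ET.register_namespace("news", NEWS_NS)

    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for article in articles:
        if article["published"] < cutoff:
            continue  # older than 48 hours: leave it to the regular sitemap
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = article["url"]
        news = ET.SubElement(url, f"{{{NEWS_NS}}}news")
        publication = ET.SubElement(news, f"{{{NEWS_NS}}}publication")
        ET.SubElement(publication, f"{{{NEWS_NS}}}name").text = publication_name
        ET.SubElement(publication, f"{{{NEWS_NS}}}language").text = language
        ET.SubElement(news, f"{{{NEWS_NS}}}publication_date").text = (
            article["published"].isoformat()
        )
        ET.SubElement(news, f"{{{NEWS_NS}}}title").text = article["title"]
    return ET.tostring(urlset, encoding="unicode")
```

Regenerating the file on every publish keeps the 48-hour window current without any manual pruning.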

Step 3: Use Google Merchant Center feeds to improve product indexing

While this applies only to Google and specific categories, submitting your products to Google Merchant Center can significantly improve indexing.

Ensure your entire active product catalog is added and kept up to date.

Dig deeper: How to optimize your ecommerce site for better indexing

Step 4: Submit an RSS feed to speed up crawling

Create an RSS feed that includes content published in the last 48 hours.

Submit this feed in the Sitemaps section of both Google Search Console and Bing Webmaster Tools.

This works effectively because RSS feeds, by their nature, are crawled more frequently than traditional XML sitemaps.

Plus, indexers still respond to WebSub pings for RSS feeds, while the old ping endpoints for XML sitemaps are no longer supported.

To maximize benefits, ensure your development team integrates WebSub.
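For the development team, the publisher side of WebSub is small. A sketch, assuming your feed already advertises a hub via a `rel="hub"` link and that you use Google's public hub at `pubsubhubbub.appspot.com` (any hub works; the feed URL below is a placeholder): after each publish, send the hub a form-encoded POST naming the feed that changed.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_websub_ping(hub_url, feed_url):
    """Build the 'content changed' notification a publisher sends to its hub."""
    body = urlencode({"hub.mode": "publish", "hub.url": feed_url}).encode("ascii")
    return Request(
        hub_url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


# Placeholder feed URL; the hub shown is an assumption about your setup.
req = build_websub_ping("https://pubsubhubbub.appspot.com/", "https://example.com/feed.xml")
# To actually send it: urllib.request.urlopen(req, timeout=10)
```

The request is built but not sent here, so you can wire the actual call into whatever job runs at publish time.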

Step 5: Leverage indexing APIs for faster discovery

Integrate both IndexNow (unlimited) and the Google Indexing API (limited to 200 API calls per day unless you can secure a quota increase).

Officially, the Google Indexing API is only for pages with JobPosting markup or BroadcastEvent markup embedded in a VideoObject.

(Note the keyword: “officially.” I’ll leave it to you to decide whether to test it.)
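To make the integration concrete, here is a hedged sketch of the two request shapes. The IndexNow call is a JSON POST listing your host, key, and URLs; the Google Indexing API takes one `URL_UPDATED` notification per URL and additionally needs an OAuth token from a service account, which is left out here. The key value, the key file location, and the `already_sent_today` counter are assumptions for illustration.

```python
import json
from urllib.request import Request

INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"
GOOGLE_INDEXING_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"
GOOGLE_DAILY_QUOTA = 200  # default daily limit mentioned above


def build_indexnow_request(host, key, urls):
    """Build a bulk IndexNow submission (assumes the key file sits at the site root)."""
    payload = {
        "host": host,
        "key": key,
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": list(urls),
    }
    return Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json; charset=utf-8"},
    )


def google_indexing_payloads(urls, already_sent_today=0):
    """Return one request body per URL, capped at what is left of the daily quota.

    Each body would be POSTed to GOOGLE_INDEXING_ENDPOINT with an OAuth bearer token.
    """
    remaining = max(GOOGLE_DAILY_QUOTA - already_sent_today, 0)
    return [{"url": url, "type": "URL_UPDATED"} for url in urls[:remaining]]
```

Because the Google quota is small, it makes sense to feed `google_indexing_payloads` your highest-priority URLs first and let IndexNow carry everything else.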


Step 6: Strengthen internal linking to boost indexing signals

The primary way most indexers discover content is through links.

URLs with stronger link signals are prioritized higher in the crawl queue and carry more indexing power.

While external links are valuable, internal linking is the real game-changer for indexing large sites with thousands of deep content pages.

Your related content blocks, pagination, breadcrumbs, and especially the links displayed on your homepage are prime optimization points for Googlebot and Bingbot.

When it comes to the homepage, you can’t link every deep content page – but you don’t need to.

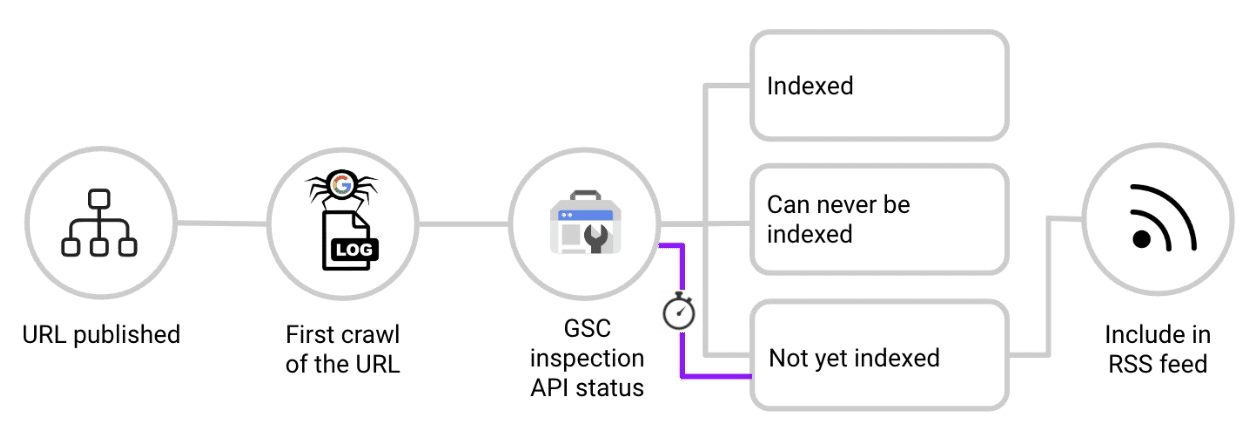

Focus on those that are not yet indexed. Here’s how:

- When a new URL is published, check it against the log files.

- As soon as you see Googlebot crawl the URL for the first time, query the URL Inspection API in Google Search Console.

- If the response is “URL is unknown to Google,” “Crawled – currently not indexed,” or “Discovered – currently not indexed,” add the URL to a dedicated feed that populates a section on your homepage.

- Re-check the URL periodically. Once indexed, remove it from the homepage feed to maintain relevance and focus on other non-indexed content.

This effectively creates a real-time RSS feed of non-indexed content linked from the homepage, leveraging its authority to accelerate indexing.
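The add-and-remove logic from the steps above can be sketched as a small function. It assumes the inspection response has already been fetched and parsed into a dictionary, and that the status text lives at `inspectionResult.indexStatusResult.coverageState`. Treat the exact status strings as assumptions to verify against real responses; the feed is modeled as a plain set for brevity.

```python
# Assumed coverage states that mean "not indexed yet".
NOT_INDEXED_STATES = {
    "URL is unknown to Google",
    "Crawled - currently not indexed",
    "Discovered - currently not indexed",
}


def coverage_state(inspection_response):
    """Pull the coverage state out of a parsed URL Inspection response."""
    state = (
        inspection_response.get("inspectionResult", {})
        .get("indexStatusResult", {})
        .get("coverageState", "")
    )
    # Normalize typographic dashes so both spellings compare equal.
    return state.replace("\u2013", "-")


def update_homepage_feed(feed, url, inspection_response):
    """Keep a URL in the homepage feed only while it is not indexed."""
    if coverage_state(inspection_response) in NOT_INDEXED_STATES:
        feed.add(url)
    else:
        feed.discard(url)
    return feed
```

Running this on each periodic re-check is what keeps the homepage section limited to content that still needs the help.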

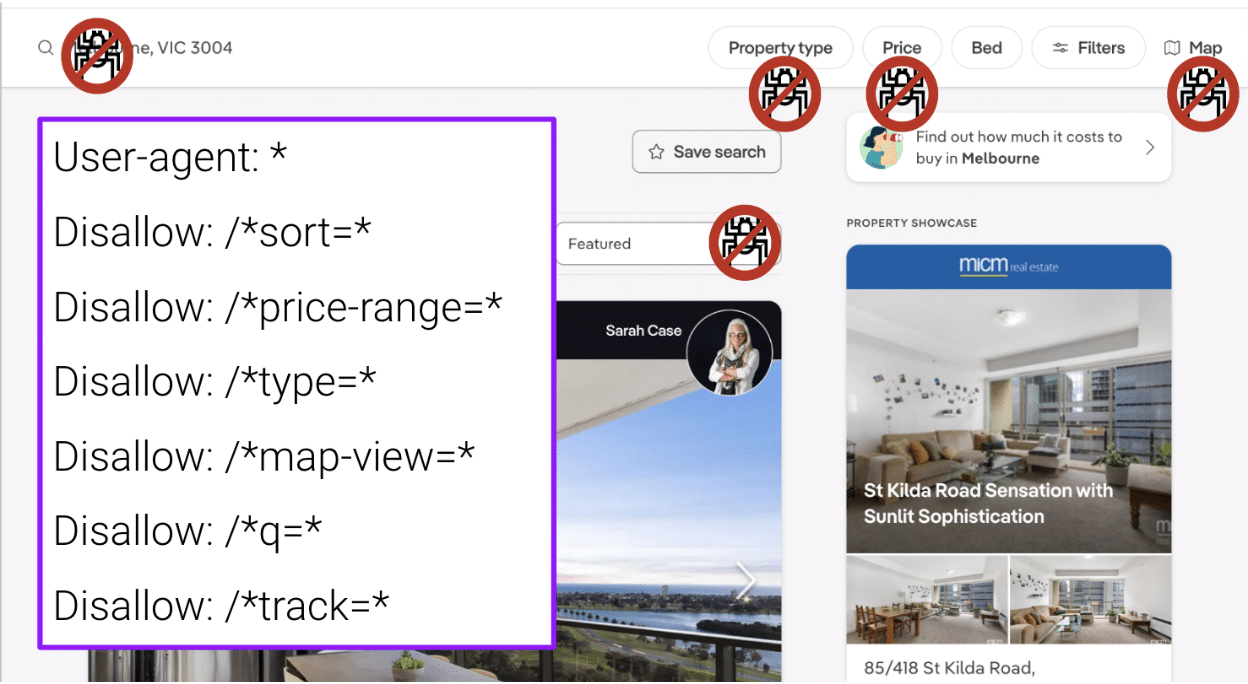

Step 7: Block non-SEO relevant URLs from crawlers

Audit your log files regularly and block high-crawl, no-value URL paths using a robots.txt disallow.
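A log audit can start very small. The sketch below assumes access logs in the common "combined" format and counts Googlebot requests per top-level path, which is usually enough to spot a section eating crawl activity. It matches on the user-agent string only; a production audit should also verify the crawler, for example via reverse DNS, since user agents can be spoofed.

```python
import re
from collections import Counter

# Matches the request, status, and trailing user-agent field of a combined-format line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[\d.]+" \d{3} .*"(?P<agent>[^"]*)"$'
)


def crawl_hits_by_section(log_lines, bot_token="Googlebot"):
    """Count crawler requests per top-level path section (e.g. /search)."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line.strip())
        if not match or bot_token not in match.group("agent"):
            continue
        path = match.group("path").split("?", 1)[0]  # drop the query string
        section = "/" + path.strip("/").split("/", 1)[0]
        hits[section] += 1
    return hits
```

Calling `crawl_hits_by_section(open("access.log"))` (the file name is a placeholder) and sorting with `.most_common()` gives a ranked list of candidates to review.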

URLs such as faceted navigation pages, internal search results, and tracking-parameter variants can:

- Distract crawlers.

- Create duplicate content.

- Split ranking signals.

- Ultimately downgrade the indexer’s view of your site quality.

However, a robots.txt disallow alone is not enough.

If these pages have internal links, traffic, or other ranking signals, indexers may still index them.

To prevent this:

- In addition to disallowing the route in robots.txt, apply rel="nofollow" to all possible links pointing to these pages.

- Ensure this is done not only on-site but also in transactional emails and other communication channels to prevent indexers from ever discovering the URL.
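Before deploying new disallow rules, it is worth checking that they block what you intend and nothing more. A minimal sketch using Python's standard `urllib.robotparser`: the rules and URLs below are invented examples, and this parser does plain prefix matching, so it will not evaluate the `*` and `$` wildcard patterns that Google and Bing support.

```python
from urllib.robotparser import RobotFileParser

# Invented example rules: block internal search and faceted filter paths.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /filter/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt content disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

Run it against a sample of URLs that must stay crawlable (products, articles) as well as the ones you want blocked, so an overly broad rule is caught before it goes live.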

Dig deeper: Crawl budget: What you need to know in 2025

Step 8: Use 304 responses to help crawlers prioritize new content

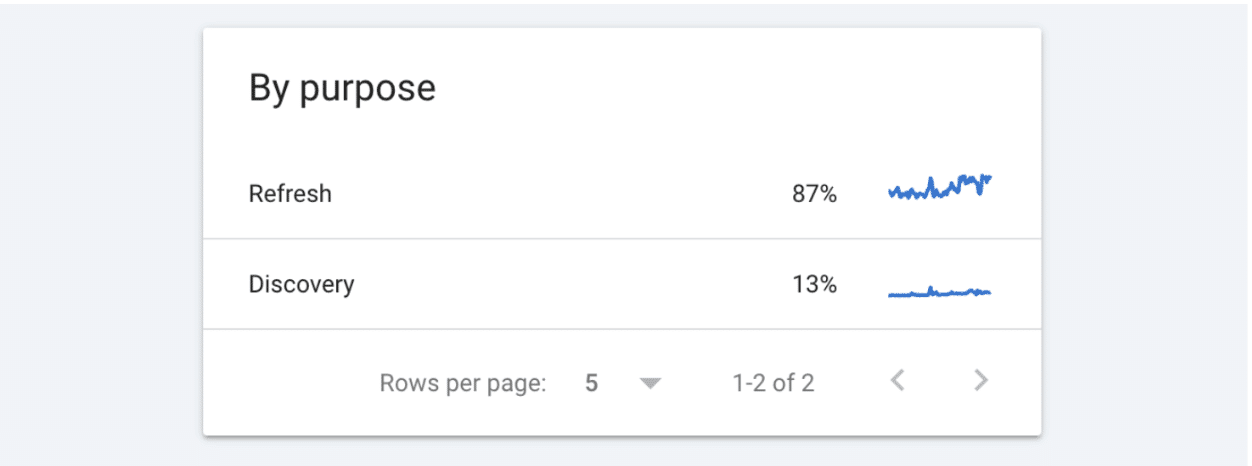

For most sites, the bulk of crawling is invested in refreshing already indexed content.

When a site returns a 200 response code, indexers redownload the content and compare it against their existing cache.

While this is valuable when content has changed, it’s not necessary for most pages.

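For content that hasn’t been updated since the crawler’s last visit, answer its conditional request (one sent with an If-None-Match or If-Modified-Since header) with a 304 HTTP response code (“Not Modified”) and no response body.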

This tells crawlers the page hasn’t changed, allowing indexers to allocate resources to content discovery instead.
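The decision itself is framework-independent. As a sketch under assumed names (`conditional_status` and `validator_headers` are illustrative helpers, not part of any library), the server compares the crawler's validators with the page's current ETag and last-modified time, checking `If-None-Match` first because it takes precedence over `If-Modified-Since`.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def _strip_weak(tag):
    """Drop the weak-validator prefix so W/"v1" and "v1" compare equal."""
    tag = tag.strip()
    return tag[2:] if tag.startswith("W/") else tag


def validator_headers(etag, last_modified):
    """Headers to send with every 200 so crawlers can revalidate later."""
    return {"ETag": etag, "Last-Modified": format_datetime(last_modified, usegmt=True)}


def conditional_status(request_headers, etag, last_modified):
    """Return 304 if the crawler's cached copy is still current, else 200.

    last_modified must be a timezone-aware UTC datetime.
    """
    if_none_match = request_headers.get("If-None-Match")
    if if_none_match is not None:
        candidates = {_strip_weak(tag) for tag in if_none_match.split(",")}
        return 304 if _strip_weak(etag) in candidates or "*" in candidates else 200

    if_modified_since = request_headers.get("If-Modified-Since")
    if if_modified_since:
        try:
            since = parsedate_to_datetime(if_modified_since)
            # HTTP dates have one-second resolution, so ignore microseconds.
            if last_modified.replace(microsecond=0) <= since:
                return 304
        except (TypeError, ValueError):
            return 200  # unparseable date: fall back to a full response
    return 200
```

The important design point is that `last_modified` must reflect real content changes; if it updates on every deploy or template tweak, crawlers will rarely see a 304.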

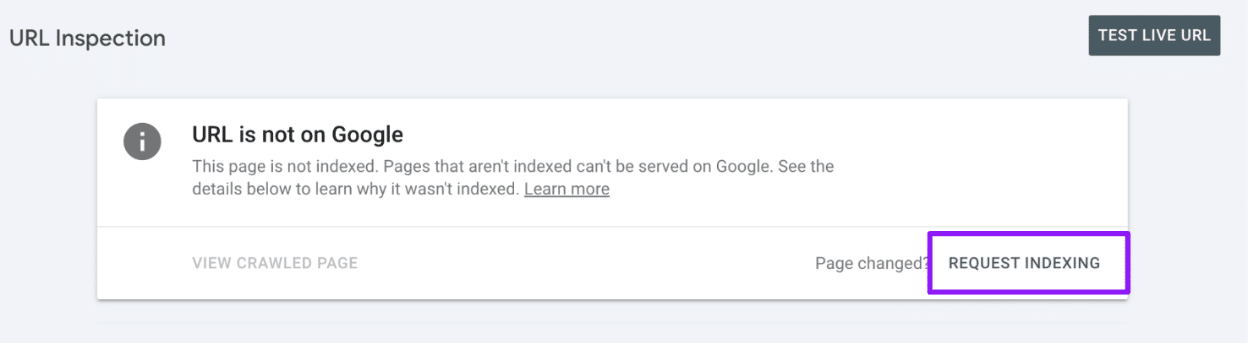

Step 9: Manually request indexing for hard-to-index pages

For stubborn URLs that remain non-indexed, manually submit them in Google Search Console.

However, keep in mind that there is a limit of 10 submissions per day, so use them wisely.

From my testing, manual submissions in Bing Webmaster Tools offer no significant advantage over submissions via the IndexNow API.

Therefore, it’s more efficient to use the API.

Maximize your site’s visibility in Google and Bing

If your content isn’t indexed, it’s invisible. Don’t let valuable pages sit in limbo.

Prioritize the steps relevant to your content type, take a proactive approach to indexing, and unlock the full potential of your content.

Dig deeper: Why 100% indexing isn’t possible, and why that’s OK
