How to get cited by ChatGPT: The content traits LLMs quote most

LLM-driven discovery is reshaping how readers find and evaluate information, yet most teams still don’t know what actually gets cited by ChatGPT.

Early theories point to structure, freshness, or authority signals as the primary drivers, but what actually earns citations has remained unclear.

To bring more clarity, I audited 15 domains in September across ecommerce, cybersecurity and tech, healthcare, data analytics, education, and local business.

Together, these sites generated nearly 2 million organic monthly sessions and 7,500 direct referral sessions from ChatGPT.

The analysis focused on blog posts – one of the most controllable levers for non-branded visibility.

By isolating generative referral traffic, the audit surfaced the on-page tactics most consistently linked to higher LLM citation rates.

Here’s what I found:

- Answer capsules were the single strongest commonality among posts receiving ChatGPT citations.

- Minimal linking inside the capsule text, ideally no internal or external links at all, correlated with more ChatGPT referrals.

- Original or “owned” data ranked as the second-strongest differentiator for cited pages.

How the audit identified citation-driving traits

Across this dataset, the audit tracked patterns in structural and editorial traits, including:

- Headline format.

- Presence of an answer capsule.

- Link density.

- Use of original or branded data.

The traits associated with ChatGPT referral traffic proved remarkably consistent across industries.

The analysis focused exclusively on blog content indexed in Google and measurable in GA4.

For each of the 15 domains, all blog landing pages receiving ChatGPT referral sessions were extracted using UTM parameters, custom channel groupings, and referrer path segmentation.
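The referral-extraction step can also be approximated on exported data outside GA4. The Python sketch below is a hypothetical helper, not the audit’s actual setup. It assumes you have exported (landing URL, referrer) pairs, that ChatGPT traffic shows up either as a `utm_source=chatgpt.com` parameter or as a `chatgpt.com` / `chat.openai.com` referrer, and that blog posts live under `/blog/`. Check all three assumptions against your own data.

```python
from urllib.parse import parse_qs, urlparse

# Assumption: hostnames ChatGPT traffic arrives from. Verify against the
# referrers and utm_source values actually present in your analytics data.
CHATGPT_HOSTS = {"chatgpt.com", "chat.openai.com"}


def _normalize_host(host):
    """Lowercase a hostname (or utm_source value) and drop a leading 'www.'."""
    host = (host or "").lower()
    return host[4:] if host.startswith("www.") else host


def is_chatgpt_referral(landing_url, referrer=""):
    """Return True if a session looks like a ChatGPT referral.

    Checks the utm_source parameter on the landing URL first, then falls
    back to the referrer's hostname.
    """
    params = parse_qs(urlparse(landing_url).query)
    for source in params.get("utm_source", []):
        if _normalize_host(source) in CHATGPT_HOSTS:
            return True
    return _normalize_host(urlparse(referrer).hostname) in CHATGPT_HOSTS


def is_blog_post(landing_url, blog_prefix="/blog/"):
    """Hypothetical filter: treat any path under /blog/ as a blog post."""
    return urlparse(landing_url).path.startswith(blog_prefix)


# Example: made-up (landing URL, referrer) pairs exported from analytics.
sessions = [
    ("https://example.com/blog/ltv-cohorts?utm_source=chatgpt.com", ""),
    ("https://example.com/blog/capsules", "https://chatgpt.com/"),
    ("https://example.com/pricing", "https://chatgpt.com/"),
    ("https://example.com/blog/capsules", "https://www.google.com/"),
]
cited_pages = sorted(
    {urlparse(url).path for url, ref in sessions
     if is_chatgpt_referral(url, ref) and is_blog_post(url)}
)
print(cited_pages)  # ['/blog/capsules', '/blog/ltv-cohorts']
```

Inside GA4, the same hostname list would typically become a regex condition on session source in a custom channel group; the script is only a way to sanity-check that grouping on an export.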

Each page was manually reviewed for:

- Answer capsule presence and format consistency.

- Link density inside the capsule and in the section immediately following it.

- Original data or “owned” insight inclusion.

- Last updated date and other traits.

By measuring these features against confirmed ChatGPT referrals, the study aimed to identify structural traits that make certain pages more “quotable” to LLMs.

Dig deeper: Tracking AI search citations: Who’s winning across 11 industries

Defining ‘answer capsule’

Before diving into the findings, it’s important to clarify how this analysis defines an “answer capsule.”

The term is still emerging in the industry and lacks a standardized definition, so the audit used a specific working set of parameters.

An answer capsule refers to a concise, self-contained explanation of roughly 120 to 150 characters (about 20 to 25 words) placed directly after a title or an H2 that is framed as a question-based query.

This character range was established by averaging the snippet lengths most frequently extracted by LLMs and AI Overview modules across comparable studies.

The goal was to capture a format that provides enough context for readers while remaining short enough to be parsed and cited in full by LLMs.
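To make this working definition testable, here is a minimal Python check, assuming plain-text input. The 120 to 150 character window comes from the definition above; the question-heading test (a trailing “?” or a leading question word) is a simplifying assumption of this sketch, not part of the audit’s stated criteria.

```python
# Working window from the audit's capsule definition.
MIN_CHARS, MAX_CHARS = 120, 150

# Simplifying assumption for this sketch: words that usually open a question.
QUESTION_WORDS = {"how", "what", "why", "when", "where", "which", "who",
                  "can", "does", "do", "is", "are", "should"}


def is_question_heading(heading):
    """Treat a heading as question-framed if it ends with '?' or starts
    with a common question word."""
    text = heading.strip().lower()
    words = text.split()
    return text.endswith("?") or (bool(words) and words[0] in QUESTION_WORDS)


def capsule_check(heading, paragraph):
    """Return (is_capsule, char_count, word_count) for the paragraph that
    sits directly under a heading."""
    text = " ".join(paragraph.split())  # collapse runs of whitespace
    chars, words = len(text), len(text.split())
    ok = is_question_heading(heading) and MIN_CHARS <= chars <= MAX_CHARS
    return ok, chars, words


print(capsule_check("What is an answer capsule?", "word " * 25))  # (True, 124, 25)
```

Returning the character and word counts alongside the verdict makes near misses (say, 155 characters) easy to spot and trim by hand.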

Determining ‘original data’ and ‘owned insights’

While answer capsules were the strongest citation predictor, pages incorporating original or branded data consistently showed higher referral depth.

Original data refers to information that originates on the page itself:

- Unique survey findings.

- Performance benchmarks.

- The results of studies.

- Press releases.

- Proprietary metrics unavailable elsewhere.

Think “Based on our 2025 industry survey of 1,200 retailers…” rather than general commentary or advice.

Owned insights, by contrast, may restate known information but are explicitly framed as the brand’s interpretation. These take forms such as:

- “Acme analytics tip 1: Segment your LTV cohorts by purchase channel.”

- “Acme recommendation: Prioritize capsule clarity over keyword density.”

This framing converts generic advice into a branded citation hook – a linguistic tag that may reinforce authorship and expertise.

And though these owned insights can seem a bit cheeky (taking ownership of common-sense advice), they do appear to hold some sway over which pages ChatGPT cites and directs traffic to.


How presence was evaluated

When auditing each page, any qualifying instance of an answer capsule, original data, or owned insight was marked as present.

If multiple examples appeared, the instance most consistent with the predefined parameters was used for classification.

Although there is always some flexibility in evaluating on-page strategies like these, the criteria align with long-standing SEO conventions, which made the assessment relatively clear-cut.

After evaluation, each post was coded across four binary variables to calculate relative frequencies and cross-interactions:

- Capsule or no capsule.

- Link or no link.

- Original data or none.

- Owned insight or none.
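The coding step lends itself to a small script. This Python sketch uses hypothetical field names and a made-up four-post sample (not the audit’s data). It records the four binary variables per post, computes a relative frequency for any trait, and builds the capsule-by-proprietary-insight cross-tab, where “proprietary” means original data or owned insight.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class PostCoding:
    """One cited post coded on the audit's four binary variables."""
    url: str
    capsule: bool
    link_in_capsule: bool
    original_data: bool
    owned_insight: bool

    @property
    def proprietary(self) -> bool:
        """True if the post has original data OR an owned insight."""
        return self.original_data or self.owned_insight


def share(posts: list[PostCoding], trait) -> float:
    """Percentage of posts for which trait(post) is True."""
    if not posts:
        return 0.0
    return 100.0 * sum(1 for p in posts if trait(p)) / len(posts)


def capsule_by_proprietary(posts: list[PostCoding]) -> dict[tuple[bool, bool], float]:
    """Cross-tab of (capsule, proprietary) cells as percentages of all posts."""
    counts = Counter((p.capsule, p.proprietary) for p in posts)
    return {cell: 100.0 * n / len(posts) for cell, n in counts.items()}


# Made-up sample for illustration only; not the audit's data.
posts = [
    PostCoding("/blog/a", True, False, True, False),
    PostCoding("/blog/b", True, False, False, False),
    PostCoding("/blog/c", False, True, False, True),
    PostCoding("/blog/d", False, False, False, False),
]
print(share(posts, lambda p: p.capsule))  # 50.0
print(capsule_by_proprietary(posts))
```

Keeping each variable as a plain boolean means any other combination (for example, capsule and link-free) is just another lambda passed to `share`.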

Dig deeper: AI Overview citations: Why they don’t drive clicks and what to do

The big picture: Answer capsules are the best avenue to get cited

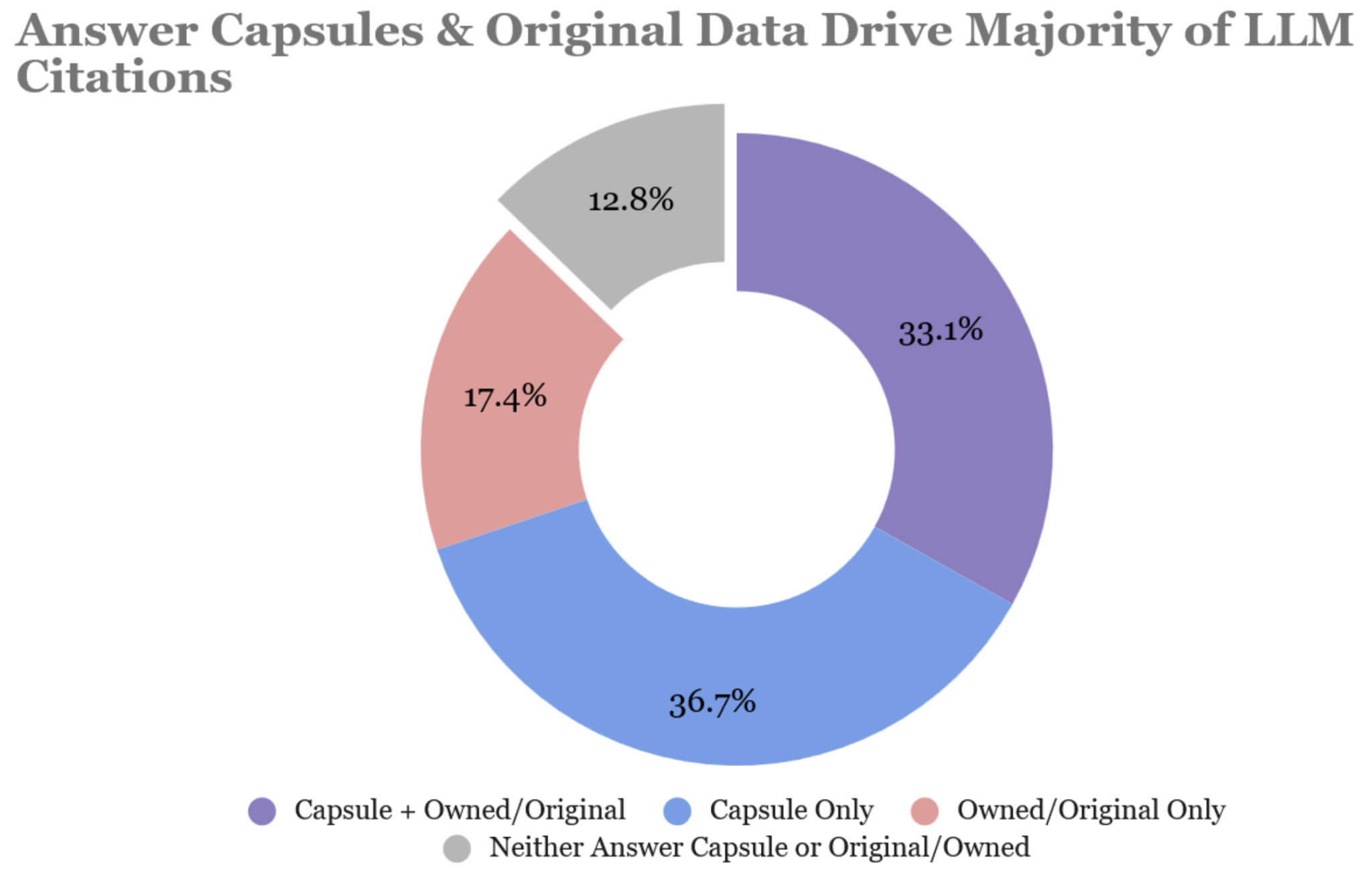

Across the full dataset, 72.4% of cited blog posts included an identifiable answer capsule.

Over half (52.2%) featured either original data or owned, branded insight.

When those traits overlapped, the pattern became even clearer:

- 34.3% of cited posts combined both an answer capsule and original or owned insight – the strongest-performing configuration.

- 38.0% included a capsule but no proprietary data, the largest single group of cited posts, which suggests capsules carry weight on their own.

- Just 13.2% of cited posts lacked both a capsule and any proprietary insight.

The conclusion is hard to miss: of our evaluated traits, answer capsules were the single most consistent predictor of ChatGPT citation.

Original and/or proprietary data amplifies the effect, but even without it, the presence of a clear, self-contained answer block dramatically increases a page’s odds of being referenced.

Link density inside answer capsules: A notable drag

SEO copywriting guidelines have long emphasized adding internal or external links to boost credibility and authority.

But these data suggest content marketing teams should be cautious about where they place internal and external links.

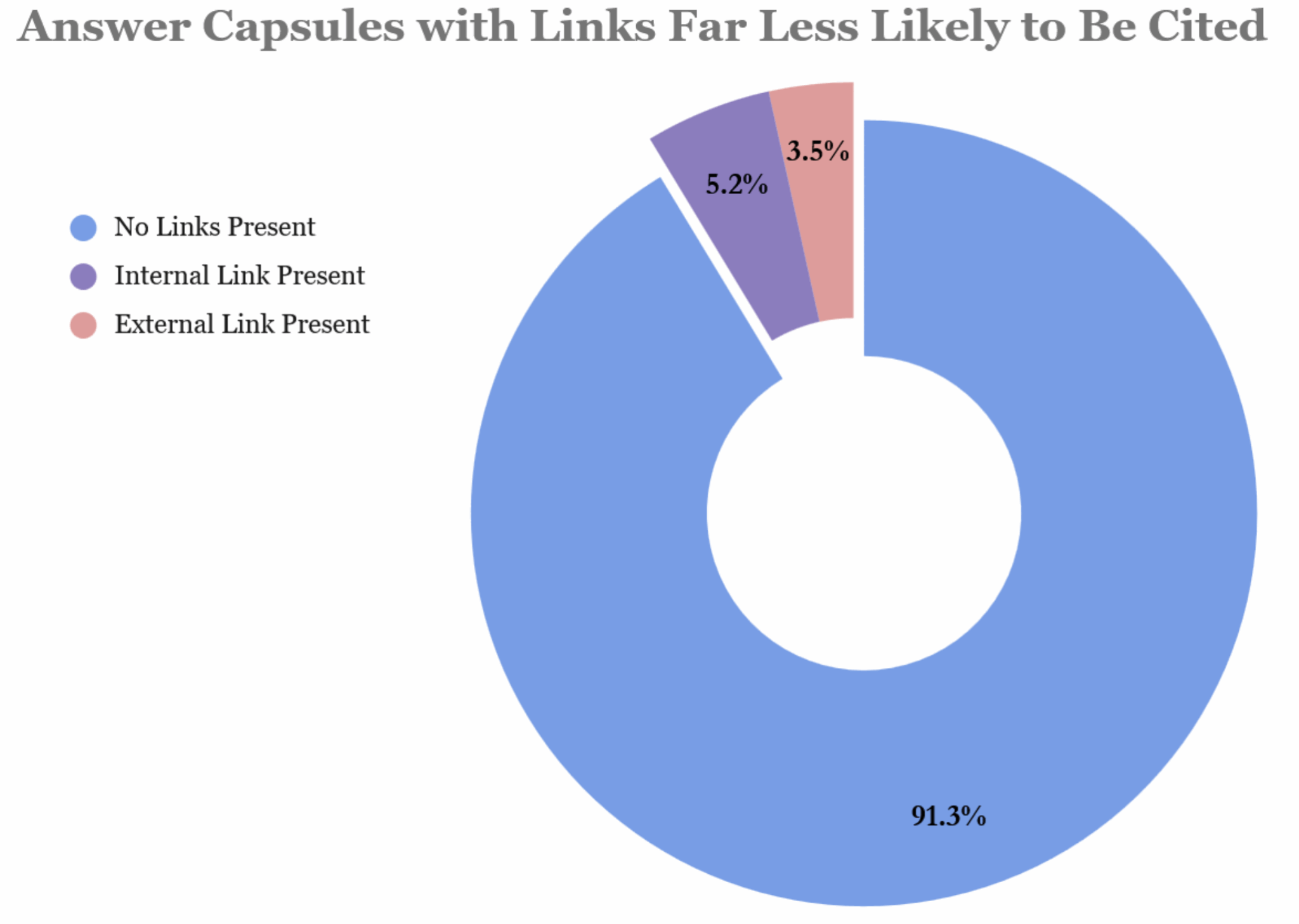

Among the blog posts in our dataset that contained answer capsules, the overwhelming majority of these capsules were completely link-free:

| Link type | Share of capsules |
| --- | --- |
| No links | ~91% |
| Internal only | ~5.2% |
| External only | ~3.5% |
| Both internal + external | <1% |

More than nine in ten capsules contained no links at all – a strikingly consistent pattern across industries and content types.

From an LLM’s perspective, a concise, self-contained block of text without hyperlinks appears to be easier to extract and attribute.

It reads as a standalone unit of knowledge rather than a navigational hub.

In other words, links may dilute quotability: they imply the most authoritative answer lies on a different webpage.

So, for human readers, these links are helpful.

For ChatGPT, they’re hesitation marks.
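The link buckets in the table above can be reproduced with a short classifier. This Python sketch uses only the standard library, and the function name is hypothetical. It treats a link as internal only when its hostname exactly matches the page’s, so `www.` variants and subdomains count as external unless you normalize them first.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class _LinkCollector(HTMLParser):
    """Collects href values from <a> tags in an HTML fragment."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def classify_capsule_links(capsule_html: str, page_url: str) -> str:
    """Bucket a capsule into one of the table's four link types."""
    parser = _LinkCollector()
    parser.feed(capsule_html)
    parser.close()
    site = urlparse(page_url).hostname
    internal = external = False
    for href in parser.hrefs:
        # Resolve relative links (e.g. "/guide") against the page URL.
        host = urlparse(urljoin(page_url, href)).hostname
        if host == site:
            internal = True
        elif host:  # mailto:, tel:, etc. have no hostname and are skipped
            external = True
    if internal and external:
        return "Both internal + external"
    if internal:
        return "Internal only"
    if external:
        return "External only"
    return "No links"


page = "https://example.com/blog/answer-capsules"
print(classify_capsule_links("<p>A capsule is a short answer.</p>", page))  # No links
print(classify_capsule_links('<p>See <a href="/guide">our guide</a>.</p>', page))  # Internal only
```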

Dig deeper: Organizing content for AI search: A 3-level framework

What this means for SEO/GEO teams

Generative search has changed what it means to “rank.”

But the fundamentals haven’t disappeared.

Structured headings, clear formatting, and updated content still matter, but answer-centric design and authority signals now appear to outweigh traditional keyword density or link tactics.

Immediate fixes for your top content

These quick adjustments can help your highest-traffic posts become more quotable to ChatGPT and other LLMs.

- Audit your top posts for capsules: Review your top 100 blog posts. If a clear answer capsule isn’t present, add one, ideally tied to keyword research targeting low-difficulty, question-based queries.

- Inject proprietary insight where possible: Within existing capsules, weave in a unique stat, branded tip, or first-party data point. Even a small “owned” insight may meaningfully boost ChatGPT citation potential.

- Keep capsules link-free: Our data suggests placing internal and external links below the capsule or in supporting paragraphs. It may be worth moving links out of answer capsules on older pages, too.
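For the capsule audit, a rough first pass can be automated before manual review. This Python sketch assumes each post is available as HTML and treats the first `<p>` after each H1 or H2 as the candidate capsule. It then checks three things: whether the heading ends in a question mark (a simplification), whether the paragraph falls in the 120 to 150 character window, and whether it is link-free. The helper names are hypothetical.

```python
from __future__ import annotations

from html.parser import HTMLParser

MIN_CHARS, MAX_CHARS = 120, 150  # the audit's capsule length window


class _BlockParser(HTMLParser):
    """Flattens a post into ordered (tag, text, has_link) tuples for h1,
    h2, and p elements, noting whether each one contains an <a>."""

    TRACKED = {"h1", "h2", "p"}

    def __init__(self) -> None:
        super().__init__()
        self.blocks: list[tuple[str, str, bool]] = []
        self._tag: str | None = None
        self._text: list[str] = []
        self._has_link = False

    def handle_starttag(self, tag, attrs):
        if tag in self.TRACKED:
            self._tag, self._text, self._has_link = tag, [], False
        elif tag == "a" and self._tag is not None:
            self._has_link = True

    def handle_data(self, data):
        if self._tag is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == self._tag:
            text = " ".join("".join(self._text).split())
            self.blocks.append((tag, text, self._has_link))
            self._tag = None


def audit_post(html: str) -> list[dict]:
    """Check the first paragraph under each H1/H2 as a candidate capsule."""
    parser = _BlockParser()
    parser.feed(html)
    parser.close()
    blocks = parser.blocks
    report = []
    for i, (tag, heading, _) in enumerate(blocks):
        if tag not in ("h1", "h2"):
            continue
        nxt = blocks[i + 1] if i + 1 < len(blocks) else None
        para, has_link = (nxt[1], nxt[2]) if nxt and nxt[0] == "p" else ("", False)
        report.append({
            "heading": heading,
            "question": heading.endswith("?"),
            "length_ok": MIN_CHARS <= len(para) <= MAX_CHARS,
            "link_free": bool(para) and not has_link,
        })
    return report


html = (
    "<h2>What is an answer capsule?</h2><p>" + "word " * 25 + "</p>"
    + "<h2>Why skip links?</h2><p>See <a href='/guide'>our guide</a>.</p>"
)
for row in audit_post(html):
    print(row)
```

Rows where `question` is true but `length_ok` or `link_free` is false are the natural shortlist for the manual fixes above.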

Ongoing strategies for new content production

Beyond quick fixes, teams should adjust their content workflows to align with how LLMs evaluate and cite information.

- Prepare (and train) your writers: Content teams should build blog briefs around an answer capsule “floor.” Any post that lacks one misses the most basic structural hook for ChatGPT citation. Train writers (and LLMs, if that’s how you’re producing first drafts) to craft short, high-confidence answer capsules that serve both readers and models.

- Infuse posts with brand insights or original data: A capsule is a great start, but where possible, add something only you can offer, such as a unique figure, first-party survey result, benchmark, branded tip, or proprietary analysis. Anything that gives models a concrete reason to attribute the quote to you can meaningfully increase citation likelihood and helps transform the post from a generic answer into an authority asset.

- Reconsider your linking strategies: Linking still matters. The key is placement, not absence. Keep capsules clean and self-contained, then use links in supporting paragraphs to build context and guide readers deeper. And, of course, remember every business and audience has different linking standards and UX needs, so treat this as a framework, not a rule. Prioritize clarity in the capsule and depth everywhere else.

Clarity still wins

Even with the recent changes, it appears the traits that once helped pages rank are now the same ones that help them get cited: clarity, structure, and authority.

For now, the answer capsule stands out as the format ChatGPT most consistently cites, especially when it’s paired with original or owned data to amplify trust and attribution.

This suggests LLMs haven’t overturned SEO so much as refined it: they reward content that delivers a clear, quotable answer and backs it with genuine expertise.

The SEO standard of answer-first, insight-driven writing now wins twice: once with search engines, and again with generative models.

Dig deeper: Blogging, AI, and the SEO road ahead: Why clarity now decides who survives
