How to avoid marketing mix modeling mistakes that derail results

Marketing mix modeling (MMM) is having a moment in marketing measurement.

As privacy regulations limit user-level tracking, marketers are turning to it for reliable, cross-channel measurement. (We love it at my agency – MMM analyses often lead to smarter budget allocation with significant downstream impact.)

But as adoption grows, so do execution errors and misconceptions about what MMM can and can’t do.

Despite its strategic potential, it’s often misused, misinterpreted, or oversold – leading to costly mistakes and to lost credibility when unrealistic expectations go unmet.

MMM isn’t a black box you can feed data into and trust blindly. To produce meaningful insights, it demands context, strategy, iteration, and strong data.

Context is especially critical. Without it, MMM becomes what I call a mathematical echo chamber – no external inputs and little connection to reality.

This article breaks down how to approach MMM correctly, avoid common pitfalls, and turn your analysis into real business value.

Execution errors

Too often, teams fixate on the modeling technique and overlook the broader system – data quality, assumptions, and stakeholder context.

There are plenty of possible mistakes, but the ones I see most often are:

- Using inconsistent, incomplete, or unvalidated spend and performance data.

- Assuming immediate or linear responses to media spend, which oversimplifies reality.

- Interpreting statistical relationships as proof of impact without experimentation.

- Using MMM for daily campaign decisions, even though it’s built for strategic planning and works with lagged, aggregated (typically weekly) data.

- Building models that are over-optimized in-sample but fail in the real world.

If you make any of these mistakes, your MMM efforts will be muddled and ineffective, and you’ll struggle to get buy-in for the initiative going forward.

Faulty expectations vs. reality

When run properly, MMM can offer highly valuable insights, but only within its appropriate use case.

With good modeling and inputs, you can:

- Reallocate budgets based on marginal ROI and saturation.

- Forecast sales impact from various budget scenarios.

- Set spending caps to avoid diminishing returns.

- Show long-term contributions of brand versus performance channels.

- Track media effectiveness over time and support cross-functional alignment.
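To make “marginal ROI and saturation” concrete: once a channel starts to saturate, the return on the *next* dollar falls well below the channel’s average ROI. Here’s a minimal Python sketch, assuming a channel’s response follows a Hill curve whose parameters (`max_response`, `half_sat`, `shape`, all hypothetical here) you’d take from your fitted model. It estimates marginal ROI and finds the highest spend where the next dollar still clears a threshold (break-even by default).

```python
def hill_response(spend: float, max_response: float, half_sat: float, shape: float) -> float:
    """Expected KPI (e.g., revenue) at a spend level under a Hill saturation curve."""
    if spend <= 0:
        return 0.0
    return max_response * spend**shape / (half_sat**shape + spend**shape)


def marginal_roi(spend: float, max_response: float, half_sat: float, shape: float,
                 step: float = 1.0) -> float:
    """Approximate revenue returned by the next `step` dollars, per dollar (finite difference)."""
    gain = (hill_response(spend + step, max_response, half_sat, shape)
            - hill_response(spend, max_response, half_sat, shape))
    return gain / step


def spend_cap(max_response: float, half_sat: float, shape: float, min_mroi: float = 1.0,
              grid_step: float = 100.0, grid_max: float = 1_000_000.0) -> float:
    """Highest spend on a coarse grid where the next dollar still returns >= min_mroi."""
    cap = 0.0
    spend = grid_step
    while spend < grid_max:
        if marginal_roi(spend, max_response, half_sat, shape) >= min_mroi:
            cap = spend
        spend += grid_step
    return cap


# Hypothetical curve: revenue saturates toward $500k; it reaches half that at $100k spend.
params = dict(max_response=500_000, half_sat=100_000, shape=1.0)
cap = spend_cap(**params)
avg_roi_at_cap = hill_response(cap, **params) / cap
print(f"cap ≈ ${cap:,.0f}, average ROI there ≈ {avg_roi_at_cap:.2f}")
```

With these made-up parameters, the cap lands around $123,600, where average ROI is still about 2.24 even though the next dollar only breaks even. That gap is why reallocation decisions should follow marginal, not average, ROI.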

What you cannot expect MMM to do:

- Optimize daily media buying decisions.

- Attribute at the user or creative level.

- Replace lift tests or experimentation (which are a necessary complement to MMM).

In other words, treat MMM as a strategic GPS that needs other inputs to work well, not a tactical turn-by-turn navigation tool.

Misreadings of output

You can give three marketers the same MMM output, and they might have three very different interpretations of what it means and what to do next.

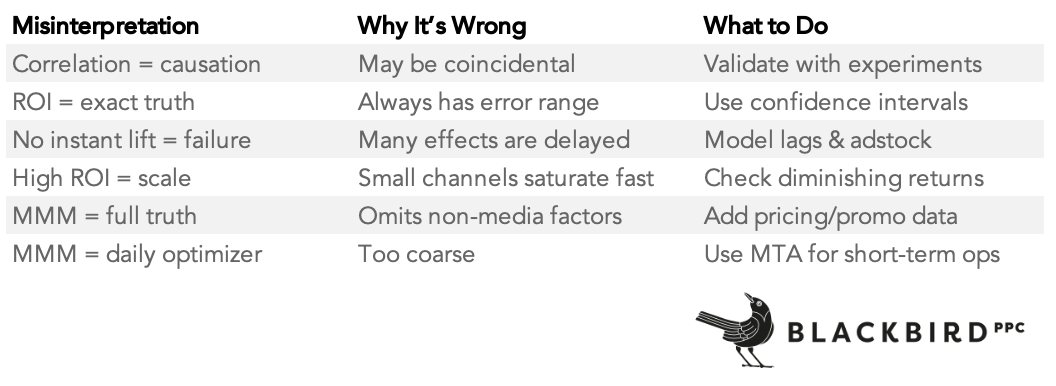

We’ve got a handy chart of the ways people misread the data (and how to fix those mistakes):

The misinterpretation I’d like to spend a bit of time on here is the correlation/causation dynamic.

Marketers need to understand that MMM is essentially a fancy correlation analysis. To establish causation, it needs to be supplemented by incrementality testing, such as geo lift testing.
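A geo lift test gets at causation by changing spend in some regions (test geos) but not others (control geos), then comparing outcomes. Below is a minimal difference-in-differences sketch in Python, assuming hypothetical weekly averages and control geos that would have trended like the test geos without the change (the “parallel trends” assumption). Real geo tests also need careful geo matching and significance testing, which this leaves out.

```python
from dataclasses import dataclass


@dataclass
class GeoGroup:
    """Average weekly KPI for a group of geos before and during the test."""
    pre: float
    during: float


def did_lift(test: GeoGroup, control: GeoGroup) -> float:
    """Difference-in-differences: change in test geos minus change in control geos."""
    return (test.during - test.pre) - (control.during - control.pre)


def relative_lift(test: GeoGroup, control: GeoGroup) -> float:
    """Lift as a share of the counterfactual (what test geos would have done anyway)."""
    counterfactual = test.pre + (control.during - control.pre)
    return did_lift(test, control) / counterfactual


# Hypothetical numbers: test geos got extra spend, control geos did not.
test = GeoGroup(pre=1_000.0, during=1_150.0)
control = GeoGroup(pre=1_000.0, during=1_050.0)
print(did_lift(test, control))                # 100.0 incremental conversions per week
print(f"{relative_lift(test, control):.1%}")  # 9.5%
```

If the MMM credits the channel with far more (or far less) incremental volume than a test like this shows, treat that as a reason to revisit the model’s assumptions before acting on its output.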

Dig deeper: Why incrementality is the only metric that proves marketing’s real impact


What you need for effective MMM analysis

MMM does involve coding, but it’s a lot more than that.

It’s a cross-functional discipline involving data science, marketing, finance, and strategy.

To get it right, you need:

1. Clean, longitudinal data

One note before I dive into the data elements you need to run MMM: data density is critical.

For businesses without a large volume of revenue-generating events (think big-ticket SaaS platforms or car dealerships advertising online), use strategic proxy metrics that occur earlier in the purchase journey and strongly predict revenue.

With that in mind, here’s the data needed (or recommended) for your model:

- Weekly data across 2–3 years.

- Media spend by channel and campaign. (Region is recommended.)

- Control variables (all recommended): Promos, pricing, and competitors.

- Note: Meta’s Robyn, one of my favorite MMM options, handles seasonality within the model.
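Because inconsistent or incomplete inputs are the most common execution error, it’s worth checking the dataset before any modeling starts. Here’s a minimal, standard-library-only Python sketch, assuming one row per week stored as a dict with a hypothetical `week` key (the week’s start date) plus numeric columns for each channel’s spend and the KPI. The column names and the 104-week minimum (about two years) are illustrative.

```python
from datetime import date, timedelta


def validate_weekly_data(rows: list[dict], min_weeks: int = 104) -> list[str]:
    """Return human-readable problems found in weekly MMM input rows (empty list = none)."""
    problems = []
    if len(rows) < min_weeks:
        problems.append(f"only {len(rows)} weeks of data; aim for {min_weeks}+ (about 2 years)")

    weeks = [row["week"] for row in rows]
    if len(set(weeks)) != len(weeks):
        problems.append("duplicate weeks found")

    # Consecutive weeks should be exactly 7 days apart; anything else is a gap or misalignment.
    ordered = sorted(set(weeks))
    for prev, cur in zip(ordered, ordered[1:]):
        if cur - prev != timedelta(weeks=1):
            problems.append(f"gap or misaligned week between {prev} and {cur}")

    # Spend and KPI values should be present and non-negative.
    for row in rows:
        for column, value in row.items():
            if column == "week":
                continue
            if value is None:
                problems.append(f"{row['week']}: missing {column}")
            elif value < 0:
                problems.append(f"{row['week']}: negative {column}")
    return problems


# Hypothetical example: 110 weeks of data starting on a Monday.
start = date(2022, 1, 3)
rows = [{"week": start + timedelta(weeks=i), "search_spend": 1_000.0,
         "social_spend": 500.0, "revenue": 9_000.0} for i in range(110)]
print(validate_weekly_data(rows))           # []
rows_with_gap = rows[:50] + rows[51:]
print(validate_weekly_data(rows_with_gap))  # one "gap or misaligned week" message
```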

2. Advanced modeling techniques

- Adstock/lag functions to reflect delayed impact.

- Saturation models (e.g., Hill curves) for diminishing returns.

- Regularization or Bayesian priors to stabilize estimates.
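Here’s how the first two techniques reshape a spend series before it enters the regression. This is a minimal Python sketch of one common pairing, geometric adstock followed by a Hill curve. The parameter names (`decay`, `half_sat`, `shape`) are illustrative, and MMM tools differ in how they parameterize these transforms; in practice the parameters are estimated from data, not set by hand.

```python
def geometric_adstock(spend: list[float], decay: float) -> list[float]:
    """Carry a share `decay` of last week's effect into this week (delayed impact)."""
    if not 0.0 <= decay < 1.0:
        raise ValueError("decay must be in [0, 1)")
    carried, out = 0.0, []
    for weekly_spend in spend:
        carried = weekly_spend + decay * carried
        out.append(carried)
    return out


def hill_saturation(x: float, half_sat: float, shape: float) -> float:
    """Scale adstocked spend to a 0-1 response; equals 0.5 when x == half_sat."""
    if x <= 0:
        return 0.0
    return x**shape / (half_sat**shape + x**shape)


# A one-week burst keeps working in later weeks, at a decaying rate.
adstocked = geometric_adstock([100.0, 0.0, 0.0, 0.0], decay=0.5)
print(adstocked)  # [100.0, 50.0, 25.0, 12.5]
print([round(hill_saturation(x, half_sat=50.0, shape=2.0), 3) for x in adstocked])
# [0.8, 0.5, 0.2, 0.059]
```

The regression then fits coefficients on these transformed features; a ridge penalty or Bayesian priors keep those coefficients from swinging wildly when channels move together.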

3. Validation and iteration

Running an MMM analysis once and taking the results at face value is never going to get you the best possible insights.

If you’re serious about adopting MMM, prepare to include the following in your process:

- Cross-validation, holdout tests, geo-lift experiments.

- Regular re-runs (quarterly or twice a year) to stay aligned with the market.

- Incorporation of other tools (e.g., MTA, A/B testing) for a full picture.
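A time-ordered holdout is the simplest guard against a model that’s over-optimized in-sample. This minimal, standard-library-only Python sketch fits a plain ridge regression on earlier weeks and scores it on the most recent ones with MAPE (mean absolute percentage error). Ridge here is a stand-in for whatever your MMM tool actually fits, the features would be your adstocked and saturated channels, and the data below is synthetic.

```python
def solve(A: list[list[float]], b: list[float]) -> list[float]:
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting (small systems only)."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * p for a, p in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def ridge_fit(X: list[list[float]], y: list[float], alpha: float) -> list[float]:
    """Ridge via (X^T X + alpha*I) beta = X^T y; column 0 is an unpenalized intercept."""
    k = len(X[0])
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    b = [sum(row[i] * target for row, target in zip(X, y)) for i in range(k)]
    for i in range(1, k):
        A[i][i] += alpha
    return solve(A, b)


def predict(X: list[list[float]], coefs: list[float]) -> list[float]:
    return [sum(c * x for c, x in zip(coefs, row)) for row in X]


def mape(actual: list[float], predicted: list[float]) -> float:
    return sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)


def holdout_mape(X: list[list[float]], y: list[float], alpha: float, holdout_weeks: int) -> float:
    """Fit on earlier weeks and score on the latest ones; never shuffle time-series rows."""
    split = len(y) - holdout_weeks
    coefs = ridge_fit(X[:split], y[:split], alpha)
    return mape(y[split:], predict(X[split:], coefs))


# Synthetic data: intercept column plus two made-up media features, 104 weeks.
X = [[1.0, 10.0 * (i % 7) + i, 5.0 * ((i * i) % 11)] for i in range(104)]
y = [1_000.0 + 3.0 * x1 + 2.0 * x2 for _, x1, x2 in X]
for alpha in (0.0, 100.0, 10_000.0):
    print(alpha, round(holdout_mape(X, y, alpha, holdout_weeks=12), 4))
```

On noise-free synthetic data, no penalty wins. On real, noisy, correlated media data, a moderate penalty often generalizes better, and the holdout error (not in-sample fit) is what tells you which setting to trust.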

Dig deeper: MTA vs. MMM: Which marketing attribution model is right for you?

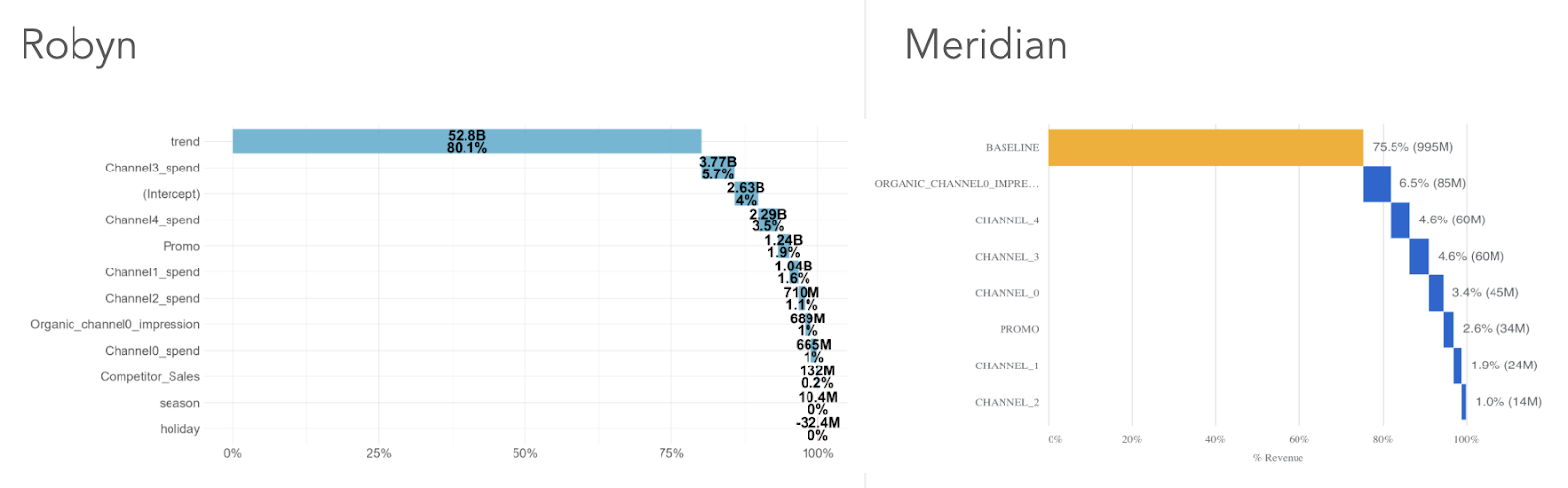

I highly recommend running analyses more than once and using different methods/platforms to identify commonalities and differences.

In the visual comparing Robyn’s and Google Meridian’s output from a recent client analysis, both models attributed similar influence across most channels – a good sign that helps validate the results.

But there’s a wrinkle: for channel 0, Meridian showed much higher organic influence and a slight bump in paid.

That suggests we need additional testing before moving to action items.
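One way to make that cross-model check routine is to line up each channel’s share of attributed contribution from both tools and flag gaps above a tolerance you agree on in advance. Here’s a minimal Python sketch; the channel names, shares, and 5-point tolerance are made up for illustration and are not from the client analysis above.

```python
def flag_disagreements(shares_a: dict[str, float], shares_b: dict[str, float],
                       tolerance: float = 0.05) -> dict[str, float]:
    """Channels whose contribution shares differ by more than `tolerance` (absolute),
    mapped to model B's share minus model A's share."""
    flagged = {}
    for channel in sorted(set(shares_a) | set(shares_b)):
        gap = shares_b.get(channel, 0.0) - shares_a.get(channel, 0.0)
        if abs(gap) > tolerance:
            flagged[channel] = round(gap, 3)
    return flagged


# Hypothetical contribution shares exported from two MMM runs (each sums to 1.0).
model_a = {"search": 0.30, "social": 0.25, "tv": 0.20, "organic": 0.25}
model_b = {"search": 0.26, "social": 0.23, "tv": 0.16, "organic": 0.35}
print(flag_disagreements(model_a, model_b))  # {'organic': 0.1}
```

Flagged channels become the shortlist for lift tests before anyone moves budget.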

4. Stakeholder engagement

Even with top-tier MMM analyses, how you communicate the findings – and what they enable – is critical to getting buy-in from clients or management.

Before you start, align with stakeholders on KPIs, ROI definitions, and model assumptions to prevent surprises or misunderstandings later.

When you share results, include uncertainty ranges and clear action items that flow directly from your data.
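If your MMM tool exports posterior draws (for Bayesian models) or bootstrap samples of each channel’s ROI, turning them into an uncertainty range and a plain-language call takes only a few lines. Here’s a minimal, standard-library-only Python sketch, assuming ROI means revenue per dollar of spend, so 1.0 is break-even (an ROI definition to agree on with stakeholders first). The draws and verdict wording are illustrative.

```python
import statistics


def roi_interval(draws: list[float]) -> tuple[float, float, float]:
    """Return the 5th percentile, median, and 95th percentile (a 90% interval)."""
    cuts = statistics.quantiles(draws, n=20)  # 19 cut points: 5%, 10%, ..., 95%
    return cuts[0], statistics.median(draws), cuts[-1]


def roi_verdict(low: float, high: float, breakeven: float = 1.0) -> str:
    """Turn an interval into an action-oriented call."""
    if low > breakeven:
        return "likely profitable: candidate for more budget, up to saturation"
    if high < breakeven:
        return "likely unprofitable: candidate for cuts"
    return "inconclusive: interval spans break-even, so test before moving budget"


# Hypothetical ROI draws for one channel, evenly spaced from 0.80 to 1.79.
draws = [0.8 + 0.01 * i for i in range(100)]
low, mid, high = roi_interval(draws)
print(f"ROI {mid:.2f} (90% interval {low:.2f}-{high:.2f}): {roi_verdict(low, high)}")
```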

If you can’t answer the inevitable “So what?” question, you’re not ready to present your findings.

Better MMM becomes a competitive edge

Overall, the shift away from user-based tracking is healthy for the marketing industry.

Initiatives like incrementality testing and MMM are finally getting their due as core parts of campaign analysis.

As major platforms level the optimization playing field with automation, running these analyses more effectively than your competitors is one way to drive differentiated growth.

Dig deeper: How to evolve your PPC measurement strategy for a privacy-first future
