Exploring the real risk AI brings to the internet (and SEO with it)

Starting an article series about AI at a time when so many articles are at least partially generated by AI, and potentially consumed primarily by AI, might give one pause about the value of the effort.

All of us digital marketers hope for a future in which we can still add value and find fulfillment, even as our work and careers face a growing risk of being replaced by AI. Sometimes it all feels a bit empty and potentially pointless, but here we are.

In case it is not already obvious, I am a bit of a doomer when it comes to AI. So, naturally, I have labored over this article like a true human, just as I have over everything else I have published. But I still have some pretty existential disquiet on this topic, so this AI-themed series will begin with an overview of my biggest apprehensions, and later articles will move into ideas about how our industry, and society at large, might address them.

For this article, let’s begin with the “Dead Internet Theory” and what I think it means for us. We will discuss the topic in the context of Google’s own AI evolution. Then, with that in mind, we will look at how Google’s stance on the use of AI will impact our jobs as SEOs. Finally, bringing it home to the core goals of marketing, we will discuss how AI filtering and bias could impact consumers and what we can do about it.

The disruption is real: The Dead Internet Theory

In general, my trepidation is somewhat aligned with a frame of thinking called “Dead Internet Theory,” which basically just describes a series of somewhat probable occurrences in which the internet becomes (or has already become) functionally unusable, because it is overrun with bots talking to bots, and bots consuming content created by other bots.

In this dystopian scenario, real human content, conversations, and interactions get de-prioritized or drowned out in favor of the more engaged and productive bots. While some people believe that this is already the status quo, I don’t think we are there yet. But I do see it as a risk in the future—especially in terms of how companies will be able to compete in rankings and track success when everything is AI-influenced and personalized.

You should know that I am not alone in my concern. Even leaders like Sam Altman acknowledge the risks—though he prefers to focus on AI’s potential. I am concerned that all the changes will be disruptive and may make some jobs obsolete. I also worry that until there are meaningful consumer protections and regulations, the overall impact of AI in the digital marketplace will be hard to predict and respond to.

Google’s changing stance on AI content & how it could impact SEO strategy

Obviously, the biggest concern for digital marketers and especially SEOs is the risk of increased competition from spammy or even non-spammy AI-generated content.

Google has gone back and forth on their instructions about how and when AI can be used to assist with or fully create content. Most recently, Google clarified that they still expect all content to at least be reviewed by a human before it is published online for them to crawl and index, a standard that they don’t manage to meet in their own AI Overviews.

Since there is no 100% reliable method for identifying when and how AI has been used to create content, it seems inevitable that many publishers, and websites in general, will use AI to enhance and speed up content creation for their brands.

This could be good news for content creators and managers, who now have AI available to help generate content at scale and minimal cost. It is less good for their competition and for the search engines that have to ceaselessly crawl, render, evaluate, index, and rank the content that can now be generated at scale, all the time, on any topic, in any language—or potentially in all the languages at once.

Much of this content will likely be repetitive and add little value, but Google can’t know that for sure without crawling it. As that task keeps growing, we should all expect Google to become more and more stringent about what content they will and will not allow into their index.

With the potential for unlimited AI content generation, on top of the flood of social media content, creators who want to keep a fully human creation process without AI may be drowned out. This is especially true given the simplified access to APIs, such as the newly released Google Trends API, that can help generate and manage content ideas and calendars. If AI-generated or AI-assisted content is consistently as good as human-created content, or indistinguishable from it, it could decimate traditionally created, human content.

Google itself seems surprisingly unconcerned about this potential, so maybe they have protective plans that we don’t know about. Maybe they will lean more on local compute power on consumer devices once Gemini is baked in for all users, and that will help cover the demand. Or maybe, if they become the main publisher (or “re-publisher”) on the internet, deep indexing will become distinctly more optional, and they will be able to save compute power there.

So far, their lack of concern is confusing.

Google’s potential AI path towards self-reinforcing cycles of dissatisfaction

My larger concern is all this AI-created and AI-assisted content and, beyond that, the risk of content being lifted by Google without attribution. This will diminish creators’ incentive to create new and unique content, which, in turn, will lead to a lack of diversity and critical thinking in the content available for consumption.

If this goes on long enough without external forces acting on it, I’m concerned that it will become a self-reinforcing cycle—especially when creators think their consumers can’t tell the difference between real, authentically created content and AI content.

We all like to think that we can tell when something is AI-written or AI-generated, including video and audio. Unfortunately, this is not the case; numerous tests of the most recent AI models show that many people can be tricked by AI. After users find out that they have been tricked by AI a few times, their brains will start to prioritize safety over reality and will likely assume inauthenticity in most instances.

But rather than making us happy, the safety of this assumption will come with a monotony and sameness that is vapid and unfulfilling. Both creators and consumers will find less of what they really want. It is a bit like comparing “fast fashion” to a bespoke, hand-tailored wardrobe: the cost is down and the quantity is up, but the skill involved and the value created are compromised.

Beyond making us less happy and fulfilled, the lack of humanity in the content will eventually erode the trust people have in content across the internet, which already wasn’t great. Add in the fact that video and audio creators can train AI systems on their voice, likeness, and products, and the problem gets even bigger and more concerning. At some point, we will be forced to ask ourselves: “Are any video reviews or testimonials real?” How will we know?

When AI bolsters conformity over creativity

We consumers also risk weakening the internet’s social fabric when we over-rely on AI.

If users are generally tuned out because they are not sure whether they are consuming genuine, authentic content, it could make them less discerning rather than more. With so much content to consume, they may give up looking for authentic or unique content and just settle for the quickest answer they can find. The safest and most widely accepted content will drown out unique or edgy alternatives.

They will favor content with minimal detail and nuance to reduce the time spent wondering whether something is authentic, a natural form of discomfort avoidance.

This natural human tendency to seek shortcuts will be exacerbated by the higher degree of personalization in AI search engines and chat tools. When we let the AI systems we use to learn about the world pre-screen everything we see, we introduce more bias into an already fractured society and diminish the possibility of mutual understanding.

Limiting our sources of information may seem like a great way to simplify the barrage of news and information; filters could helpfully cap the amount of information we are exposed to each day and keep the growing mountain of internet content under control.

It sounds like a delightful respite from the information overload that we all already experience, but there are larger risks to consider here. When we allow AI algorithms to filter the information or sources of information to exactly what we prefer, we may never even be exposed to new content, ideas, or brands. We may not even know that they exist.

Previously, Google users could at least assume that they were being shown content that was fairly evaluated, based on normal ranking factors unrelated to their own filter-bubble preferences. Now, users can override that more balanced option and intentionally limit their exposure to different ideas, which is not ideal in what is normally considered a digital marketplace of ideas.

In this scenario, when we are exposed to new information outside our normal preferences, it will likely be shown lower or less often than the content the AI normally chooses for us. That puts it at a perceptual disadvantage, even though its lower ranking and prominence reflect an algorithmic decision, not a true and honest comparison.

Search engine AI features put unbiased information at risk

Reduced visibility for new and novel information would reinforce existing biases and the filter bubbles people live in, with a wide range of real-world consequences that reach far beyond digital marketing and SEO.

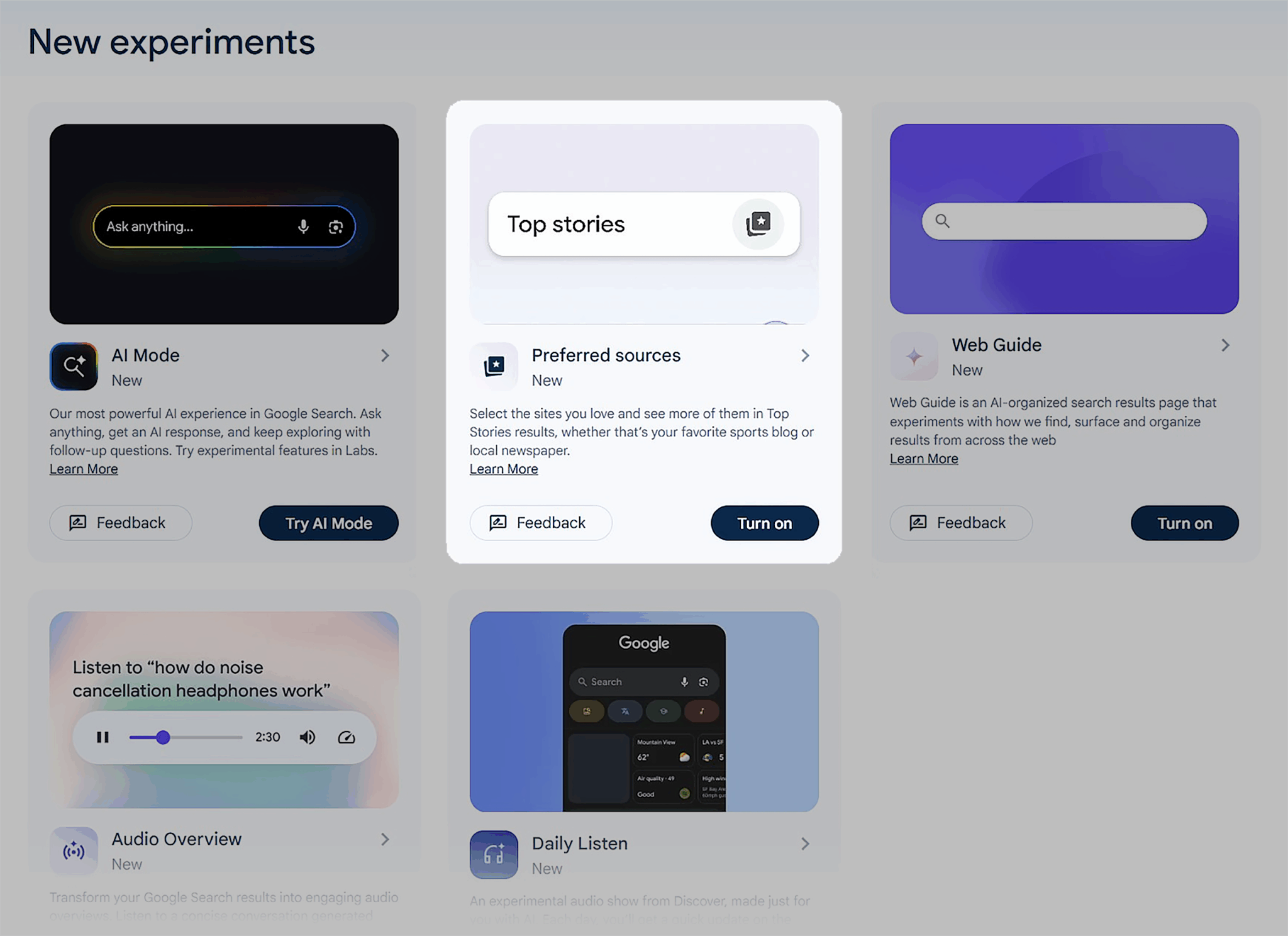

Beyond that, the most concerning thing about bias is that we always forget we have it, even when we set it up ourselves in our preferences, as Google now allows in a new Google Labs experiment. As shown above, the Google Labs “New Experiments” screen lets searchers test setting “Preferred Sources” for their Top Stories, and the AI systems will then reorganize their news search results to favor the selected sources.

Some people will still consciously seek out other information and sources after setting up these preferences, but most people will forget and just move on with life, assuming that everyone else is exposed to the same information and news stories they are. They will have no idea why other people have such different interpretations and impressions of reality.

The unfortunate answer is that the differences may have been caused by a casual or even errant click that sent one person down a different algorithmic path than the other. After that, the ideas and information each person was shown were artificially amplified, and the two doubled down on different beliefs because of algorithmic reinforcement rather than choice or genuine disagreement.

None of this is entirely new. Even before the internet became widely available, teachers and parents worried that children would lose their ability to do math once calculators became common, or lose their ability to spell once word processors added spell-check.

The truth is, all of those things did happen: most people are worse spellers and worse at math without calculators. The consolation is that the impact of those losses was not that bad, because those skills were replaced with others. Maybe the skills were even upgraded, with slow, manual tasks giving way to more complex and useful ones. Will the same be true with AI?

As SEOs, we should remember that people crave authenticity and connection, and LLMs are looking for that too. That means content that is both accurate and fair will hopefully be rewarded with visibility, citations, and engagement. We can already see this in the strong preference many language models show for forum content; they are looking for real human interaction that represents real experiences and multiple perspectives. Knowing this can help guide your SEO strategy, which could shift toward forums or other interactive, community-oriented content.

Where does this leave SEO?

When AI is both our competition and our competitive advantage, there is a risk that only the AI companies win. Whether it is Google, ChatGPT, Claude, or something else, they are the ones who will scrape all the information and present it, hopefully with a link, but maybe not. The more content we and our competitors create, the more the AI language models can crawl and learn about new topics; our hard work becomes their perpetually free source of new information.

When multiple sources cover a topic the same way and provide similar facts or ideas, the language models can trust that there is a consensus and then simply choose whom to cite. This power to decide who is cited and who is not gives any LLM a strong, potentially subversive ability to “call balls and strikes” in ways that could be incorrect, biased, and/or lacking in transparency.

We take it for granted that search engines present information fairly, without influence from money or private deals, and we simply assume the same of LLMs. What if that is not true? What if they are influenced by money or secret back-end deals? Would we even know? As SEOs, we need to move into the future with our eyes wide open to the risks and a deep knowledge of how AI has reshaped SEO. Savvy practitioners will not adopt wildly different SEO strategies; instead, they will refocus known strategies on the new needs of AI tools.

Similarly, when Google writes the rules about how we can and can’t use AI to rank in search results, but they are not expected to follow those same rules when generating the AI Overviews that appear at the top of the search results, they create a structural disadvantage for specializing in organic search. If Google’s goal is to always provide an AI Overview that they can monetize with ads, the changes in traffic referral patterns may prompt experts to shift their specialization to something more predictable, such as PPC. (This topic will be explored more in the next article in this series.)

Even if we are not enticed to switch specialties, organic marketers must stay vigilant. For years, we have assumed that Google presented a fair and balanced representation of the information on the internet and that it prioritized balancing organic results with their paid counterparts. As the ground shifts around search and AI, both as a content resource and as a ranking and referral channel, the balance of power in the marketplace of ideas we are used to is shifting too. We shouldn’t simply assume that the same rules and norms still apply, whether with Google or any other AI or LLM resource we use to create or surface information.
