What is the inevitable evolution of LLMs and search?

It’s clear to me that the current “LLM situation” is untenable. These platforms are offering a very expensive set of products with relatively unlimited access. At the same time, you have content creators and publishers in a state of panic as decreased traffic has become the norm.

Add to this that platforms ranging from Google’s AI Mode to ChatGPT lack clarity on how to monetize their products, and it becomes clear we’re kicking the can down the road. Sooner or later, all bills come due.

LLMs providing such broad access at no cost is unsustainable long-term.

Content publishers offering their content to LLMs for free without traffic or other compensation in return is also unsustainable.

It almost feels like a damned if you do, damned if you don’t moment. Those sorts of realities, while painful, encourage (if not demand) movement towards resolution.

So, how does this all play out?

Market changes are coming, and, in my honest opinion, your marketing and SEO strategies are going to depend on them. But before we get to where we are now and how the LLM scenario will inevitably change, we first have to understand how we got here: stock valuation (sounds boring, but I promise it’s not).

How we got here (Why an LLM marketing correction is inevitable)

The biggest “LLM event” with the greatest amount of impact for marketers wasn’t the launch of ChatGPT or Google Gemini. It was the announcement of Bing’s AI chat experience and its integration into Bing Search.

Sounds peculiar.

However, without this event, the AI wars between Microsoft, Google, and others would not have played out the way they did.

How did things play out? We’re at the point where Google created two all but identical products that must cost a fortune to run. Without Bing’s big announcement, I’m not sure we’d ever have gotten to this level of dysfunction and demand for a market correction.

Could you imagine telling your boss (or conversely, your employees) you want to build two parallel products that basically do the same thing and cost a ton of money to operate, yet you don’t want to charge for it, nor do you have a plan to monetize it? Well, that’s exactly what happened because of Bing beating Google to the punch.

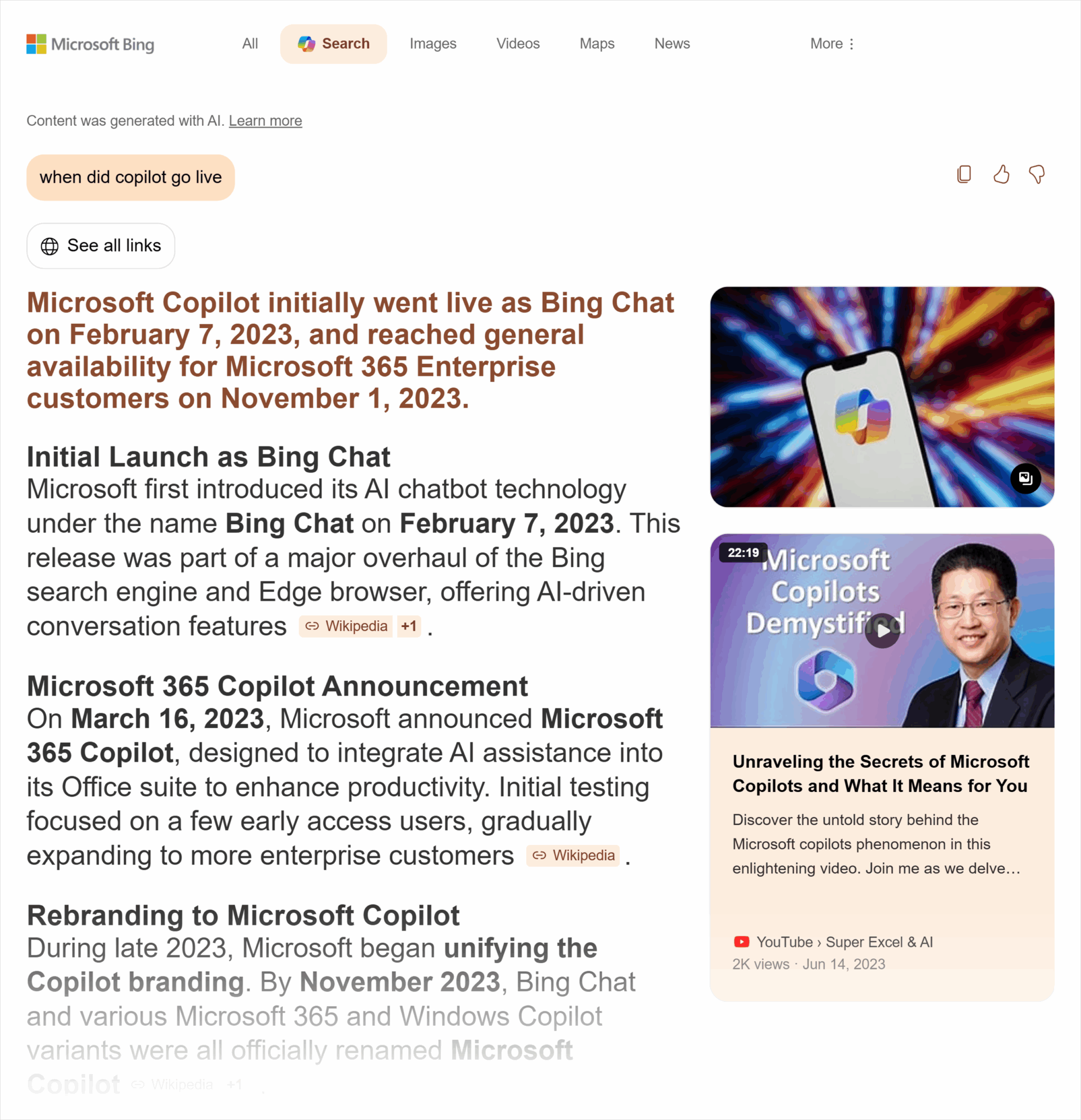

Bing’s February 2023 release of what would eventually be called “Copilot” beat Google to the “LLM in search” punch. While Google had announced “Bard” a day before Bing’s conference, Bing was the first to place the AI chat element into their SERP. (Albeit it only worked in Edge, so who was really using it?)

From that day on (literally), it was a modern-day space race. Not wanting to be overshadowed, Google scrambled and announced “Live from Paris” for February 8, 2023. This, Google felt, would be the moment they reclaimed the narrative from an “unworthy” Microsoft.

Sidebar: Talk about not being able to handle your competition’s success. Bing was king of the hill for an entire day before Google scrambled to get Live from Paris on the books. As Frankie Goes to Hollywood said, “relax.”

Sidebar to the sidebar: The inability of these companies to “relax” and act strategically has been their undoing to no small extent.

The feedback on Google’s Paris event was scathing. Mashable asked, “Hey, Google, are you OK?” VentureBeat described Google’s response to Bing as “muted,” with TechRadar stating, “Clearly, Microsoft has pushed Google into making Bard public a little earlier than it’s comfortable with.”

TechRadar was spot on, and none of us could have predicted the absolute product development and marketing madness that was to ensue.

The consequence of Bing’s win and Google’s snafu is that everything from that point onward was about public opinion (which means everything became about immediate reaction without genuinely considering the long-term consequences). Each of the LLM players had to start thinking about how they were perceived lest they feel the wrath of the investors.

That’s a very important point to understand.

What happened after February 2023 has had, in this author’s opinion, very little to do with developing innovative technologies to improve the web and a lot to do with developing innovative technologies to improve stock prices.

The problem is that this kind of thinking inevitably leads to market dysfunction (as we are experiencing now), followed by market correction (which we have yet to address).

Before I address how I think the correction will play itself out, let’s first explore the great AI stock wars in a bit more detail. Understanding how and why things have played out as they have is as important as understanding where things currently stand. Also, without full context, we can’t really determine the inevitable consequences and corrections that are yet to come.

So Google is focused on stock growth, first and foremost. Why is that so bad? After all, they are a publicly traded company.

There are a million ways to understand this question, but I want to focus on what it means for the wider digital marketing community.

Google has been sending mixed messages and is seemingly operating in a confused state. Yet, we’re left trying to act. Do we act now based on how Google has constructed the AI ecosystem, or do we wait to see how things settle in the end? How can we even predict how they will settle in the end?

Let’s start breaking this down so you have greater clarity.

Google’s march to LLM madness

Google’s Paris 2023 event was really the first time we ever saw Google’s vulnerability as a brand. It was the first time I can recall people legitimately wondering if Google was a brand on the decline and if it would fail to live up to the moment.

For a company, that’s a nightmare scenario. After the PR nightmare that was the Paris event, Google had one last shot to restore user confidence and industry supremacy: Google I/O 2023—which was set long in advance for May.

Google’s annual conference for developers and software engineers is an important event where major products are announced. The outcome of this event can have a huge impact on how Google is perceived by the overall market and investors.

In May 2023, about three months after its February snafu, Google tried to regain lost ground when it announced its “search generative experience” (SGE) would be available in “Google Search Labs” to US users.

In simple terms, Google announced its version of what Microsoft had announced in February. As with Bing, Google would limit who could access it (mainly because the product wasn’t ready and was only announced to keep up with Bing). Only US users who voluntarily signed up to experiment with the SGE product via Search Labs would have access.

Why?

Because the product was not ready for release. Looking back now, it was a crude version of AI Overviews (AIO), which is a crude version of AI Mode. This was the first instance of a pattern of behavior that saw Google developing and releasing products in order to maintain its place in the market.

Think of what happened in terms of stock valuation. What did Google need to do if it wanted to maintain its stock valuation after Microsoft beat it to the punch, and after Google’s own initial response was perceived as weak? It would have to offer an actual product people could use, as Bing had done. It would have to announce that its version of AI in search was rolling out—whether it was ready or not. (Hint: It was not.)

Which is exactly what they did. They offered an extremely limited number of users access to a product that not only wasn’t ready, but didn’t even have a final name yet. (Search Generative Experience…now that’s a name I haven’t heard in a long time.)

Google didn’t make SGE accessible in labs because it wanted to. It had to in order to be seen as keeping pace. For Google, it was the smart move. For users and digital marketers, it was kind of a mess. It brought up a lot of questions about the long-term sustainability of a web driven by LLMs and what it meant for everything from traffic to conversions to whatever performance metric you prefer.

Prior to I/O 2023, Google’s stock value was hovering around $105-$108 per share. After I/O 2023? A new price trend between $115-$125 per share emerged.

Success.

It’s hard to have any sort of market clarity when the main player is developing and releasing a product that’s more about optics and stock valuation than about moving user needs forward.

That leaves digital marketers in a very awkward spot.

Fast forward to Google I/O 2024. The AI hype had only grown over the course of the year, and Google had learned its lesson. It was not going to fall behind; it would keep announcing the next evolution of AI in search.

Whether its product was ready or worthy wasn’t the question. Perception was everything. In order to keep stock valuation in line with the power of AI hype, Google would have to regularly announce something significant about AI in search or risk stagnation (if not worse).

Google would not let February 2023 repeat itself. (Think of that month as a period of trauma that irrationally drove Google’s actions. Of course, that’s my opinion.)

Thus, it was announced at Google I/O 2024 that AI Overviews (formerly SGE) would be open to all US users, and to other countries as time went on. (Over the course of 2023 and 2024, Google had evolved its SGE experience and renamed it “AI Overviews.”)

LLMs on the Google SERP were here, and within a month, the stock price jumped from $168 per share to $177 per share, eventually hitting $186 before falling back down.
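To put those moves in perspective, here is a minimal sketch that converts the share prices quoted in this article into percentage changes. It uses only the approximate figures mentioned above; taking the midpoint of each quoted range is my own simplifying assumption, and `pct_change` is just an illustrative helper name.

```python
def pct_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100.0


# Approximate per-share prices quoted in this article.
# Using range midpoints is a simplifying assumption, not market data.
io_2023_before = (105 + 108) / 2  # pre-I/O 2023 range midpoint
io_2023_after = (115 + 125) / 2   # post-I/O 2023 range midpoint

print(f"I/O 2023: {pct_change(io_2023_before, io_2023_after):.1f}%")  # 12.7%
print(f"I/O 2024 (first month): {pct_change(168, 177):.1f}%")         # 5.4%
print(f"I/O 2024 (peak): {pct_change(168, 186):.1f}%")                # 10.7%
```

On these rough numbers, each announcement lines up with a move in the range of roughly 5% to 13%, which is the pattern the rest of this section leans on.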

Let’s recap:

- We have Google first trying to keep pace with Bing by announcing a limited integration of LLMs into search (2023).

- Google then expands on this limited integration, and brings LLMs to the SERP in a real way (2024).

In two years’ time, Google showed the market that it hadn’t lost its touch and was a real player in the LLM space.

Enter 2025. And if you’re not yet convinced that Google’s major LLM announcements for the SERP have little to do with us as marketers or people as users, check out what happened next: Google announced that AI Mode was live for all US users.

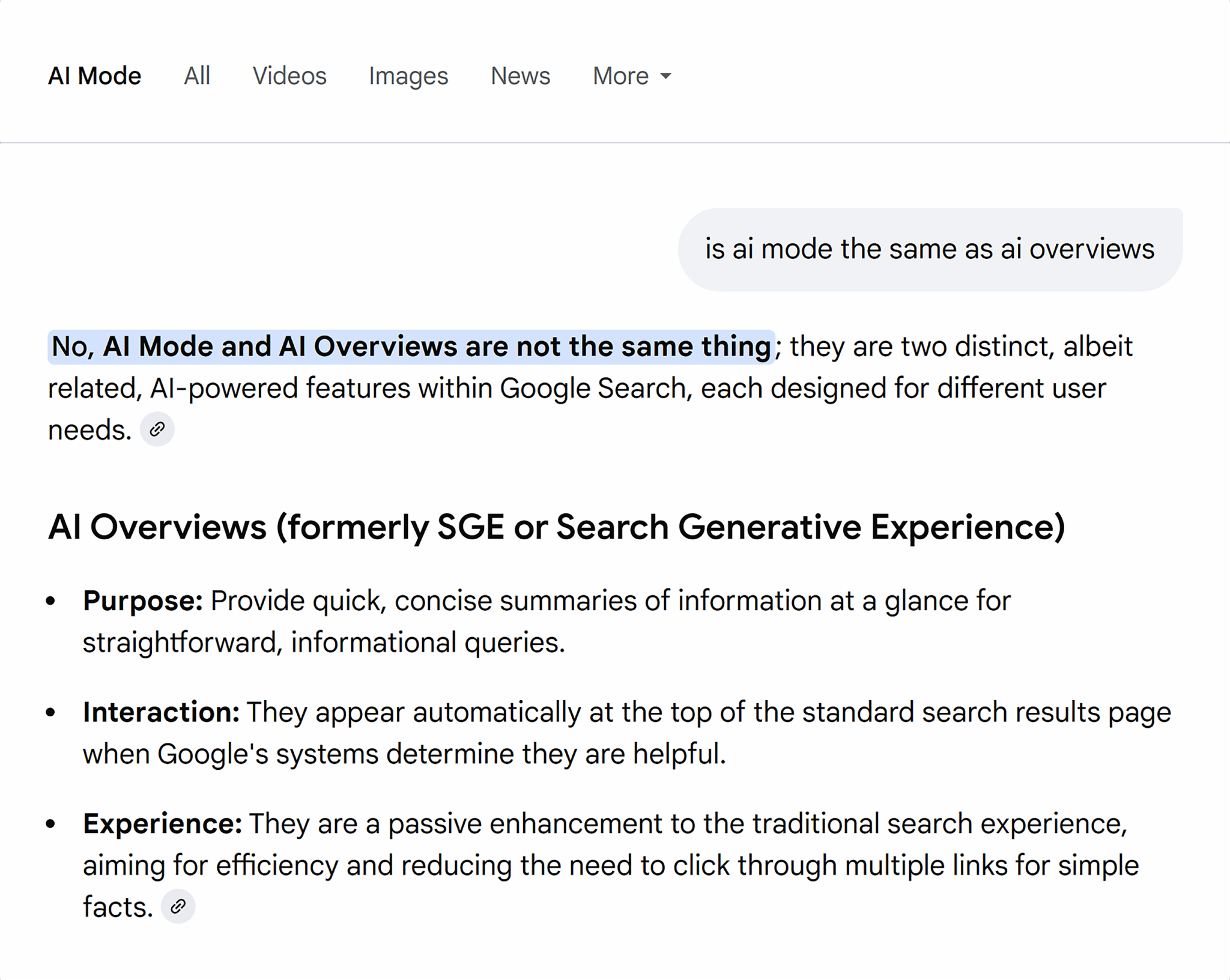

Google (as I briefly mentioned earlier) had been building a second LLM product called AI Mode. Initially, it was, like AIOs, limited to US users who signed up for it in Search Labs. However, at I/O 2025, Google announced AI Mode was open to all US users.

In other words, as of the writing of this article, Google is running two incredibly similar products (AIOs and AI Mode) that are very costly and has no real plan to monetize them.

In fact, it’s pretty clear that AI Mode is the superior choice between the two. AIOs literally serve as a gateway to AI Mode.

Moreover, AI Mode is more of a one-to-one competitor to ChatGPT. It’s also a better product with fewer of the gaffes AIOs have become infamous for.

It’s not a secret that AI Mode is going to replace AIOs at some point. Google’s Head of Search, Liz Reid, was quoted as saying, “This is the future of Google search, a search that goes beyond information to intelligence.”

How did we get here?

We got here because of what we already discussed above: Google needed to maintain a certain level of perception in the race to LLMs on the SERP, and it developed a product that wasn’t really capable of doing that in order to drive stock valuation instead of user satisfaction.

Concurrent with spending time and resources on AIOs, Google was also developing a more substantial and viable product (AI Mode). The latter still isn’t ready for integration into the main search results (we’ll touch on why later).

Now we’re dealing with a situation where we, as marketers, are trying to find some sort of construct to work from, but that construct is incredibly volatile and unpredictable. To put it bluntly: Who builds two of the same tools for the same audience with the same intent behind them, and how predictable is anyone who does?

To make matters worse, Google’s latest positioning for the market only adds more confusion to the equation. Remember, Google has to address the market’s next logical question about Google’s “AI in search viability” (in the form of product announcements).

The next question the market needs an answer to regards long-term viability (i.e., monetization). Are these LLM products actually a sustainable driver of revenue growth?

Thus, at I/O 2025, Google CEO Sundar Pichai talked about how they are lowering the costs of AI outputs.

He wasn’t talking to us, however. He wasn’t even talking to environmentalists. He was talking to investors who want to know how all of this shakes out long term.

With the launch and popularity of LLMs across the board, the question becomes about profitability. Part of the uncertainty is the cost to run these LLMs. Which is why Sundar was talking about bringing down the costs, as Google must start addressing cash flow and profitability.

How do I know that?

Simple. They talked a ton about adding ads to both AIOs and AI Mode at the same event.

However, none of the announcements about ad placements in Google’s LLM properties had much, if any, substance.

For starters, advertisers don’t even know if the click they receive came from an AI property or from within the traditional results. (Yes, as of the time of this writing, people paying Google to run ads do not know if the clicks that cost them money are from AI Mode or AIOs or just from traditional placement within the search results.)

That’s a telltale sign that the clicks are not coming from AI Mode or AIOs (because if they were, Google would be shouting it from the rooftops and showing off data to back it up).

Moreover, Google made all sorts of confusing announcements about ads appearing above, below, and within AIOs. It appeared as though Google was purposefully trying to muddy the ad click waters. And again, advertisers have no access to data that would indicate where the clicks came from.

Google’s AI properties not being a potent source of PPC clicks aligns with what has become known as the “Great Decoupling” of clicks and impressions on the organic side. (We don’t have direct data on this because Google refused to segment AIOs in Search Console, another telltale sign.)

My thinking is, if people are not clicking on the organic citations in Google’s AI properties, they are certainly not clicking on the ads (which have a significantly lower CTR than organic results historically).

Google’s announcement of ad placement within AIOs and AI Mode at I/O 2025 was a red herring.

Essentially, we have Google announcing ads that it knows no one will click on and that are not a sustainable form of advertising, and therefore revenue. But reality doesn’t matter here—perception does.

Google isn’t talking about ads in AI Mode and AIOs because it has a plan to monetize them in this way. It’s talking about monetization because it has to in order to keep up with the answers investors want. Thus, Google announced monetization to keep the stock hype real, when in reality, the program they announced would be completely ineffective at driving revenue.

Even if Google had a genuine ad revenue plan for AI Mode and AIOs, they can’t implement it right now. If they do, folks will likely flock to ChatGPT instead. AI Mode has to both establish itself more and be substantially better than ChatGPT before Google can implement any monetization plan.

Google is stuck between monetizing to keep the stock valuation up and not monetizing to keep the stock valuation up. It’s certainly a weird place to be.

And so, how are we supposed to create a strategy to approach LLMs if Google itself is behaving irrationally? (Irrationally from our point of view, that is. From the standpoint of their immediate goals, it all makes sense.)

My answer is to look at the inevitable market corrections that are yet to come.

Now that we better understand Google’s behavior…now that we can separate what’s real from optics…now that we can distinguish between what might be a legitimate AI product within the Google ecosystem and what’s there for stock valuation…now we can start to understand the consequences of it all—and that’s a place we can act from.

The market corrections coming to LLMs

As I said at the outset, the whole situation regarding LLMs in search isn’t sustainable in its current form. I think now that we have more context, it’s easier to see why that is.

I’m a big believer that if something feels like it will inevitably hit the fan, it will. It’s a matter of when, not if.

Here’s how I see it playing out, and, consequently, what I think it means for developing a strategy to approach LLMs in search. Let’s start with the first AI market correction:

AI hype will gradually decrease: Everything I’ve said here is predicated on the AI hype cycle. If people of all sorts were not hyped about AI, none of this would be an issue. Google can’t drive up its stock valuation if people in general aren’t very excited about AI technology. The increase in stock valuation is only possible as investors see how hungry humanity seems to be for AI technology.

This dynamic can’t—and won’t—last forever. The truth is, we’ve already started to move past it (which is exactly why Google is starting to talk about cost reduction and monetization—more on that soon). It’s possible that generative AI is slowly becoming second-fiddle in the hype machine to agentic AI.

But none of these hype cycles will last forever (and my digital marketing veterans know this all too well).

The reason why the hype machine won’t last is that generative AI and LLMs are not always great. Sometimes they can be fantastic, other times less so. They can save time on certain tasks, but not others.

Put simply, AI isn’t a panacea. It’s a fabulous technology, but not a cure-all.

Meaning the hype and the reality don’t align.

I think it’s been one of the best-kept secrets in all of marketing, but large enterprise-level teams have had huge amounts of skepticism about generative AI from the get-go. They just don’t talk about it a lot because these brands don’t want to be seen as going against the grain. (They also aren’t about to tell you their strategies.) But these teams have not adopted the technology in the same way “the rest of us” have.

The skepticism seen at the enterprise level is rooted in reality. These enterprise teams aren’t naysayers; they just don’t think using generative AI to the extent we do would allow them to create a consistently quality product, which would hurt their bottom line.

That right there is the unsaid truth. AI is amazing, but it’s not always great for our bottom line. It can do some incredible things, but a lot of what generative AI and LLMs output is inaccurate, low-quality, and a shell of what an actual “quality output” would be.

Generative AI providers are not unaware of this. I had a client in the space, and the entire reason they hired me was to help pivot their brand positioning because they realized a lot of what is being offered and said to users is overpromising.

To think that people won’t catch on and adjust is fantastical. It’s already happening. I would love for it to happen because people realized the big tech companies are manipulating perception for the sake of stock valuations, but that’s being too idealistic.

Instead, people are just now starting to catch on and admit that generative AI has serious flaws. Workplaces are providing training about the limitations of AI and what to watch for in the output. All of that chatter and conversation is compounding at the moment.

As I’ve said elsewhere, my wife is a great example of this. She’s a nurse manager, and she was required to take a course about “AI.” She came home shocked at the level of inaccuracies and what people are using AI for. (You can likely imagine why a healthcare professional would be required to take such a course.)

As more of the population becomes increasingly skeptical about LLM output and generative AI as a whole, the power of big tech to leverage its AI development for stock valuation diminishes.

The first, and more powerful, market correction is that people will no longer feel as hyped about AI, nor be as easily manipulated by AI hype.

This leads us directly to the second AI market correction that is going to happen:

AI hype will no longer drive increased stock valuation: Once the hype around AI finally starts to decrease, Google will no longer be able to drive stock valuations with announcements like, “AI Mode is in Search Labs for the US, and only the US” (or such announcements will at least be significantly less impactful).

The incredible impact AI tech development has on stock valuation directly depends on the hype. If the hype wanes, investor outlook on the tech will also wane. When that happens, Google will have to focus on more of a cashflow-first profitability model.

If the stock valuation can’t cover the extreme cost of business (which for Google is double since it’s running a duplicate product), then it becomes necessary to move to a profitability model.

That’s when we’ll finally see Google figure out how to monetize its LLM in search. Personally, I expect it will work like a YouTube ad. You’ll get 10 free prompts per day, and before you get five more free prompts, you’ll have to sit through some sort of interstitial ad.
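To make that guess concrete, here is a minimal sketch of how such a gate might behave. Everything in it is hypothetical: the `PromptQuota` class, its method names, and the 10-free/5-per-ad numbers are taken from my speculation above, not from anything Google has announced.

```python
from dataclasses import dataclass, field


@dataclass
class PromptQuota:
    """Hypothetical daily quota: free prompts first, then ad-unlocked batches."""

    free_per_day: int = 10  # assumption: the "10 free prompts" guess above
    bonus_per_ad: int = 5   # assumption: the "five more free prompts" guess
    remaining: int = field(default=0, init=False)
    ads_watched: int = field(default=0, init=False)

    def __post_init__(self) -> None:
        # Start each day with the full free allowance.
        self.remaining = self.free_per_day

    def ask(self) -> bool:
        """Spend one prompt; return False if the user must watch an ad first."""
        if self.remaining == 0:
            return False
        self.remaining -= 1
        return True

    def watch_ad(self) -> None:
        """Sit through one interstitial ad to unlock another batch of prompts."""
        self.ads_watched += 1
        self.remaining += self.bonus_per_ad


# A user who sends 12 prompts in one day, watching an ad whenever blocked.
quota = PromptQuota()
for _ in range(12):
    if not quota.ask():
        quota.watch_ad()
        quota.ask()

print(quota.ads_watched, quota.remaining)  # 1 3
```

In this toy run, a user who sends 12 prompts sits through exactly one ad, which is the kind of trade-off that makes each follow-up prompt a more deliberate choice.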

The only reason we currently have so much free access to such a costly product is that Google and ChatGPT are fighting for dominance. I suspect this is going to be harder for ChatGPT to win, as it has griped about how much users saying “please” and “thank you” in their prompts costs. Google’s got better product integration and a pretty big pocketbook by comparison.

However the corporate chess ends up playing out, the reality is still the reality: As the hype wanes, the LLMs are going to have to shift financial gears. (Which you can tell that Google already realizes from all their talk about ads in AI Mode and AIOs.)

Now we come to the final market correction, the content correction:

The content in LLMs will start encouraging exploration: As dumping out more and more responses becomes unsustainably expensive, the content within LLMs will change as well. Google is already onto this. (They’re very good at seeing what’s coming.) As dissonant as their product development strategy has been, Google has always shown itself to be very adept at understanding what people want.

Once you’re operating within a profitability system, your entire way of thinking changes. You go from spending like a teenager at the mall to being middle-aged and with a mortgage faster than you can say “home equity loan.”

At the moment, LLMs are built within the context of free money. The mindset is to spit out as much free access as is necessary to acquire users, round up investment, or increase the stock valuation. But as with every bubble, this too will burst.

When it does, these platforms will suddenly start considering what people really want from an LLM in totality. (I think they currently look at one slice of user demands at a time, but lack total unification.) On a dime, Google will stop talking about how everyone loves AIOs and shift to “people want to explore more.” Which is exactly what Google has been saying.

Google has been talking more about people exploring the web and how that’s a human need. For example, in an interview with The Verge, Google CEO Sundar Pichai said, “I think part of why people come to Google is to experience that breadth of the web and go in the direction they want to…”

I think Google is talking about things in this way because they legitimately know that’s how people operate and behave online. And also, it’s going to be way cheaper.

If Google’s AI Mode were part prompt and part portal instead of being 100% prompt-focused, it would solve so many issues for Google.

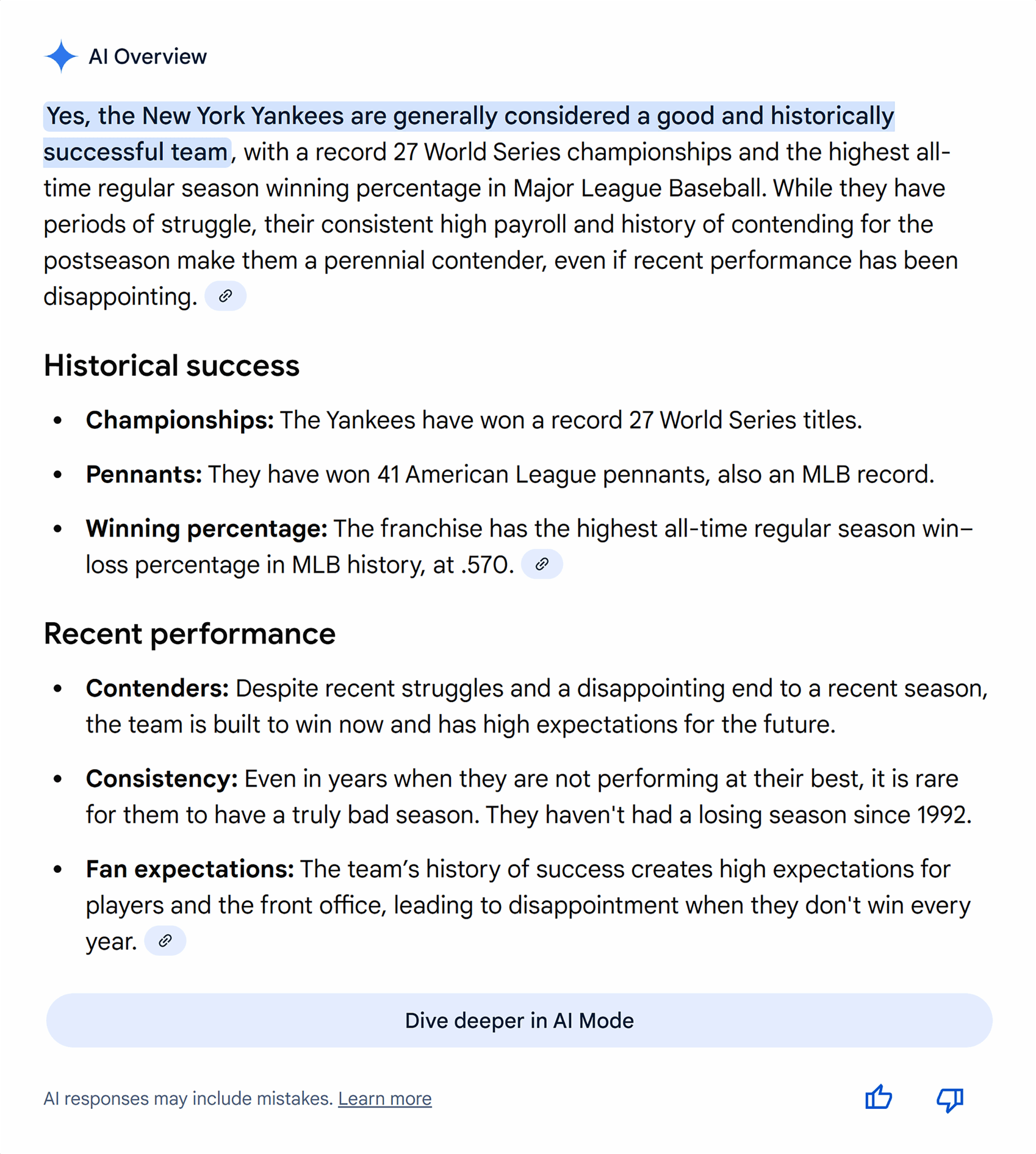

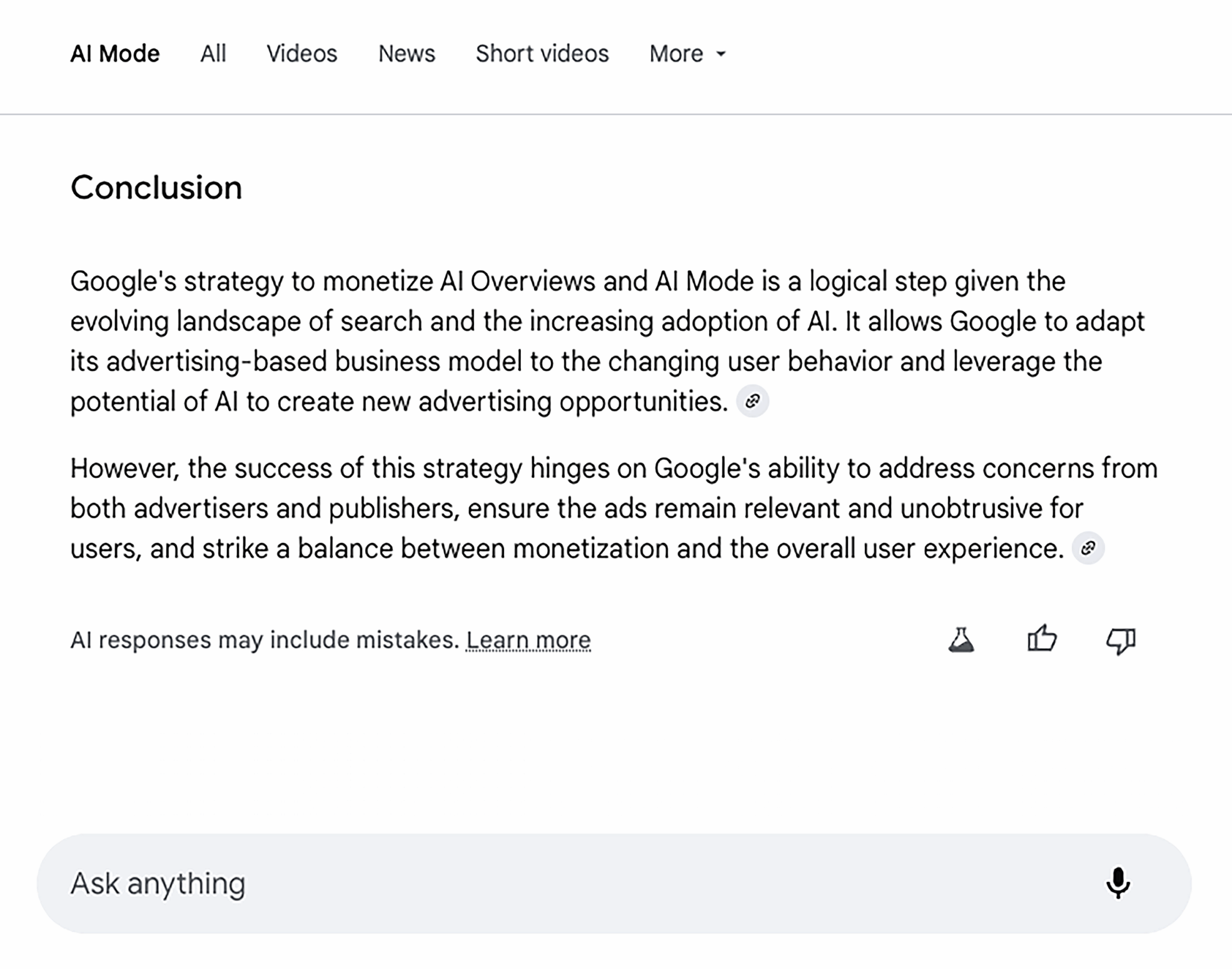

If I ask AI Mode to tell me about the history of the New York Yankees, I get this typically long, undynamic summary:

Imagine if I got a shorter summary accented by all sorts of entry points to see videos about the history of the Yankees, images of the team throughout history, a list of some podcasts on the topic, and more. Wouldn’t that be way more dynamic?

It would also be cheaper. How? Because then I would engage with the full output and ask follow-up prompts out of desire, not by default.

I might read the summary, see a video card, and watch a YouTube video, then come back to AI Mode with more questions about the team. My follow-up prompt would then be more purposeful and not just a knee-jerk reaction.

Which would make sense if I knew I had to view an interstitial ad before asking the next prompt.

After all, I’m not going to sit through some ad just to ask a brainless follow-up prompt. If, however, the follow-up is purposeful, then sure, show me an ad.

Deepening the knowledge scheme of the user results in more purposeful prompts, which cuts down on costs and makes the follow-up prompt more intentional. That’s better for both the users who have to sit through the ads and the advertisers who want you to click on their ads.

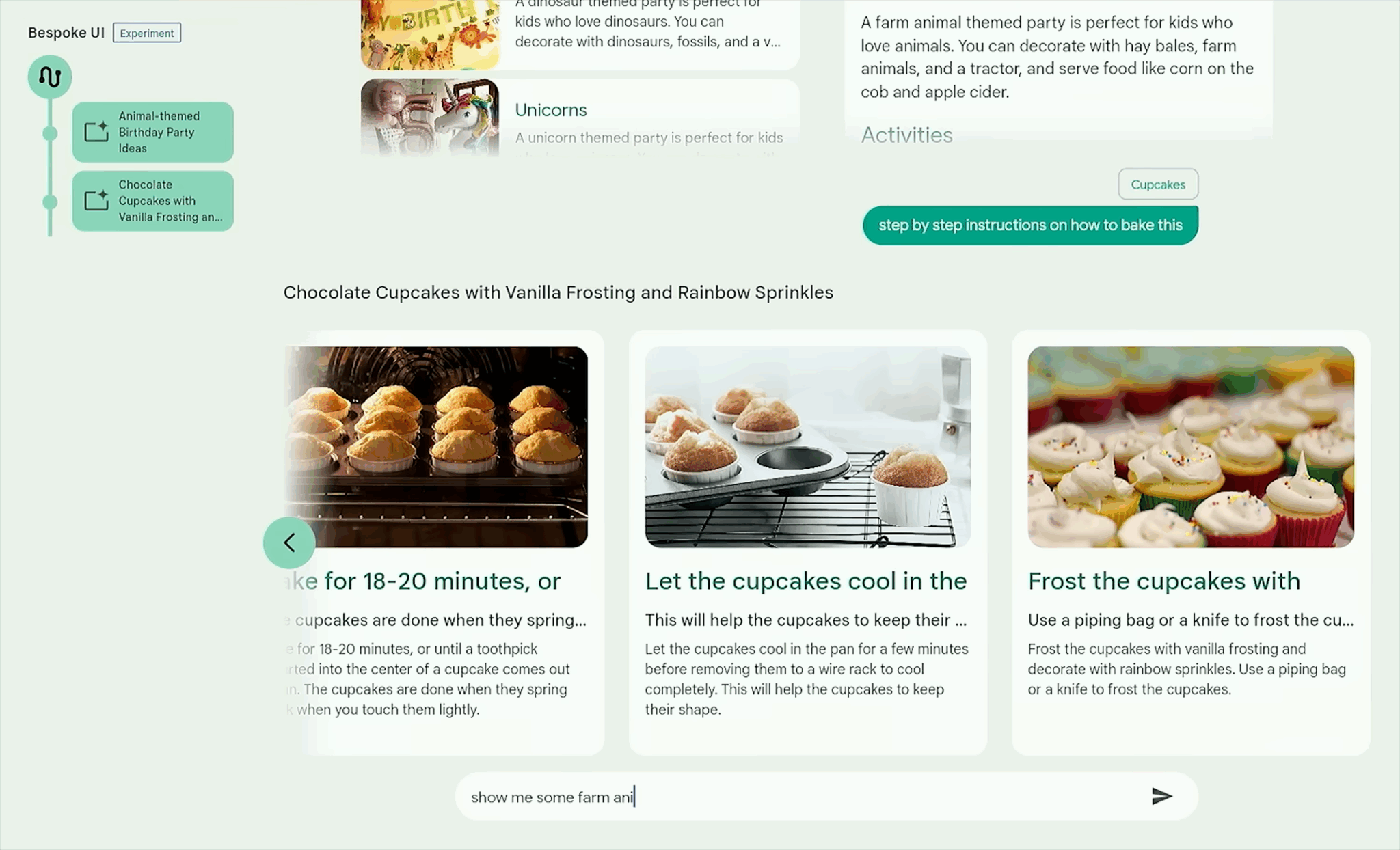

That scenario isn’t crazy. It’s a form of what Google already showed us when they first demoed Gemini.

Here’s a screenshot from that demo:

What you’re looking at is basically a knowledge portal. It’s a custom multi-modal response whose format aligns with the query/prompt and that allows for follow-up.

It’s similar to what I described earlier, with AI Mode being part prompt-based and part content portal.

This is where the content starts to balance out. If what I am saying occurs, and AI Mode morphs from a linear LLM into a content portal that encourages exploration (which is very much part of how we learn and function), then web-generated content has more room to thrive.

We’re seeing the web push back on LLMs with things like Cloudflare’s CEO looking to coerce Google (and beyond) by blocking AI crawlers (by default) and moving to a pay-per-crawl model. (The practicality of this is debatable, as doing so would block your entire site from all of Google currently.)

Compared to what Cloudflare (and others) have in mind, the market correction I’m talking about here will help bring that balance back a bit more organically (no pun intended). In an exploratory model, there is room for AI output and there is room for exploration entry points. This gives more opportunity to web content.

Does it return it to what it once was? No. Thank God. (More on that below.)

To summarize, the market correction that sees less hype around AI leads to a more profit-focused strategy for platforms like Google, which leads to a more balanced content experience across the platforms by encouraging more exploration.

Now what? What do you do with all of this? How does this help your actual strategy?

How to develop your visibility and marketing strategy for LLMs

You need space. You need space so that you can move, pivot, and adjust as everything around you changes.

Everyone is trying to make sense of everything. However, without understanding the full context, it’s hard to know what you’re looking at. The problem with investing in a strategy for dealing with and thriving with LLMs without the context we explored here is that it can pigeonhole your activities.

In other words, my biggest piece of advice for you is to leave yourself the space needed to allow everything to play out.

If you hedge your bets, you’ll be in a better position once the inevitable market corrections occur. Imagine a team that sees the LLM ecosystem as it is now and determines that this iteration of LLMs and search is here to stay now and forever. Everything from their strategy to their allocation of resources would become completely stuck as LLMs and the ecosystem around them evolved.

Accounting for the upcoming adjustments and doing your best to directionally understand where things are headed is invaluable. No one has a crystal ball and can tell you exactly how everything will play out. I think certain inevitabilities and corrections must happen, but I can’t say when and how they’ll happen.

Without accounting for inevitable shifting, you’ll just end up feeling stuck and endlessly trying to adjust to change in the ecosystem. However, if you can account for the fact that there will be adjustments, you can construct your strategy and team in a way that isn’t over-invested in the here and now and can move with the inevitable changes coming down the line.

Strategic tips:

- Think about your content production more conceptually. If you’re just trying to drive traffic by getting listed in citations (if that even works), then what happens when there’s a shift that upends how citations are placed and even accessed?

- Do more than one thing. If the reason why you want to engage in any given activity is simply to drive traffic, I don’t recommend doing it (as a rule, though there are a lot of exceptions to the rule). What drives traffic today may not even exist tomorrow. In an ecosystem that will inevitably change, dedicating too many resources to an activity that is simply a distraction is risky.

If, however, that activity has the potential to not only drive traffic but also do other things for you, such as build authority, generate resonance among an audience, build up your web presence, position your brand in a certain way, and so on, then go for it. Now, if the traffic potential changes, you’re not stuck in an activity that has no value for you. You may still want to pivot and adjust, but you won’t be wasting your time and money in the interim.

- Be balanced. You have folks on the one side screaming that SEO isn’t dead. Then you have folks screaming, “do brand—performance marketing is dead.” The truth is somewhere in the middle. Find a strategy that allows you to develop an extremely strong web presence (a lot of which is based on strong brand marketing tactics that develop connection and audience resonance). At the same time, hunt for and take advantage of performance opportunities.

Your strategy should be like a homepage. Do you want your homepage to have no ability to emotively connect with your audience? No. Do you want your homepage to not explicitly discuss what you offer and appropriately encourage conversion? Also, no.

Your approach to SEO and LLM visibility should be the same.

The web has changed, and a wider, more substantial approach to brand for increased web presence is a must (which has been difficult for a lot of performance marketers to really appreciate). At the same time, there are opportunities to perform better (and even more immediately), and you should capitalize on them.

The brand work you do should set you up to perform better and without as many costs or resources. The more you can integrate the two sides of marketing, the better you’ll align with where I think things are headed, and without locking yourself in as things change.

Don’t expect a return to peak search traffic

I want to end with one point. While I don’t think the traffic construct LLMs currently create is healthy, and while I do think there will be a correction, I do not think we’re ever going back to the “good old days.”

And that’s a good thing, because, to quote Billy Joel, “the good ole days weren’t always good.”

There’s already been a market correction to content. If you’re still of the opinion that this correction (which has resulted in less traffic to many sites) is the fallout of AI tech acting improperly, I have news for you: It’s also the result of performance-focused content not addressing real user needs and the internet user pushing back on the expectations of what web content should be.

I don’t believe it would be good for the internet as a whole to end up back where we were with 100 pieces of content on the same topic, each not offering any real differentiation or value from the next.

Yes, I do think more exposure for web content is inevitable. Mainly because it’s human nature to explore and build knowledge schemes.

I do not, however, think it will be as it was before.

The web is narrower than it was, and it is not going to exponentially widen, in my opinion.

The opportunities will be more selective, even as LLMs evolve.

Value-based content with actual substance and genuine differentiation will find space. The web is heading towards being a more “specific” place, and not only can nothing stop that (since it aligns with what people want and how they consume content now), but nothing should stop it because it’s a healthy evolution, albeit painful at times.
