AI progress stalls for SEO tasks despite wave of new models

AI models released in the latter half of 2025 have not gotten better at performing SEO-related tasks.

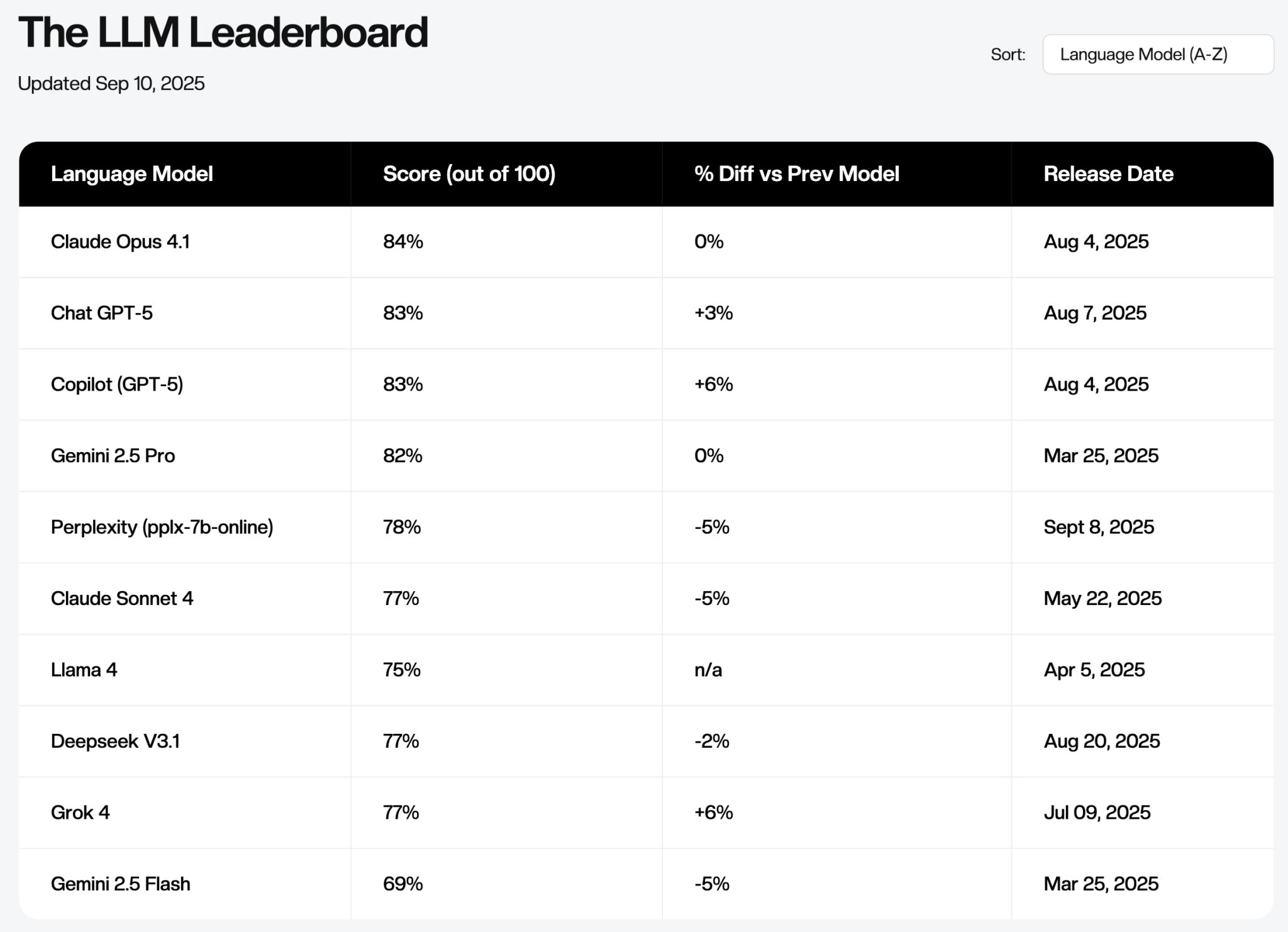

TL;DR: What you need to know about the LLM benchmark

- Claude Opus 4.1 remains the best language model for performing SEO-related tasks like technical SEO, localization, SEO strategy, and on-page optimization.

- ChatGPT-5 has improved in our benchmark despite the public’s negative reaction to its initial release.

- Copilot, which leverages GPT-5, is as performant as OpenAI’s model. This is a major upgrade as it previously underperformed.

- Gemini 2.5 Pro is a strong third option. It has the most potential impact for SEOs and marketers due to the base product integration (Gmail, Sheets, Slides, Docs) and AI-focused modalities that push its utility even further (Opal, NotebookLM).

The AI SEO Benchmark

In April, Previsible launched the AI SEO Benchmark, a structured effort to evaluate how effectively large language models (LLMs) can perform real-world SEO tasks. This study was focused on answering two core questions:

- Can AI reliably perform SEO tasks at an expert level?

- As these models improve, will their utility change how marketers should resource for SEO and GEO tasks?

To answer these, we curated a comprehensive set of questions across multiple SEO disciplines: content strategy, on-page optimization, link building, and technical SEO. These questions were developed by a team of seasoned SEO professionals, each with 10+ years of experience in their respective specialties.

We then ran leading LLMs through this battery of questions, scoring their responses out of 100. This benchmarking approach mirrors how AI performance is tested in fields like software development, mathematical reasoning, and logic-based tasks.
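To make the idea of a score out of 100 concrete, here is a minimal sketch of how graded answers could be rolled up per discipline and overall. The data layout, the sample categories, and the equal weighting of disciplines are assumptions for illustration only; this post does not describe the benchmark's actual scoring formula.

```python
from collections import defaultdict
from statistics import mean


def score_model(graded_answers):
    """Roll graded answers up into 0-100 scores.

    graded_answers: list of (category, points_awarded, points_possible).
    Returns (overall_score, {category: score}).
    Assumption: every category counts equally toward the overall score.
    """
    by_category = defaultdict(list)
    for category, awarded, possible in graded_answers:
        # Normalize each answer to a 0-100 scale before averaging.
        by_category[category].append(100 * awarded / possible)

    category_scores = {c: mean(v) for c, v in by_category.items()}
    overall = mean(category_scores.values())
    return overall, category_scores


# Hypothetical grades for a single model.
sample = [
    ("technical SEO", 1, 2),
    ("technical SEO", 2, 2),
    ("content strategy", 2, 2),
]
overall, per_category = score_model(sample)
print(overall, per_category)
```

Averaging per discipline first keeps a discipline with many questions from dominating the headline number, which matters when a model is strong on content tasks but weak on technical SEO.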

Initial findings

Our first benchmark in April delivered impressive, albeit unsurprising, results:

- LLMs performed well across content-focused SEO tasks like keyword strategy and metadata creation.

- However, LLMs struggled with technical SEO, where precision and predictable thinking are critical.

A new wave of models

Since then, the landscape has changed dramatically. Nearly every major AI provider has released a new model (with the notable exception of Meta’s Llama). With this influx of updated capabilities, we’ve re-run the benchmark and refreshed the leaderboard.

So how do the latest models stack up? And what does this mean for how SEO teams allocate time, tools, and talent?

In the next installment, we’ll share updated scores, performance breakdowns by SEO discipline, and implications for marketers.

A lot has changed since April, so let’s take a look at the leaderboard now that nearly all major AI firms have released new models (Meta, with Llama, being the exception).

AI SEO Benchmark

The benchmark has seen some movement but hasn’t broken through the ceiling of what was possible in April.

If you’re not a trained SEO, I’d be extremely cautious about trusting LLMs to perform SEO tasks.

In researching this post, we reached out to the SEO community for examples of AI run amok.

Here are a few examples:

- When I first started using AI for SEO, it found 404 errors for URLs that didn’t exist, which AI claimed had backlinks. I presented these findings to the dev team and management as some sort of big “win.”

- I needed to perform a rank drop analysis for a large site with a short turnaround time. I ran the analysis through ChatGPT and was impressed by the categorization and the insights. The team was excited and wanted a deep dive, further analysis, and a presentation of the findings. When I dug a little deeper, all of the underlying “analysis” turned out to be meaningfully off base, and I had to start over and looked foolish.

- LLMs do not comply with word counts; they don’t even understand them, or so I’m led to believe. I ran a script that automated a couple thousand pages of HTML edits, and the result was full paragraphs of content and essays in title tags (usual max characters 160!) that also cost way more than I wanted to pay for!

These are anecdotal experiences, but they come from professional SEOs. If you’re an executive who cares about search, you still need trained SEOs who can utilize LLMs properly.
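The runaway title tag example suggests a cheap safeguard: check the length of LLM-generated fields before writing them to a page. The sketch below is one way to do that, not a prescribed workflow. The field names and character limits are assumptions (common rules of thumb, not official search engine limits) and should be tuned to your own guidelines.

```python
# Hypothetical limits: rules of thumb, not official search engine limits.
MAX_CHARS = {"title": 60, "meta_description": 160}


def validate_fields(fields, limits=MAX_CHARS):
    """Return (field, length, limit) for every field that exceeds its limit.

    fields: dict mapping a field name to the LLM-generated text.
    Fields without a configured limit are not checked.
    """
    problems = []
    for name, text in fields.items():
        limit = limits.get(name)
        length = len(text.strip())
        if limit is not None and length > limit:
            problems.append((name, length, limit))
    return problems


def safe_to_apply(fields, limits=MAX_CHARS):
    """True only when no generated field is over its limit."""
    return not validate_fields(fields, limits)


# Hypothetical LLM output for one page.
generated = {
    "title": "An essay-length title " * 5,
    "meta_description": "A short, sensible description.",
}
for name, length, limit in validate_fields(generated):
    print(f"Rejecting {name}: {length} characters (limit {limit})")
```

Running a check like this on a small sample of pages before a bulk edit also surfaces cost and quality problems while they are still cheap to fix.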

Has AI progress slowed down?

If you’re not “AGI-pilled,” you’ve probably noticed the moderate pace of change this year. There is disruption, but it is mostly impacting the hype bubble, with ChatGPT-5 notably underperforming after its debut.

That isn’t surprising given what Ilya Sutskever told Reuters last year: that results from scaling up pre-training (the phase of training an AI model that uses a vast amount of unlabeled data to understand language patterns and structures) have plateaued.

AI will continue to progress. This benchmark focuses on current utility for businesses.

If these tools aren’t providing value or efficiency in our current workflows, what good are they? Google has been making gains in that area.

Google is the dark horse

A year ago, I had written off Google’s early Gemini models. As an early user, the experience was underwhelming and, frankly, unusable. However, my perspective has completely shifted with the release of Gemini 2.5 Pro.

Gemini 2.5 not only performs impressively in our benchmark, but it’s also deeply integrated across the Google ecosystem. That’s where its true advantage lies.

I can now draft an email that automatically understands the context of documents I’ve created in Google Drive, reference meetings from Calendar, or pull insights from Google Docs and Sheets, all within a single interface. That’s a real, seamless utility that no other LLM currently offers at scale.

While many LLMs struggle to build a sustainable moat, Google already has one: ubiquitous data integration. The ability to retrieve and act on relevant information across all Google products is a strategic advantage that’s hard to replicate.

Is it perfect? Not yet. However, if the pace of product improvement continues, Google could quietly become the most dominant player in applied AI.

Applying the Benchmark: Where AI stands today

We built this benchmark to be a living tool, something we’ll continue to update as new models are released and capabilities evolve. So where do things stand as of September 2025?

Can AI reliably perform SEO tasks at an expert level?

No. Despite major advancements in LLMs, most still lack expert-level execution, especially in areas requiring nuanced strategy, technical precision, or systems thinking.

Will model improvements change how marketers resource SEO and GEO functions?

Not meaningfully. We’re seeing incremental gains in speed and support for certain tasks, but not enough to warrant a full shift in team structure or investment strategy. The utility lies in efficiency gains, not automation at scale.

In short, don’t expect ChatGPT or Gemini to replace your SEO team. Expect them to enhance it when used wisely.

AI still disappoints on complex tasks. But the gap is closing.

Stay tuned to the benchmark. More importantly, start leveraging these tools before your competitors do. Early adoption isn’t just a productivity boost – it’s a strategic advantage.
