7 hard truths about measuring AI visibility and GEO performance

Fair warning: This article may make some people who’ve been hyping AI visibility tools uncomfortable.

After 18 years in the search industry, however, my professional integrity doesn’t allow me to stay quiet.

I have zero agenda here. Many of the misconceptions discussed below actually benefit me, both as the co-founder of an AI visibility tool and as someone who offers GEO services.

Over the past few months, many claims have been shared as facts that simply aren’t accurate. Let’s clear things up.

1. AI search didn’t kill Google search

Quite the opposite.

It doesn’t matter how many news sites publish clickbait headlines for traffic, how many VCs hype AI search because they’ve invested in startups, or how many AI visibility tools feature that claim in their pitch decks to attract clients.

That doesn’t make it true.

What does? Data.

Here are a few examples:

- Semrush’s latest study, which analyzed more than 260 billion clickstreams, found that ChatGPT usage hasn’t reduced Google searches. It has actually increased them. And before you claim Semrush is biased toward Google, remember that its product also includes AI search tracking.

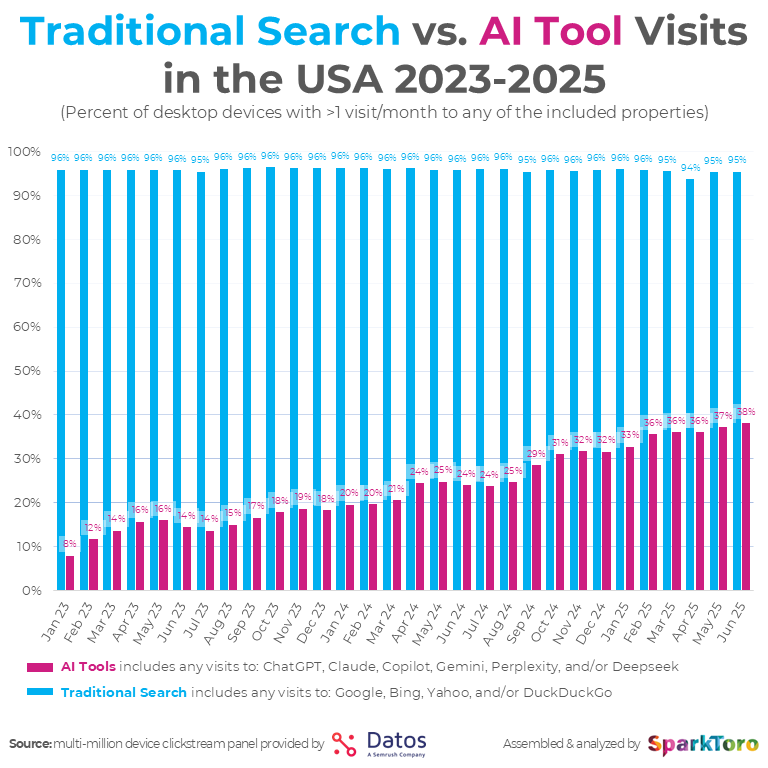

- Datos’ State of Search Q2 2025 report, created in collaboration with Rand Fishkin, CEO of SparkToro, shows Google holding a dominant 95% market share.

How is it possible that ChatGPT’s user base doubled over the past six months to more than 800 million users, according to OpenAI, while Google’s overall search volume has barely declined?

OpenAI published a report in September showing how people actually use ChatGPT.

Only 21.3% of conversations involved seeking information.

Within that category, just 2.1% of all conversations focused on purchasable products, while 18.3% were about specific facts or details.

The purchasable-products slice is the only one relevant to brands trying to reach potential buyers.
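To put those shares in proportion, here’s the arithmetic as a quick Python sketch. It reads the 2.1% and 18.3% figures as shares of all conversations, since together they account for nearly the whole 21.3% information-seeking category. The variable names are illustrative.

```python
# Shares of all ChatGPT conversations, as cited from OpenAI's September report.
information_seeking = 21.3   # % of all conversations
purchasable_products = 2.1   # % of all conversations (reading assumed above)
specific_facts = 18.3        # % of all conversations (reading assumed above)

# Purchasable-product conversations as a fraction of the information-seeking category
share_within_info = purchasable_products / information_seeking

# Whatever is left of the information-seeking category after the two sub-slices
remainder = information_seeking - purchasable_products - specific_facts

print(f"Product-related share of information seeking: {share_within_info:.1%}")
print(f"Product-related share of all conversations: {purchasable_products:.1f}%")
print(f"Remaining information-seeking share: {remainder:.1f}%")
```

Even inside the information-seeking category, only about one conversation in ten is about something a user could buy.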

Even then, some of those “searches” come from users who already know which brand they want, meaning it’s not a true discovery moment.

Another factor to consider is the user journey.

If I ask ChatGPT, “Which car insurance company do you recommend?” and it names a few brands, my next logical step is often to Google one of them to visit the website.

Like it or not, for many prompts, the website is still the final destination.

That may change now that OpenAI has launched its own browser.

But as of today, the reality is this: The overall search market is expanding.

AI hasn’t eaten Google’s share. It’s enlarged the pie.

And to anyone saying, “Everyone I know uses AI chatbots. Nobody Googles anymore.”

You and your friends are not the whole market.

2. No AI visibility tool can actually get you into AI answers

Where do I even start? History, as always, repeats itself.

What’s happening now feels like time travel back to the early days of the SEO industry.

Back then, the first SEO monitoring and intelligence tools promised things like, “We’ll get you to the top of Google.” Sound familiar?

The core question is the same now as it was then: Who’s actually optimizing?

Just as there’s no tool that can do SEO for you, there’s no tool that can do GEO for you.

Why?

Because, as with SEO, many of the things that actually move the needle can’t be automated by software.

A tool can help with parts of the GEO process. It can legitimately belong to the GEO category and surface data, insights, and recommendations.

But it can’t replace the thinking, judgment, and decisions that make those insights matter.

The actual execution of optimization, meaning the actions that lead to your brand being mentioned by an AI model, is done by humans: either your in-house SEO or GEO team or an external agency you work with.

Here are a few examples:

- Is the software planting brand mentions on external sites? If so, please explain how that’s possible without hacking websites.

- Is the software editing text on your site directly? I’d love to see a brand that gives any SaaS tool write permissions to its CMS. And even if it only suggests changes, are you really going to copy and paste them blindly without checking whether they conflict with SEO principles? LLM-friendly doesn’t always mean SEO-friendly.

You get the point.

Many AI visibility tools publish slick case studies with titles like, “How we increased brand mentions in LLMs by X%.” That framing is a marketing tactic that claims ownership of the final outcome.

Yes, the software may have helped, but it didn’t do the work. You’ll never see that disclaimer in pitch decks designed to maintain high conversion rates.

It’s like a bottled water brand running a testimonial from a healthy person saying, “I drink this every day.”

That may be true, but is the person healthy because of the water, or because they eat well, exercise, sleep enough, and think positively?

When you see a GEO case study published by a software company, remember this: There’s always another side.

That side is whoever actually executes the work, much of which the tool itself never recommends.

3. No one really knows the true search volume of prompts

As much as we’d all like to claim we have this data, no one does.

OpenAI and other LLM companies don’t share public, live usage data comparable to what you’d find in Google Analytics or Search Console.

That information simply isn’t exposed.

As a result, no tool or service provider can know the true search volume of prompts. Even one given access to a private sample of user data would be working from data that isn’t representative.

Instead, most platforms build estimates using third-party datasets, clickstream panels, projections, or shared user logs, then apply extrapolation models to forecast relative popularity.

That approach can provide a rough directional view, but it’s still just that: a forecast, not a fact.
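Here’s a minimal sketch of the simplest form of that extrapolation, assuming a naive scale-up in which a panel is treated as a miniature copy of the whole user base. The function name, panel size, and prompt count are hypothetical, and the 800 million user figure from Point 1 is used only for scale.

```python
def extrapolate_prompt_volume(panel_prompts: int, panel_size: int, population: int) -> float:
    """Naive scale-up of a panel count to the full user base.

    Assumes the panel behaves exactly like the whole population, which is
    the very assumption no one can verify for LLM usage.
    """
    if panel_size <= 0:
        raise ValueError("panel_size must be positive")
    weight = population / panel_size  # how many real users each panelist stands in for
    return panel_prompts * weight

# Hypothetical panel: 50,000 users who sent 120 prompts on a given topic in a month.
estimate = extrapolate_prompt_volume(120, 50_000, 800_000_000)
print(f"{estimate:,.0f}")  # 1,920,000

# One extra matching prompt in the panel moves the estimate by 16,000.
print(f"{extrapolate_prompt_volume(121, 50_000, 800_000_000) - estimate:,.0f}")  # 16,000
```

Because every observed prompt is multiplied by the same weight, any skew in who joins the panel gets multiplied along with it.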

So the next time you see a chart showing “prompt volumes” for ChatGPT or Gemini, treat it accordingly.

It’s an educated guess, not an absolute truth.

4. AI visibility can’t be measured like search rankings

Unlike traditional search engines, which produce largely deterministic results by ranking indexed pages, LLMs generate answers in a probabilistic way.

Each response is created on the fly based on likelihood, not fixed rankings.

While search results tend to look similar regardless of who the user is, AI chatbots generate different answers for different people because the probabilities behind each response are influenced by the individual user and their context.

Here’s what most people don’t realize: The algorithms behind LLMs are rewarded for guessing rather than admitting, “I don’t know.”

So when you ask ChatGPT, “Which car insurance company do you recommend?” it doesn’t actually know the correct answer, because there isn’t one.

Instead, it produces a response that’s statistically most likely to sound right to you. For someone else asking the exact same question, the model might generate something entirely different.
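A toy Python example makes the difference between ranking and sampling concrete. It assumes made-up probabilities for each brand being recommended in response to one prompt, for one user context. Real LLMs sample text token by token rather than picking whole answers, so treat this as an illustration of sampling, not a model of ChatGPT.

```python
import random

# Hypothetical probabilities that the answer recommends each brand,
# for one prompt and one user context. Invented for illustration.
answer_probs = {"Brand A": 0.45, "Brand B": 0.35, "Brand C": 0.20}

def ranked_result(probs: dict[str, float]) -> list[str]:
    """Ranking-style output: the same ordered list every time."""
    return sorted(probs, key=probs.get, reverse=True)

def sampled_answer(probs: dict[str, float], rng: random.Random) -> str:
    """Sampling-style output: draws one brand in proportion to its probability."""
    brands = list(probs)
    return rng.choices(brands, weights=[probs[b] for b in brands], k=1)[0]

rng = random.Random(42)  # fixed seed so the run is reproducible
print(ranked_result(answer_probs))  # ['Brand A', 'Brand B', 'Brand C']
print([sampled_answer(answer_probs, rng) for _ in range(10)])
```

Change the seed and the sequence of sampled brands changes, while the ranked list stays fixed. In a real chatbot, user context also shifts the probabilities themselves, which widens the spread further.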

We’ve all seen it happen. ChatGPT confidently provides a wrong answer, sometimes even citing nonexistent sources.

That phenomenon is known as artificial hallucination, and it’s one of the main reasons large language models can’t fully replace traditional search.

When users are looking for information, especially with commercial intent, they want certainty and objective correctness, not probabilistic storytelling.

Yes, if you’re logged into Google, your results will be slightly personalized, but the variation between users is minor.

With ChatGPT, the variation is much larger because the output depends on everything the model knows about that user, from prior chats to contextual history.

So here’s the real question: If every response is generated in real time and shaped by user context, how can monitoring tools claim to know exactly when and where a brand appears?

From what I’ve seen, there are only two main methods in the market, and unfortunately, 99% of companies rely on the first.

The traditional SEO monitoring model: Averaging results across many people

This approach mirrors the “wisdom of the crowd” model from the early SEO world.

It collects large volumes of data points from multiple sources, including:

- Third-party browser extensions that track real users.

- Clickstream panels.

- Companies that sell aggregated usage data.

It then combines them into a single average.

While this method provides a broad view of general brand visibility, it’s context-blind.

It treats every user the same, smoothing out the nuances that actually define how a specific audience interacts with AI.
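A small sketch shows why a single average is context-blind. The two audience segments and their counts are hypothetical, and the pooling is a plain total-mentions-over-total-answers calculation, not any specific vendor’s method.

```python
# Hypothetical counts of AI answers observed per audience segment,
# and how many of those answers mentioned the brand.
segments = {
    "small_business_owners": {"answers": 2_000, "mentions": 800},
    "students": {"answers": 8_000, "mentions": 400},
}

def mention_rate(answers: int, mentions: int) -> float:
    """Share of answers that mentioned the brand."""
    return mentions / answers

def pooled_rate(data: dict[str, dict[str, int]]) -> float:
    """Blend every segment into one number, as an aggregated panel would."""
    total_answers = sum(s["answers"] for s in data.values())
    total_mentions = sum(s["mentions"] for s in data.values())
    return mention_rate(total_answers, total_mentions)

for name, s in segments.items():
    print(f"{name}: {mention_rate(s['answers'], s['mentions']):.0%}")
print(f"pooled: {pooled_rate(segments):.0%}")
```

The pooled figure of 12% describes neither audience: one sees the brand in 40% of answers, the other in 5%.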

The AI visibility monitoring model: Sampling results within a persona

This method recognizes that AI is non-deterministic, meaning it can produce different answers to the same question.

Instead of gathering random data, we at Chatoptic focus on a specific persona and run repeated inferences for that exact profile.

By analyzing the frequency of those results, we identify the stable mode, the most consistent, high-probability answer.

It’s not a flat average across everyone. It’s a more precise reflection of what a specific target user is likely to see.
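Here’s a minimal sketch of the frequency-and-mode step, assuming the repeated runs for one persona have already been collected and parsed into the brands each answer named. The runs are hardcoded, hypothetical stand-ins, and this illustrates the idea rather than Chatoptic’s implementation, which isn’t detailed here.

```python
from collections import Counter

# Hypothetical results of five runs of the same prompt for one persona.
# Each tuple lists the brands an answer named, in order of appearance.
runs = [
    ("Brand A", "Brand B"),
    ("Brand A", "Brand B"),
    ("Brand A", "Brand C"),
    ("Brand A", "Brand B"),
    ("Brand B", "Brand C"),
]

def stable_mode(responses: list[tuple[str, ...]]) -> tuple[tuple[str, ...], float]:
    """Most frequent answer and the share of runs that produced it."""
    answer, count = Counter(responses).most_common(1)[0]
    return answer, count / len(responses)

def brand_frequency(responses: list[tuple[str, ...]], brand: str) -> float:
    """Share of runs in which the brand was mentioned at all."""
    return sum(brand in r for r in responses) / len(responses)

print(stable_mode(runs))                 # (('Brand A', 'Brand B'), 0.6)
print(brand_frequency(runs, "Brand C"))  # 0.4
```

Five runs is far too few for real use, and treating answers as ordered tuples means ("Brand B", "Brand A") would count as a different answer, so a real tool has to decide how to normalize that. Both numbers, though, come from one persona’s distribution rather than a blend of everyone’s.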

Bottom line: No method offers a complete reflection of reality.

Every tool in this space operates within the same limitations. The difference is that we’re upfront about it.

5. What’s outside your site matters more for GEO than what’s inside it

Most GEO tools and agencies focus on optimizing a brand’s website content and technical setup.

Yet that’s often the factor with the least impact on the most important GEO KPI: whether your brand name actually appears in AI answers.

Why?

Because, just as backlinks in SEO give Google signals of credibility, external brand mentions teach large language models about relevance and authority.

Think about it this way: If you ask a random lawyer who the best attorney in town is and he says, “Me,” you’ll probably want a second opinion.

That’s similar to a brand writing about itself on its own website. But if several other people independently mention the same name, that’s the signal that really matters.

An Ahrefs analysis found that, among the factors it examined, brand web mentions showed the strongest correlation with AI Overview brand visibility, with a correlation coefficient of 0.664.

That suggests models rely far more on off-site context than on what’s written on a brand’s own website.

Some sources carry more authority than others. Certain domains are weighted heavily during model training, while others barely count.

A recent Semrush study found that Reddit and LinkedIn rank among the top five most-cited domains across ChatGPT, Google’s AI Overviews, and Perplexity.

The key takeaway is simple: If you want your brand to appear in AI answers, you can’t ignore the off-site layer.

Finding those sources is the easy part. Most tools can show you where to be.

Earning your place there, in a way that sounds natural and trustworthy, takes human effort, as discussed in Point 2.

So why do so many tools still obsess over on-page optimization?

Because it’s the one area fully under your control. It’s far easier to tweak your own website than to influence what others publish about you.

That’s why most AI visibility tools center their dashboards and recommendations on “optimizing key pages.” It’s convenient, scalable, and measurable.

Let’s be honest: Those optimizations mainly improve how your pages rank among cited sources in AI answers and make performance graphs look better.

But is that really the KPI that counts? Keep reading.

6. The most important KPI in GEO is your brand being mentioned within LLM answers

Appearing as a cited source in an AI answer may look good on a dashboard, but does it have any real marketing or business value beyond simply knowing, “I was cited”?

What about traffic from citations?

Six months ago, Matthew Prince, CEO of Cloudflare, shared several data points that put this into perspective.

Ten years ago, Google crawled about two pages for every visitor it sent to a publisher.

Six months before his remarks:

- Google: 6:1

- OpenAI: 250:1

- Anthropic: 6,000:1

At the time of his remarks:

- Google: 18:1

- OpenAI: 1,500:1

- Anthropic: 60,000:1

In other words, for every 1,500 pages crawled by GPTBot, only one visitor clicks out of ChatGPT to an external site.
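The same figures can be turned into growth factors and per-crawl referral rates with a few lines of Python. The ratios are the ones cited above; the variable names are illustrative.

```python
# Pages crawled per visitor referred, as cited above.
earlier = {"Google": 6, "OpenAI": 250, "Anthropic": 6_000}
recent = {"Google": 18, "OpenAI": 1_500, "Anthropic": 60_000}

for company, ratio in recent.items():
    growth = ratio / earlier[company]  # how many times worse the ratio got
    visits_per_crawl = 1 / ratio       # expected visits per page crawled
    print(f"{company}: {growth:.0f}x worse, {visits_per_crawl:.4%} of crawls yield a visit")
```

By those numbers, the ratio worsened roughly 3x for Google, 6x for OpenAI, and 10x for Anthropic, and only about 0.07% of OpenAI crawls correspond to a referred visit.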

What about AI Overviews?

Adam Gnuse recently published an analysis that examined performance data from more than 20,000 queries across multiple industries.

The data showed that even top placements within Google’s AI Overviews behave more like a traditional Position 6 result in terms of clicks.

Visibility may be high, but click-through rates drop sharply, and by the time a brand appears fourth or fifth in an Overview, engagement nearly disappears.

The takeaway was clear: AI visibility does not equal traffic. Citations within AI Overviews consistently underperform compared to traditional blue links.

In a TechCrunch interview, Reddit CEO Steve Huffman said AI chatbots are not a meaningful traffic driver for Reddit today, even though the platform is one of the most cited sources in LLM answers.

Google Search and direct visits remain Reddit’s dominant traffic sources by a wide margin.

So no, traffic isn’t zero, but it’s hardly a channel you can rely on to drive visitors to your website.

Citations and clicks are nice to have, but they’re not the primary goal.

What truly matters, the holy grail of GEO, is having your brand name appear directly within the AI’s answer.

7. GEO practices without proper SEO alignment can backfire

Another reminder of Point 2.

Let’s break it down with an example. Imagine you run a company that sells accounting software.

On your blog, you have an article titled “How to choose the best accounting software for a small business.”

It ranks well on Google and brings in about 2,000 organic visitors per month. Google rewards it because of strong on-page signals and credible off-site factors.

One day, you start using an AI visibility tool that suggests optimizations to increase your brand’s presence in AI answers.

You implement the tool’s recommendations, and your exposure in ChatGPT jumps. You even begin seeing around 200 visits per month coming from AI chatbots.

The platform reports success. Your dashboard looks great. The graphs are up.

But here’s the catch: One of those optimizations conflicts with SEO best practices.

Because LLMs favor paragraph structures that make extraction easier, you restructure parts of the page accordingly. That change may help your AI visibility, but it also weakens the page’s SEO performance.

As a result, the article drops to Position 9 on Google, and organic traffic falls from 2,000 to 200 visits per month.

Now the numbers look like this:

| Monthly visits | Before the optimization | After the optimization |
|---|---|---|
| Traffic from Google | 2,000 | 200 |
| Traffic from AI models | 0 | 200 |
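Adding up both channels shows the net effect of the example. A quick Python check, using the hypothetical monthly visit numbers from the table:

```python
# Monthly visits from the example above.
before = {"google": 2_000, "ai_models": 0}
after = {"google": 200, "ai_models": 200}

total_before = sum(before.values())
total_after = sum(after.values())
net_change = (total_after - total_before) / total_before

print(total_before, total_after, f"{net_change:.0%}")  # 2000 400 -80%
```

Total traffic falls from 2,000 to 400 visits per month, an 80% drop, even though the AI dashboard shows growth.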

Yes, you “boosted your AI visibility,” but at what cost?

This isn’t a hypothetical example. I’ve seen it happen more than once.

As noted earlier, traffic isn’t always the most important metric. But unless you can connect AI mentions to brand search growth or conversions, that visibility doesn’t translate into business impact.

The problem is that most AI visibility tools measure success only within generative engines. They don’t account for your full search traffic mix or how these changes affect broader SEO performance.

So while their KPI charts may look impressive, the marketing KPIs that actually matter to your business may be quietly declining.

When search evolves, measurement must evolve with it

GEO is rooted in the search ecosystem we already know.

LLMs still rely on the open web, crawlers, and many of the same signals that shaped SEO for years.

In practice, however, visibility is now generated rather than listed, and what appears depends on context, intent, and who’s asking.

That difference matters.

Our research at Chatoptic shows that strong Google rankings don’t reliably translate into visibility within LLM answers, with only a 62% overlap.

That gap is why GEO requires its own analysis and measurement framework, not a recycled SEO one.
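The 62% figure isn’t defined in detail here. As one hypothetical way to express that kind of overlap, the sketch below measures what share of brands visible in Google’s top results also appear in an LLM answer for the same query. The brand sets are invented and the function name is illustrative.

```python
def overlap_share(google_brands: set[str], llm_brands: set[str]) -> float:
    """Share of Google-visible brands that also appear in the LLM answer."""
    if not google_brands:
        return 0.0
    return len(google_brands & llm_brands) / len(google_brands)

# Hypothetical brands for one query.
google_top = {"Brand A", "Brand B", "Brand C", "Brand D", "Brand E"}
llm_answer = {"Brand A", "Brand C", "Brand F"}

print(f"{overlap_share(google_top, llm_answer):.0%}")  # 40%
```

Other definitions, such as Jaccard similarity over both sets, would give a different number for the same data.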

As with every major shift in search, the risk isn’t ignoring the past. It’s assuming old metrics still tell the full story.

Progress comes from questioning assumptions, testing reality, and adapting how success is measured.

If you’re considering working with a GEO agency, evaluating an AI visibility tool, or know someone who is, share this article with them.

It could save time, money, and a lot of unnecessary frustration.
